Generative Agent Research Papers You Should Read

Research papers in an exciting field that you don't want to miss.

Image by pikisuperstar on Freepik

Generative Agents is a term coined by Stanford University and Google researchers in their paper Generative Agents: Interactive Simulacra of Human Behavior (Park et al., 2023). In this paper, the researchers explain that Generative Agents are computational software agents that simulate believable human behavior.

In the paper, they show how agents can act as humans would (writing, cooking, speaking, voting, sleeping, and so on) by building on a generative model, specifically a Large Language Model (LLM). By harnessing natural language, the agents can draw inferences about themselves, other agents, and their environment.

The researchers construct a system architecture that uses a large language model to store, synthesize, and apply relevant memories to generate believable behavior, enabling generative agents. The architecture consists of three components:

- Memory stream. The system records the agent's experiences in natural language and serves as a reference for the agent's future actions.

- Reflection. The system synthesizes experiences into higher-level memories (reflections) that help the agent learn and behave better.

- Planning. The system translates the insights from reflection into high-level action plans and allows the agent to react to its environment.

The reflection and planning components work synergistically with the memory stream to influence the agent's future behavior.
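To make the memory stream more concrete, here is a minimal Python sketch loosely following the retrieval scoring the paper describes: each memory is scored by recency, importance, and relevance, the three scores are min-max normalized and summed with equal weights, and the top-k memories are returned. The `Memory` class, the toy two-dimensional embeddings, and the hard-coded importance values are assumptions for illustration; in the paper, the LLM rates importance and a language model produces the embeddings. The `should_reflect` helper sketches the idea of triggering a reflection once the importance of recent memories adds up past a threshold; the threshold value used here is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    last_access_hour: float  # sandbox hour when the memory was last retrieved
    importance: float        # 1-10; hard-coded here, LLM-rated in the paper
    embedding: list[float]   # toy vector standing in for a real text embedding


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(values: list[float]) -> list[float]:
    # Scale scores to [0, 1]; identical scores carry no ranking signal.
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def retrieve(memories, query_embedding, now_hour, k=3, decay=0.995):
    # Recency decays exponentially with hours since the last retrieval.
    recency = [decay ** (now_hour - m.last_access_hour) for m in memories]
    importance = [m.importance for m in memories]
    relevance = [cosine(m.embedding, query_embedding) for m in memories]
    # Equal weights for the three normalized components.
    scores = [r + i + v for r, i, v in
              zip(min_max(recency), min_max(importance), min_max(relevance))]
    ranked = sorted(zip(scores, memories), key=lambda pair: pair[0], reverse=True)
    top = [m for _, m in ranked[:k]]
    for m in top:
        m.last_access_hour = now_hour  # retrieval refreshes recency
    return top


def should_reflect(recent: list[Memory], threshold: float = 150) -> bool:
    # Reflect once the importance of recent memories adds up past a threshold.
    return sum(m.importance for m in recent) > threshold


memories = [
    Memory("Isabella is planning a Valentine's Day party", 0, 8, [1.0, 0.0]),
    Memory("Ate breakfast", 5, 2, [0.0, 1.0]),
    Memory("Talked with Maria about the party", 6, 6, [0.9, 0.1]),
]
top = retrieve(memories, query_embedding=[1.0, 0.0], now_hour=10, k=2)
print([m.text for m in top])
```

Both party-related memories outrank breakfast for a party-related query because they are more important and more relevant, even though breakfast is more recent than the first party memory.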

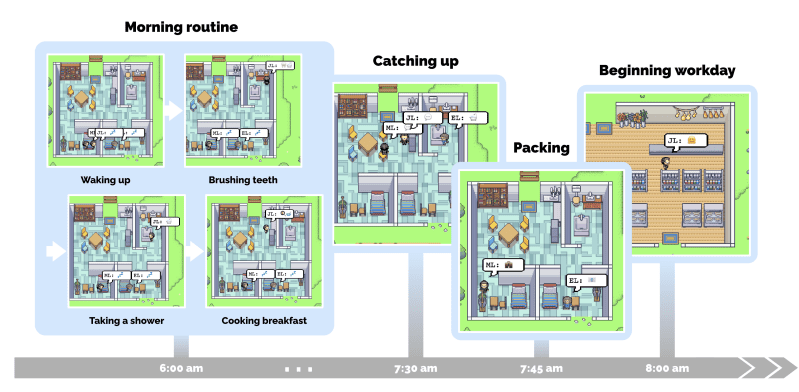

To demonstrate the architecture, the researchers built an interactive sandbox society of agents inspired by The Sims. The architecture is powered by ChatGPT and populates the sandbox with 25 interacting agents. An example of agent activity throughout the day is shown in the image below.

Generative Agent activity and interaction throughout the day (Park et al., 2023)

The full code for creating Generative Agents and simulating them in the sandbox has been open-sourced by the researchers and is available in their repository. The instructions are simple enough to follow without much trouble.

With Generative Agents becoming an exciting field, a lot of research now builds on this work. In this article, we will explore several Generative Agents papers that you should read. What are they? Let's get into it.

1. Communicative Agents for Software Development

The Communicative Agents for Software Development paper (Qian et al., 2023) proposes a new approach that uses Generative Agents to revolutionize software development. The researchers' premise is that the entire software development process could be streamlined and unified through natural language communication between Large Language Model (LLM) agents. The tasks include analyzing requirements, writing code, generating documentation, and more.

The researchers point out that generating entire software systems with an LLM faces two major challenges: hallucination and a lack of cross-examination in decision-making. To address these problems, they propose a chat-based software development framework called ChatDev.

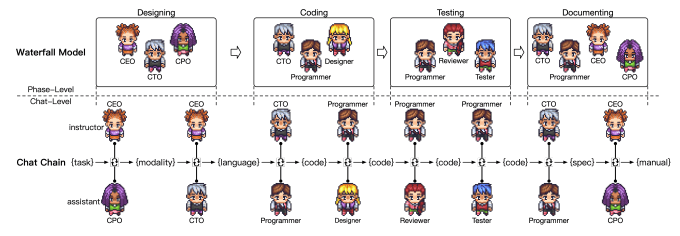

The ChatDev framework follows four phases: designing, coding, testing, and documenting. In each phase, ChatDev assigns several agents to different roles, such as software programmer and code reviewer. To keep communication between agents running smoothly, the researchers developed a chat chain that divides each phase into sequential atomic subtasks. Each subtask is solved through collaboration and interaction between two agents.
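The chat chain can be sketched as a loop over phases and their subtasks, where each subtask is a short dialogue between an instructor agent and an assistant agent, and the result of one subtask becomes the input to the next. This is a hypothetical Python sketch, not ChatDev's code: `stub_llm` stands in for a real LLM call, and the phase, subtask, and role names are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

# Takes (role, prompt) and returns that role's reply.
LLM = Callable[[str, str], str]


@dataclass
class Subtask:
    name: str
    instructor: str  # role that gives instructions
    assistant: str   # role that carries them out


def run_subtask(llm: LLM, task: Subtask, context: str, max_turns: int = 2) -> str:
    """Instructor and assistant alternate for a fixed number of turns."""
    message = f"[{task.name}] {context}"
    for _ in range(max_turns):
        instruction = llm(task.instructor, message)
        message = llm(task.assistant, instruction)
    return message  # the assistant's final answer is the subtask's output


def run_chat_chain(llm: LLM, phases: dict[str, list[Subtask]], requirement: str) -> list[str]:
    """Run phases in order; each subtask's output feeds the next subtask."""
    context, log = requirement, []
    for phase, subtasks in phases.items():
        for task in subtasks:
            context = run_subtask(llm, task, context)
            log.append(f"{phase}/{task.name}: {context}")
    return log


def stub_llm(role: str, prompt: str) -> str:
    # Hypothetical stand-in: a real system would prompt an LLM as this role.
    return f"{role} output"


phases = {
    "designing": [Subtask("choose modality", "CEO", "CPO")],
    "coding": [Subtask("write code", "CTO", "Programmer")],
    "testing": [Subtask("review code", "Reviewer", "Programmer"),
                Subtask("run tests", "Tester", "Programmer")],
    "documenting": [Subtask("write manual", "CEO", "Programmer")],
}
for line in run_chat_chain(stub_llm, phases, "Build a Gomoku game"):
    print(line)
```

Each subtask stays a focused two-party conversation; replacing `stub_llm` with an actual model call is where a real implementation would begin.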

The ChatDev framework is shown in the image below.

The proposed ChatDev Framework (Qian et al., 2023)

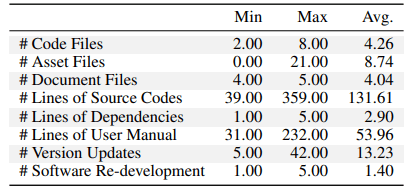

The researchers ran various experiments to measure how ChatDev performs in software development. Using gpt-3.5-turbo-16k, the software statistics from the experiments are shown below.

The ChatDev Framework Software Statistics (Qian et al., 2023)

These figures are statistics describing the software systems generated by ChatDev. For example, the smallest generated system contained 39 lines of code and the largest contained 359. The researchers also reported that 86.66% of the generated software systems ran properly.

It's a great paper that shows the potential to change how developers work. Read the paper to understand the full implementation of ChatDev. The full code is also available in the ChatDev repository.

2. AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors in Agents

AgentVerse is a framework proposed by Chen et al., 2023 that uses Large Language Models to simulate groups of agents, enabling a dynamic problem-solving procedure within the group and adjusting the group's members as the work progresses. The study addresses the challenge of static group dynamics, in which autonomous agents cannot adapt and evolve while solving problems.

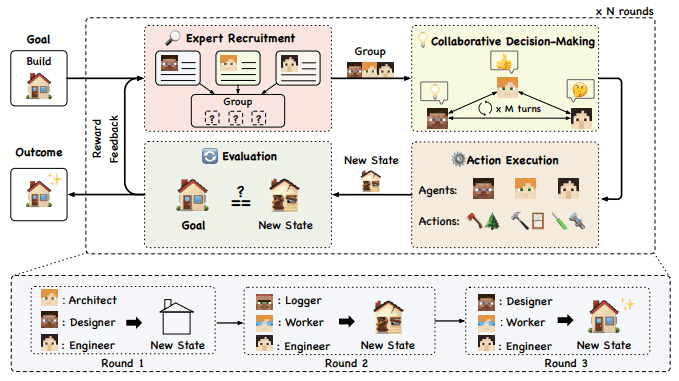

AgentVerse splits the problem-solving process into four stages:

- Expert Recruitment: The group's expert agents are selected or adjusted to align with the problem and its solution.

- Collaborative Decision-Making: The agents discuss to formulate a solution and strategy for the problem.

- Action Execution: The agents execute actions in the environment based on the decision.

- Evaluation: The current state is evaluated against the goal. If the goal has not yet been met, the feedback (reward) is returned to the first stage.
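The four stages form a loop. The toy Python sketch below, in which every function and value is a hypothetical stand-in rather than AgentVerse's API, shows how evaluation feedback flows back into expert recruitment so that the group's composition can change between rounds. Real recruitment, discussion, and evaluation would each be driven by LLM agents.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    goal_met: bool
    feedback: str


def recruit_experts(feedback: str) -> list[str]:
    # Stage 1: pick expert roles; feedback can add a missing expert.
    experts = ["planner", "coder"]
    if "needs tests" in feedback:
        experts.append("tester")
    return experts


def decide(experts: list[str], task: str) -> list[str]:
    # Stage 2: every expert contributes one step to the joint plan.
    return [f"{expert}: step for {task}" for expert in experts]


def execute(plan: list[str], progress: int) -> int:
    # Stage 3: each executed step advances progress by one unit.
    return progress + len(plan)


def evaluate(progress: int, target: int) -> Evaluation:
    # Stage 4: compare progress with the goal and produce feedback.
    if progress >= target:
        return Evaluation(True, "goal reached")
    return Evaluation(False, "needs tests")


def agentverse_loop(task: str, target: int, max_rounds: int = 5):
    progress, feedback, experts = 0, "", []
    for round_no in range(1, max_rounds + 1):
        experts = recruit_experts(feedback)
        plan = decide(experts, task)
        progress = execute(plan, progress)
        result = evaluate(progress, target)
        if result.goal_met:
            return round_no, experts
        feedback = result.feedback  # loop back to recruitment
    return None, experts


rounds, team = agentverse_loop("build a CLI tool", target=5)
print(rounds, team)
```

Running it prints `2 ['planner', 'coder', 'tester']`: the first round falls short, and the resulting feedback brings a tester into the group for the second round.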

The overall structure of AgentVerse is shown in the image below.

AgentVerse Framework (Chen et al., 2023)

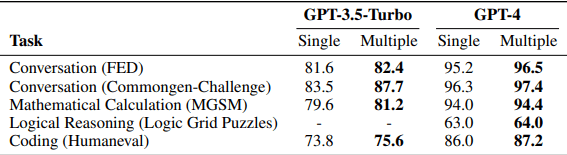

The researchers experimented with the framework and compared AgentVerse to solutions produced by individual agents. The results are presented in the image below.

Performance Analysis of AgentVerse (Chen et al., 2023)

AgentVerse generally outperforms individual agents across the presented tasks. This suggests that a group of collaborating generative agents can solve problems better than a single agent working alone. You can try out the framework through its repository.

3. AgentSims: An Open-Source Sandbox for Large Language Model Evaluation

Evaluating LLMs' abilities is still an open question in the community. Three issues limit proper LLM evaluation: the abilities that tasks can evaluate are limited, benchmarks are vulnerable, and metrics are not objective. To handle these problems, Lin et al., 2023 proposed task-based evaluation as an LLM benchmark in their paper. The researchers hope this approach becomes a standard way to evaluate LLMs, as it could alleviate all of these problems. To achieve this, they introduce a framework called AgentSims.
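Task-based evaluation can be illustrated with a small harness: each task defines a goal, the actions the environment allows, and a step budget, and the model under test is scored by whether it completes the task within that budget. This is a hypothetical Python sketch, not AgentSims' API; the hard-coded `focused` and `distracted` policies stand in for LLM agents, and the tasks are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable

# The model under test: given the still-needed items and the allowed actions,
# return the action to take next.
Policy = Callable[[set[str], list[str]], str]


@dataclass
class Task:
    name: str
    required: set[str]  # goal: items the agent must obtain
    actions: list[str]  # actions the environment allows
    max_steps: int      # step budget


def run_task(policy: Policy, task: Task) -> dict:
    needed, steps = set(task.required), 0
    while needed and steps < task.max_steps:
        needed.discard(policy(needed, task.actions))  # a needed action obtains that item
        steps += 1
    return {"task": task.name, "success": not needed, "steps": steps}


def benchmark(policy: Policy, tasks: list[Task]) -> float:
    # Objective metric: fraction of tasks completed within budget.
    results = [run_task(policy, task) for task in tasks]
    return sum(r["success"] for r in results) / len(tasks)


def focused(needed: set[str], actions: list[str]) -> str:
    return sorted(needed)[0]  # always works toward the goal


def distracted(needed: set[str], actions: list[str]) -> str:
    return actions[0]  # ignores the goal


tasks = [
    Task("cook breakfast", {"eggs", "bread"}, ["coffee", "eggs", "bread"], 3),
    Task("plan party", {"invite", "decorate", "buy cake"},
         ["invite", "decorate", "buy cake"], 2),
]
print(benchmark(focused, tasks), benchmark(distracted, tasks))
```

The score is a success rate on concrete goals rather than a judgment of generated text: `focused` completes the first task but runs out of steps on the second (0.5), while `distracted` completes neither (0.0).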

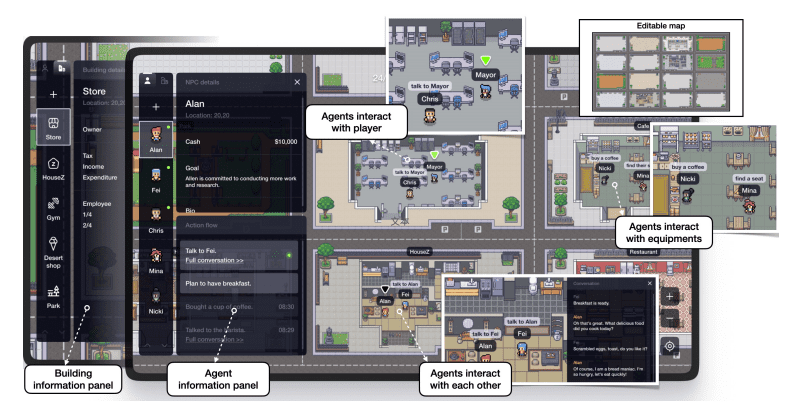

AgentSims is an interactive, visualized infrastructure for curating evaluation tasks for LLMs. The overall objective of AgentSims is to give researchers and experts a platform that streamlines the task design process and uses the resulting tasks as an evaluation tool. The AgentSims front end is presented in the image below.

AgentSims Front End (Lin et al., 2023)

Because AgentSims targets anyone who needs an easier way to evaluate LLMs, the researchers developed a front end that lets us interact with it through a UI. You can also try the full demo on their website or access the full code in the AgentSims repository.

Conclusion

Generative Agents are a recent LLM-based approach to simulating human behavior. The research by Park et al., 2023 has shown great potential for what Generative Agents could do. That is why many studies based on Generative Agents have appeared and opened many new doors.

In this article, we have talked about three different Generative Agents research papers:

- Communicative Agents for Software Development (Qian et al., 2023)

- AgentVerse: Facilitating Multi-Agent Collaboration and Exploring Emergent Behaviors in Agents (Chen et al., 2023)

- AgentSims: An Open-Source Sandbox for Large Language Model Evaluation (Lin et al., 2023)

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and Data tips via social media and writing media.