Image from Unsplash by Clément Hélardot

2022 is an excellent year for any data person, especially those who use Python, as there are many exciting packages to improve our data capabilities. Various must-learn Data Python packages in 2022 have been outlined, and we might want something new to improve our stack in the new year.

Facing the year 2023, various Python packages will improve our data workflow in the new year. What are these packages? Let’s take a look at my recommendation.

From the data cleaning packages to machine learning implementation, these are the top data Python packages you want to know in 2023.

1. Pyjanitor

Pyjanitor is an open-source Python package developed specifically for data cleaning routines via method chaining and was designed to improve Pandas API for data cleaning.

We know many Pandas methods for data cleaning, such as dropna to remove all the missing values. With Pyjanitor, the data cleaning process with Pandas API would be heightened by introducing additional methods within the APIs. How is it work? Let’s try out the package with sample data.

We would use the Titanic training data from the Kaggle licenses under CC0: Public Domain for the sample. Let’s start by installing the Pyjanitor package.

Install

pip install pyjanitor

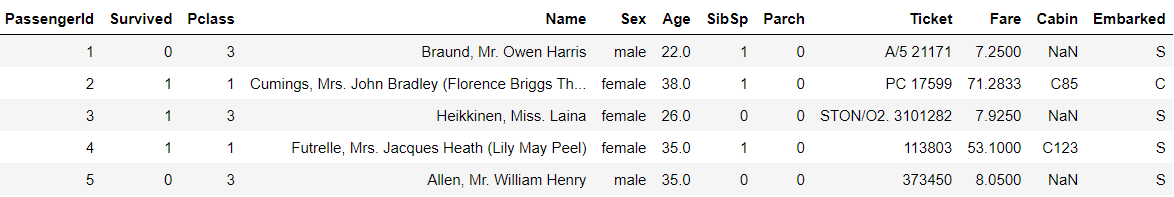

Let’s look at our current dataset before we do any data cleaning with Pyjanitor.

import pandas as pd

df = pd.read_csv('train.csv')

df.head()

Output

Image by Author

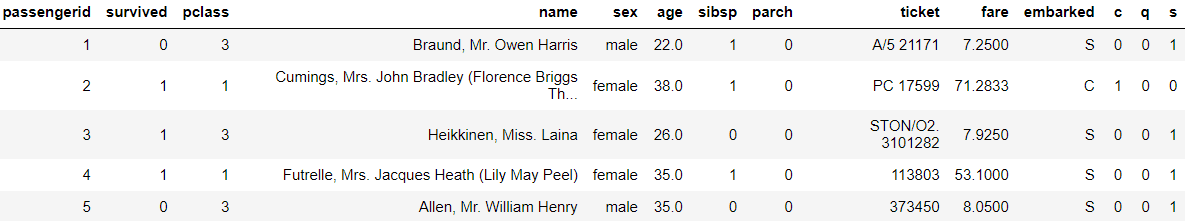

With the Pyjanitor package, we can do various extension data cleaning and implement method chains in how Pandas API works. Let’s see how the package works with the code below.

Code Example

import janitor

df.remove_columns(["Cabin"]).expand_column(column_name = 'Embarked').clean_names()

Output

Image by Author

By importing the Pyjanitor package, it would automatically be implemented within the Pandas DataFrame. In our code above, we have done the following things using Pyjanitor:

- Remove the ‘Cabin’ columns using the remove_columns method,

- Categorical Encoding (One-Hot Encoding) processing to the ‘Embarked’ column using expand_column method,

- Convert all the variable header names to lowercase, and if there are spaces will be replaced with underscores using the clean_names method.

There are still so many functions in Pyjanitor we could use for data cleaning. Please refer to their documentation for a complete API list.

2. Pingouin

Pingouin is a statistical analysis open-source Python package used for any common statistic activity for any data scientist. The package was designed for simplicity by providing a one-liner code but still providing various statistical tests to be used.

Install

pip install pingouin

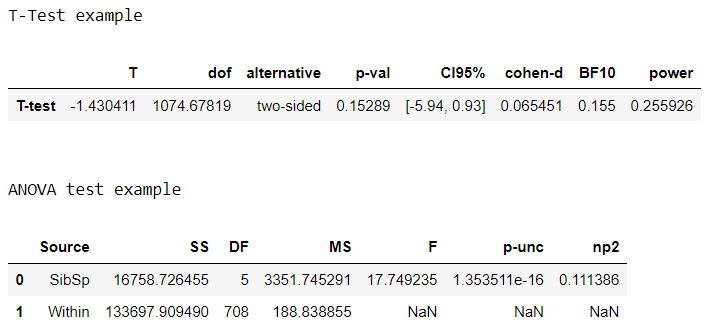

Having installed the package, let’s try to perform statistical analysis with Pingouin. For example, we would do a T-test and ANOVA test using the previous Titanic dataset.

Code Example

import pingouin as pg

#T-Test

print('T-Test example')

pg.ttest( df['Age'], df['Fare'])

print('\n')

# ANOVA test

print('ANOVA test example')

pg.anova(data=df, dv='Age', between='SibSp', detailed=True)

Output

Image by Author

With a single line, Pingouin provide the statistical test result in the data frame object. There are many more functions to help our analysis, which we can explore in the Pingouin APIs documentation.

3. PyCaret

PyCaret is an open-source Python package developed for automating the machine learning workflow. The package provides a low-code environment to hasten the model experiment by delivering an end-to-end machine-learning model tool.

In typical data science work, many activities exist, such as cleaning our data, selecting a model, doing hyperparameter tuning, and evaluating the model. PyCaret intends to eliminate all the hassle by minimizing all the required codes into as few lines as possible. The package is a collection of several machine learning frameworks into one. Let’s try out PyCaret to know more.

Install

pip install pycaret

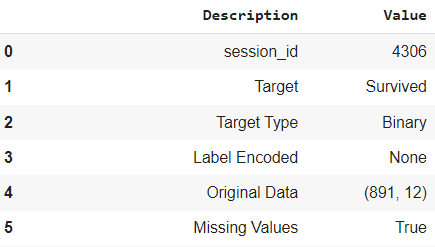

Using the previous Titanic dataset; we would develop a model classifier to predict the “Survive” variable.

Code Example

from pycaret.classification import *

clf_exp = setup(data = df, target = 'Survived')

Output

Image by Author

In the above code, we initiate the experiment using the setup function. By passing the data and the target, PyCaret would infer our data and develop a machine-learning model based on the given data. The actual output information is longer than the above image and is insightful to what happened in our modeling process.

Let’s look at the model result and infer the best model from the training data.

best_model = compare_models(sort = 'precision')

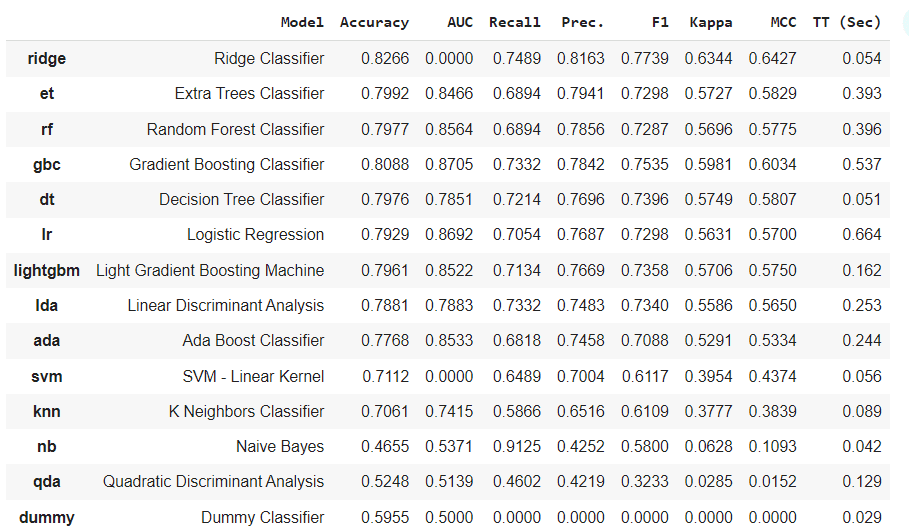

Output

Image by Author

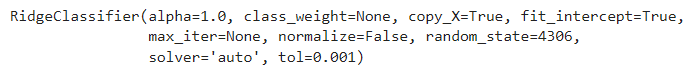

print(best_model)

Output

Image by Author

The PyCaret classifier experiment would test the training data into 14 different classifiers and give the best model. In our case, it is the RidgeClassifier.

There are still many experiments you could do with PyCaret. To explore more, please refer to their documentation.

4. BentoML

BentoML is an open-source Python package for quickly serving the model into production and with as few lines as possible. The package intended to focus on the productional machine learning model to be easily used by the user.

Let’s try out the BentoML package and learn how it works.

Install

pip install bentoml

For the BentoML example, we would use the code from the package tutorial with a little modification.

Code Example

We would train the model classifier using the iris dataset.

from sklearn import svm, datasets

iris = datasets.load_iris()

X, y = iris.data, iris.target

iris_clf = svm.SVC()

iris_clf.fit(X, y)

With BentoML, we could store our machine learning model in the local or cloud model store and retrieve it for production.

import bentoml

bentoml.sklearn.save_model("iris_clf", iris_clf)

Then we could use the stored model in the BentoML environment using the runner instance.

# Create a Runner instance and implement a runner instance in local

iris_clf_runner = bentoml.sklearn.get("iris_clf:latest").to_runner()

iris_clf_runner.init_local()

# Using the predictor on unseen data

iris_clf_runner.predict.run([[4.1, 2.3, 5.5, 1.8]])

Output

array([2])

Next; we could initiate the model service saved in the BentoML by running the following code to create a Python file and start the server.

%%writefile service.py

import numpy as np

import bentoml

from bentoml.io import NumpyNdarray

iris_clf_runner = bentoml.sklearn.get("iris_clf:latest").to_runner()

svc = bentoml.Service("iris_clf_service", runners=[iris_clf_runner])

@svc.api(input=NumpyNdarray(), output=NumpyNdarray())

def classify(input_series: np.ndarray) -> np.ndarray:

return iris_clf_runner.predict.run(input_series)

We start the server by running the code below.

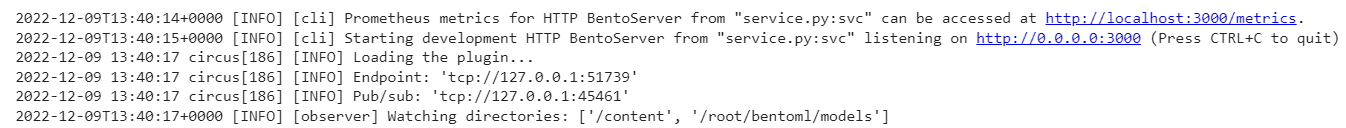

!bentoml serve service.py:svc --reload

Output

Image by Author

The output would show the current log of the development server and where we could access it. If we are satisfied with the development result, we could move on to production. I recommend you refer to the documentation for the production process.

5. Streamlit

Streamlit is an open-source Python package to create a custom web app for data scientists. The package provides insightful code to build and customize various data apps. Let’s try the package to learn how it works.

Install

pip install streamlit

Streamlit web app is running by executing the Python script using the streamlit. That is why we need to prepare the script before running it using the streamlit command before running it. We can run the next sample using your favorite IDE or Jupyter Notebook, but I would show how we create the web app with Streamlit in our Jupyter Notebook.

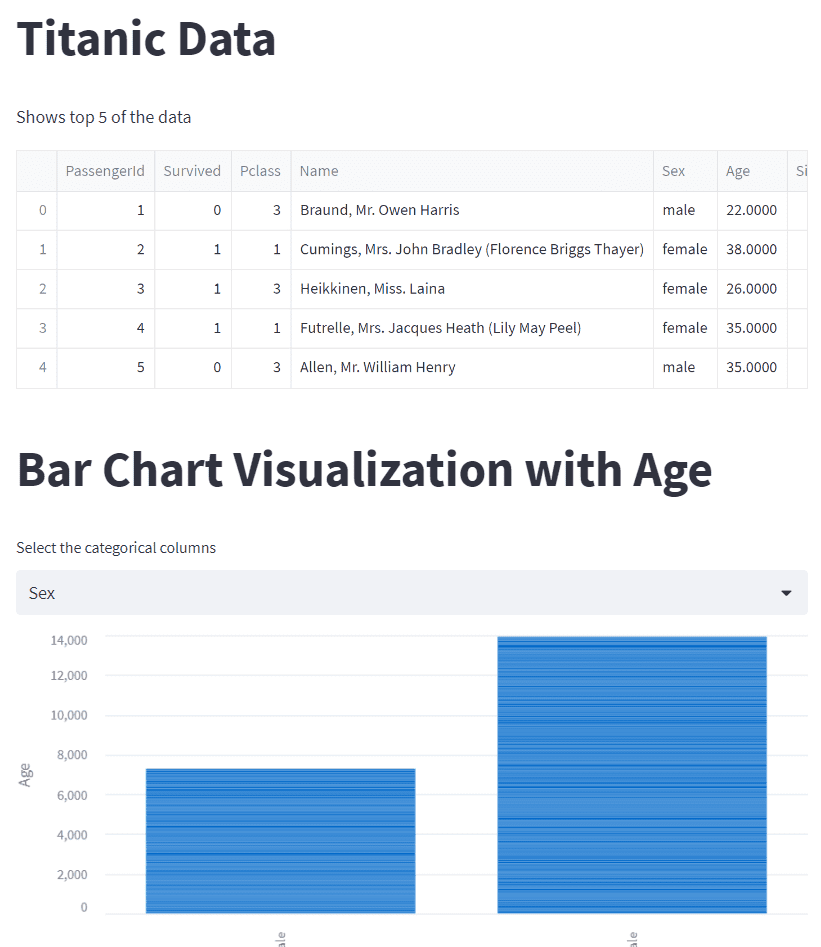

Code Example

%%writefile streamlit_example.py

import streamlit as st

import pandas as pd

import numpy as np

st.title('Titanic Data')

data = pd.read_csv('train.csv')

st.write('Shows top 5 of the data')

st.dataframe(data.head())

st.title('Bar Chart Visualization with Age')

col = st.selectbox('Select the categorical columns', data.select_dtypes('object').columns)

st.bar_chart(data, x = col, y='Age')

The above code would create a script called streamlit_example.py and create a web app similar to the output below if we run the Streamlit command.

!streamlit run streamlit_example.py

Image by Author

The code is easy to learn and would take no time at all for you to create your web app with Streamlit. You could refer to the documentation if you want to know more about what you could create with the Streamlit package.

Conclusion

Facing the year 2023, we should improve our data skillset better than in 2022. What better way to add our data arsenal than by learning from amazing Python packages that would help enhance our data workflow. These top Python packages are

- Pyjanitor

- Pingouin

- PyCaret

- BentoML

- Streamlit

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and Data tips via social media and writing media.