Reading Minds with AI: Researchers Translate Brain Waves to Images

Two researchers from Osaka University were able to reconstruct highly accurate images from human brain activity obtained by fMRI. Read this article if you are curious to find out what all the hype is about.

Image by Editor

Introduction

Imagine reliving your memories or constructing images of what someone is thinking. It sounds like something straight out of a science fiction movie, but with recent advances in computer vision and deep learning, it is becoming a reality. Although neuroscientists still struggle to fully explain how the human brain converts what our eyes see into mental images, AI seems to be getting better at this task. Two researchers from the Graduate School of Frontier Biosciences at Osaka University proposed a new method that uses Stable Diffusion, a latent diffusion model (LDM), to reconstruct images from human brain activity recorded with functional magnetic resonance imaging (fMRI). Although the paper “High-resolution image reconstruction with latent diffusion models from human brain activity” by Yu Takagi and Shinji Nishimoto is not yet peer-reviewed, it has taken the internet by storm because the results are strikingly accurate.

This technology has the potential to revolutionize fields like psychology, neuroscience, and even the criminal justice system. Imagine a suspect is asked where he was at the time of a murder and answers that he was at home, but the reconstructed image shows him at the crime scene. Pretty interesting, right? So how exactly does it work? Let's dig deeper into this research paper, its limitations, and its future scope.

How Does It Work?

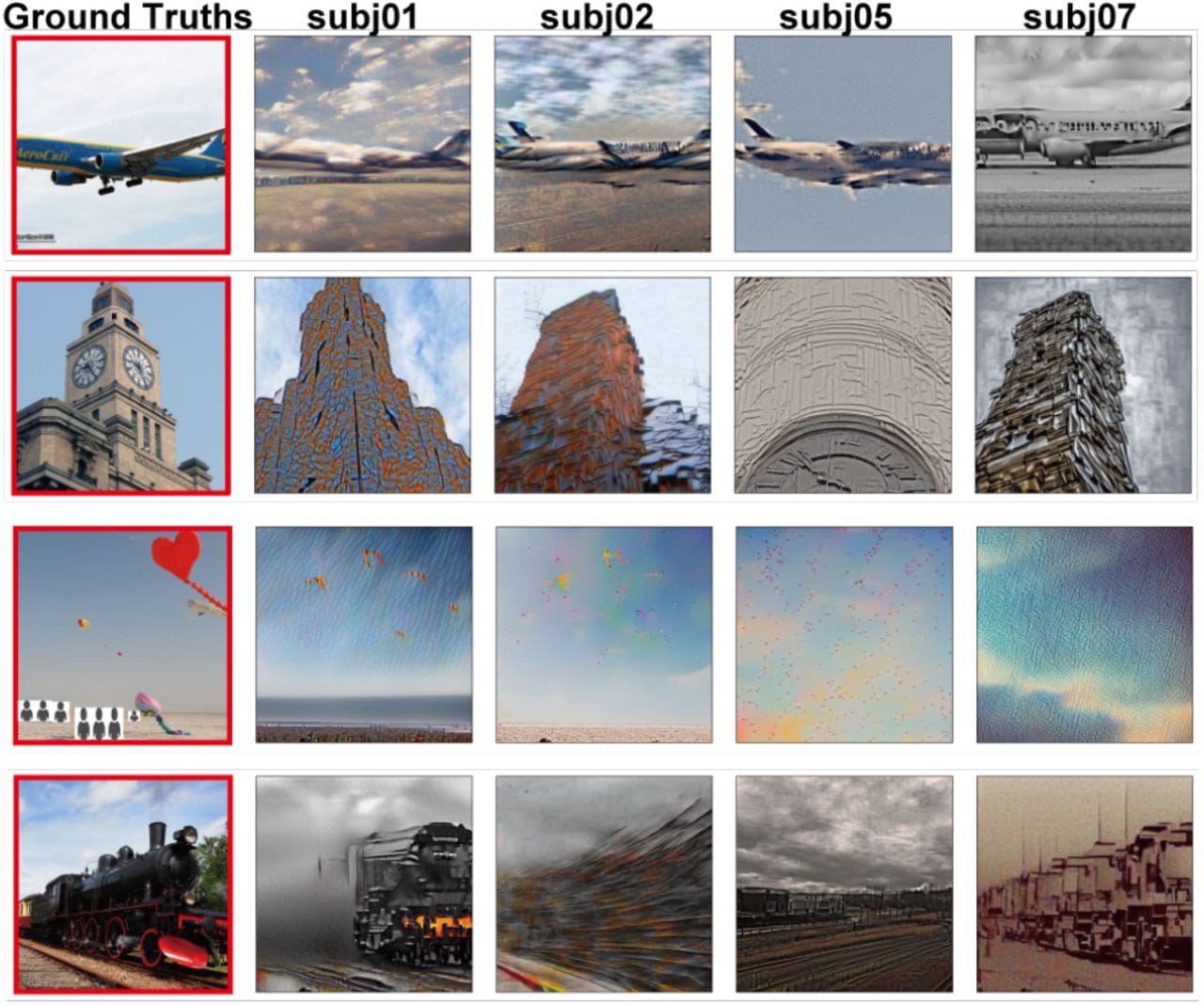

The researchers used the Natural Scenes Dataset (NSD) provided by the University of Minnesota. It contains data from fMRI scans of four subjects who each looked at 10,000 different images. A subset of 982 images viewed by all four subjects was used as the test dataset. Two different AI models were trained during the process. One linked the brain activity to the images the subjects viewed, while the other linked it to the text descriptions of those images. Together, these models allowed Stable Diffusion to turn the fMRI data into relatively accurate imitations of images that were not part of its training, achieving an accuracy of almost 80%.
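To make the idea of two separate decoders more concrete, here is a minimal sketch in plain Python. It assumes each decoder is a simple ridge-regularized linear map from voxel activity to a target vector, which this article does not specify. The data is synthetic, and the names `image_latent`, `text_embedding`, `ridge_fit`, and every size used below are made up for illustration.

```python
import random


def ridge_fit(X, Y, alpha=1.0):
    """Fit W minimizing ||XW - Y||^2 + alpha * ||W||^2.

    Solves (X^T X + alpha * I) W = X^T Y with Gauss-Jordan elimination.
    X is n_scans x n_voxels, Y is n_scans x n_targets (lists of lists).
    """
    n, d, k = len(X), len(X[0]), len(Y[0])
    # Augmented matrix [X^T X + alpha * I | X^T Y], one row per voxel.
    A = []
    for i in range(d):
        gram = [sum(X[r][i] * X[r][j] for r in range(n)) + (alpha if i == j else 0.0)
                for j in range(d)]
        rhs = [sum(X[r][i] * Y[r][c] for r in range(n)) for c in range(k)]
        A.append(gram + rhs)
    for col in range(d):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, d), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        scale = A[col][col]
        A[col] = [v / scale for v in A[col]]
        for r in range(d):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [row[d:] for row in A]  # n_voxels x n_targets


def predict(X, W):
    """Apply the linear map: one predicted target vector per scan."""
    return [[sum(x[i] * W[i][c] for i in range(len(x))) for c in range(len(W[0]))]
            for x in X]


def noisy_targets(X, W_true, rng, noise=0.1):
    """Synthetic targets: a hidden linear map plus Gaussian noise."""
    return [[sum(x[i] * W_true[i][c] for i in range(len(x))) + rng.gauss(0, noise)
             for c in range(len(W_true[0]))] for x in X]


def mse(Y_pred, Y_true):
    errs = [(p - t) ** 2 for yp, yt in zip(Y_pred, Y_true) for p, t in zip(yp, yt)]
    return sum(errs) / len(errs)


rng = random.Random(0)
N_VOXELS = 5  # toy size; real scans have thousands of voxels

# Synthetic "scans" (not real fMRI data), split into train and held-out test.
X_train = [[rng.gauss(0, 1) for _ in range(N_VOXELS)] for _ in range(200)]
X_test = [[rng.gauss(0, 1) for _ in range(N_VOXELS)] for _ in range(50)]

# Hypothetical hidden maps for the two targets the decoders must recover.
true_maps = {
    "image_latent": [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(N_VOXELS)],
    "text_embedding": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(N_VOXELS)],
}

test_error = {}
for name, W_true in true_maps.items():
    # One decoder per target, both trained on the same scans.
    W = ridge_fit(X_train, noisy_targets(X_train, W_true, rng), alpha=1.0)
    test_error[name] = mse(predict(X_test, W), noisy_targets(X_test, W_true, rng))

print(test_error)
```

In a real pipeline, the two predicted vectors would be handed to the image generator as its starting latent and its text conditioning. The sketch stops at the decoding step and only checks that both maps generalize to scans they were not trained on.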

Original Images (left) and AI-Generated Images for all Four Subjects

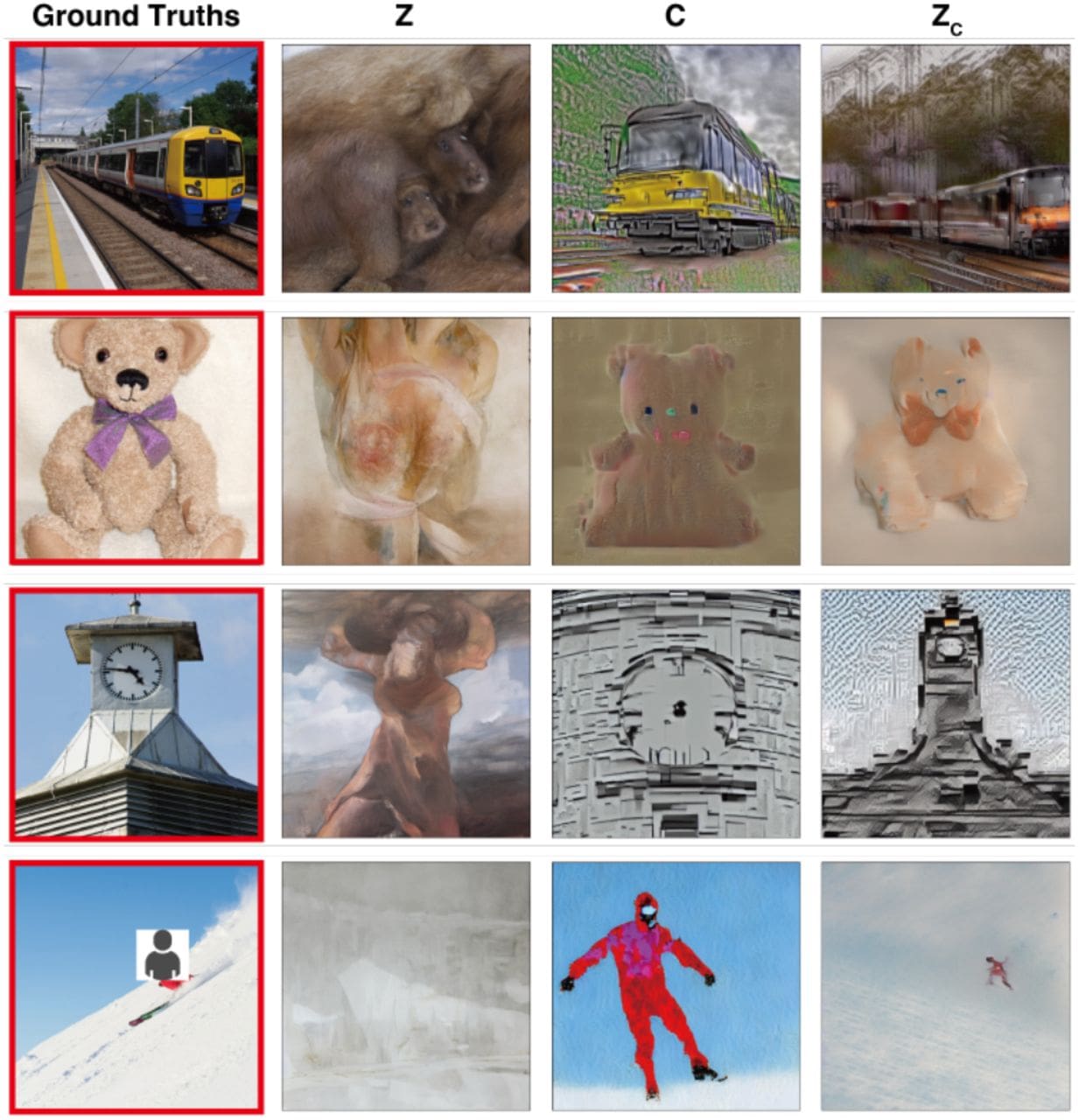

The first model was able to effectively regenerate the layout and perspective of the image being viewed. But it struggled with specific objects, such as the clock tower, and produced abstract, cloudy figures instead. Rather than using larger datasets to predict more detail, the researchers used the second AI model to associate keywords from the image captions with the fMRI scans. For example, if the training data contains a picture of a clock tower, the system associates the corresponding pattern of brain activity with that object. During the testing stage, if the participant exhibits a similar brain pattern, the system feeds the object’s keyword into Stable Diffusion’s normal text-to-image generator, which results in a convincing imitation of the real image.

(Leftmost) Photos seen by study participants, (2nd) layout and perspective from patterns of brain activity alone, (3rd) image from textual information alone, (rightmost) textual information combined with patterns of brain activity to re-create the object in the photo
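The keyword association described above can be pictured with a toy nearest-prototype lookup. This is only an illustration of the idea as the article explains it, not the method from the paper: the three-value "scans", the function names, and the use of cosine similarity are all assumptions made for the example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two activity patterns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def fit_keyword_prototypes(scans, keywords):
    """Average the training scans that share a caption keyword."""
    grouped = {}
    for scan, keyword in zip(scans, keywords):
        grouped.setdefault(keyword, []).append(scan)
    return {
        keyword: [sum(col) / len(patterns) for col in zip(*patterns)]
        for keyword, patterns in grouped.items()
    }


def decode_keyword(scan, prototypes):
    """Return the keyword whose prototype is most similar to the new scan."""
    return max(prototypes, key=lambda keyword: cosine(scan, prototypes[keyword]))


# Made-up three-voxel "scans" paired with keywords from image captions.
train_scans = [
    [0.9, 0.1, 0.0],
    [1.0, 0.2, 0.1],
    [0.1, 0.8, 0.9],
    [0.0, 1.0, 0.7],
]
train_keywords = ["clock tower", "clock tower", "airplane", "airplane"]

prototypes = fit_keyword_prototypes(train_scans, train_keywords)

# A new scan that resembles the clock-tower pattern.
prompt = decode_keyword([0.8, 0.0, 0.2], prototypes)
print(prompt)  # clock tower
```

The decoded keyword plays the role of the text prompt that would then be passed to a text-to-image generator. That final generation step is left out here.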

In this paper, the researchers also claimed that the study was the first of its kind in which each component of an LDM (Stable Diffusion) was quantitatively interpreted from a neuroscience perspective. They did so by mapping specific components to distinct regions of the brain. Although the proposed model is still at a nascent stage, people were quick to react to the paper and dubbed the model the next mind reader.

Limitations and Future Scope

Although the accuracy of this model is quite impressive, it was tested on brain scans from the same people who provided the training scans. Training and testing on data from the same subjects can lead to overfitting, so the results may not carry over to new people. However, we should not shrug off this paper, as such publications attract researchers and are often followed by related papers with incremental improvements.
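One common way to check whether a decoder generalizes beyond the people it was trained on is to hold out an entire subject at test time. The sketch below shows such a leave-one-subject-out split in plain Python. The subject labels and the helper name are hypothetical, and this is not an evaluation the paper is reported to have run.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical labels: which subject each scan came from (two scans each).
subject_ids = ["subj1", "subj1", "subj2", "subj2",
               "subj3", "subj3", "subj4", "subj4"]

splits = list(leave_one_subject_out(subject_ids))
for held_out, train, test in splits:
    # No subject contributes scans to both sides of the split.
    train_subjects = {subject_ids[i] for i in train}
    test_subjects = {subject_ids[i] for i in test}
    assert not train_subjects & test_subjects
    print(held_out, "train:", train, "test:", test)
```

With a split like this, a high test score means the decoder worked on someone whose scans it had never seen, which is a stronger claim than doing well on new images from familiar subjects.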

Considering the improvements in the field of computer vision, this paper makes me wonder: will we be able to relive our dreams soon? Pretty cool and scary at the same time. Although it is quite intriguing, it raises ethical concerns about the invasion of privacy. There is also still a long way to go before we can actually re-create the subjective experience of a dream. The model is not yet practical for daily use, but we are getting closer to understanding how our brain functions. Such technology could also bring about tremendous advancements in the medical field, especially for people who have communication impairments.

Conclusion

If the proposed model is refined further, this could be the next breakthrough in the world of AI. But the benefits and risks must be weighed before the widespread implementation of any technology. I hope you enjoyed reading this article, and I would love to hear your thoughts about this amazing research paper.

Kanwal Mehreen is an aspiring software developer with a keen interest in data science and applications of AI in medicine. Kanwal was selected as the Google Generation Scholar 2022 for the APAC region. Kanwal loves to share technical knowledge by writing articles on trending topics, and is passionate about improving the representation of women in the tech industry.