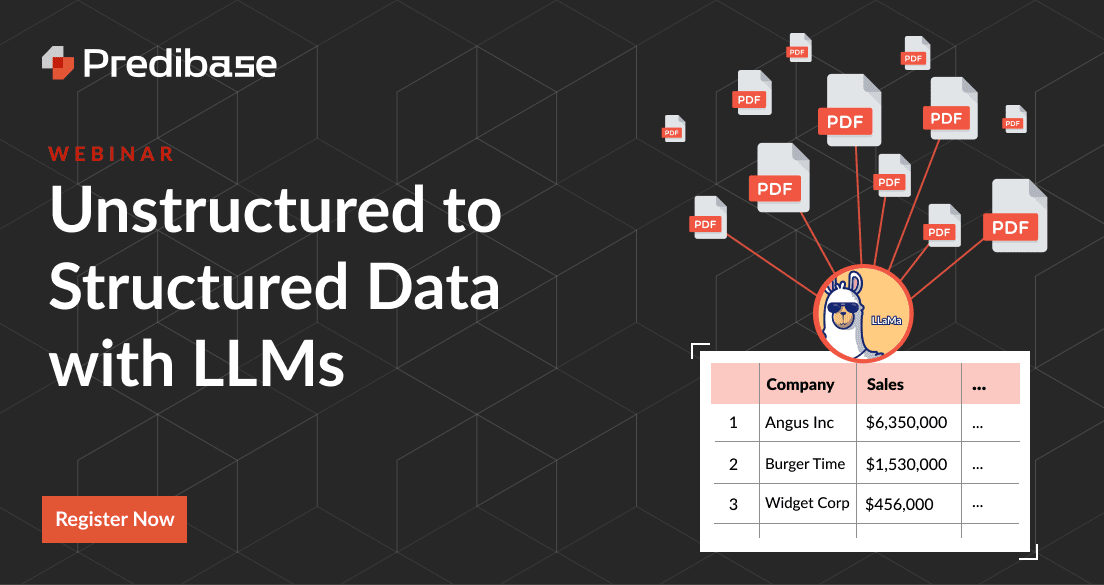

From Unstructured to Structured Data with LLMs

Learn how to use large language models to extract insights from documents for analytics and ML at scale. Join this webinar and live tutorial to learn how to get started.

Authors: Michael Ortega and Geoffrey Angus

Make sure to register for our upcoming webinar to learn how to use large language models to extract insights from unstructured documents.

Thanks to ChatGPT, chat interfaces are how most users have interacted with LLMs. While this is fast, intuitive, and fun for a wide range of generative use cases (e.g. ChatGPT write me a joke about how many engineers it takes to write a blog), there are fundamental limitations to this interface that keep them from going into production.

- Slow - chat interfaces are optimized to provide a low-latency experience. Such optimizations often come at the expense of throughput, making them unviable for large-scale analytics use cases.

- Imprecise - even after days of dedicated prompt iteration, LLMs are often prone to providing verbose responses to simple questions. While such responses are sometimes more human-intelligible in chat-like interactions, they are oftentimes more difficult to parse and consume in broader software ecosystems.

- Limited support for analytics- even when connected to your private data (via an embedding index or otherwise), most LLMs deployed for chat simply cannot ingest all of the context required for many classes of questions typically asked by data analysts.

The reality is that many of these LLM-powered search and Q&A systems are not optimized for large-scale production-grade analytics use cases.

The right approach: Generate structured insights from unstructured data with LLMs

Imagine you’re a portfolio manager with a large number of financial documents. You want to ask the following question, “Of these 10 prospective investments, provide the highest revenue achieved by each company between the years 2000 to 2023?” An LLM out-of-the-box, even with an index retrieval system connected to your private data, would struggle to answer this question due to the volume of context required.

Fortunately, there’s a better way. You can answer questions over your entire corpus faster by first using an LLM to convert your unstructured documents into structured tables via a single large batch job. Using this approach, the financial institution from our hypothetical above could generate structured data in a table from a large set of financial PDFs using a defined schema. Then, quickly produce key statistics on their portfolio in ways that a chat-based LLM would struggle.

Even further, you could build net-new tabular ML models on top of the derived structured data for downstream data science tasks (e.g. based on these 10 risk factors which company is most likely to default). This smaller, task-specific ML model using the derived structured data would perform better and cost less to run compared to a chat-based LLM.

Learn how to extract structured insights from your documents with LLMs

Want to learn how to put this approach into practice using state-of-the-art AI tools designed for developers? Join our upcoming webinar and live demo to learn how to:

- Define a schema of data to extract from a large corpus of PDFs

- Customize and use open-source LLMs to construct new tables with source citations

- Visualize and run predictive analytics on your extracted data

You’ll have a chance to ask your questions live during our Q&A.