Go from Engineer to ML Engineer with Declarative ML

Learn how to easily build any AI model and customize your own LLM in just a few lines of code with a declarative approach to machine learning.

Every company is becoming an AI company, and engineers are on the front lines of helping their organizations make this transition. To enhance their products, engineering teams are increasingly being asked to incorporate machine learning into their product roadmaps and monthly OKRs. This can be anything from personalized experiences and fraud detection systems to, most recently, natural language interfaces powered by large language models.

The AI dilemma for engineering teams

Despite the promise of ML and the growing list of roadmap items, most product engineering teams face a few key challenges when building AI applications:

- A lack of data science resources to help them rapidly develop custom ML models in-house.

- Low-level ML frameworks that are too complex to adopt quickly: writing hundreds of lines of TensorFlow code for a classification task is no small feat for someone new to machine learning.

- Distributed training pipelines that require deep infrastructure knowledge, so models can take months to train and deploy.

As a result, engineering teams remain roadblocked on their AI initiatives. Q1’s objective becomes Q2’s and ultimately ships in Q3.

Unblocking engineers with declarative ML

A new generation of declarative machine learning tools, first pioneered at Uber, Apple, and Meta, aims to change this dynamic by making AI accessible to engineering teams (and to anyone who is ML-curious, for that matter). Declarative ML systems simplify model building and customization with a config-driven approach rooted in engineering best practices, similar to the way that Kubernetes revolutionized managing infrastructure.

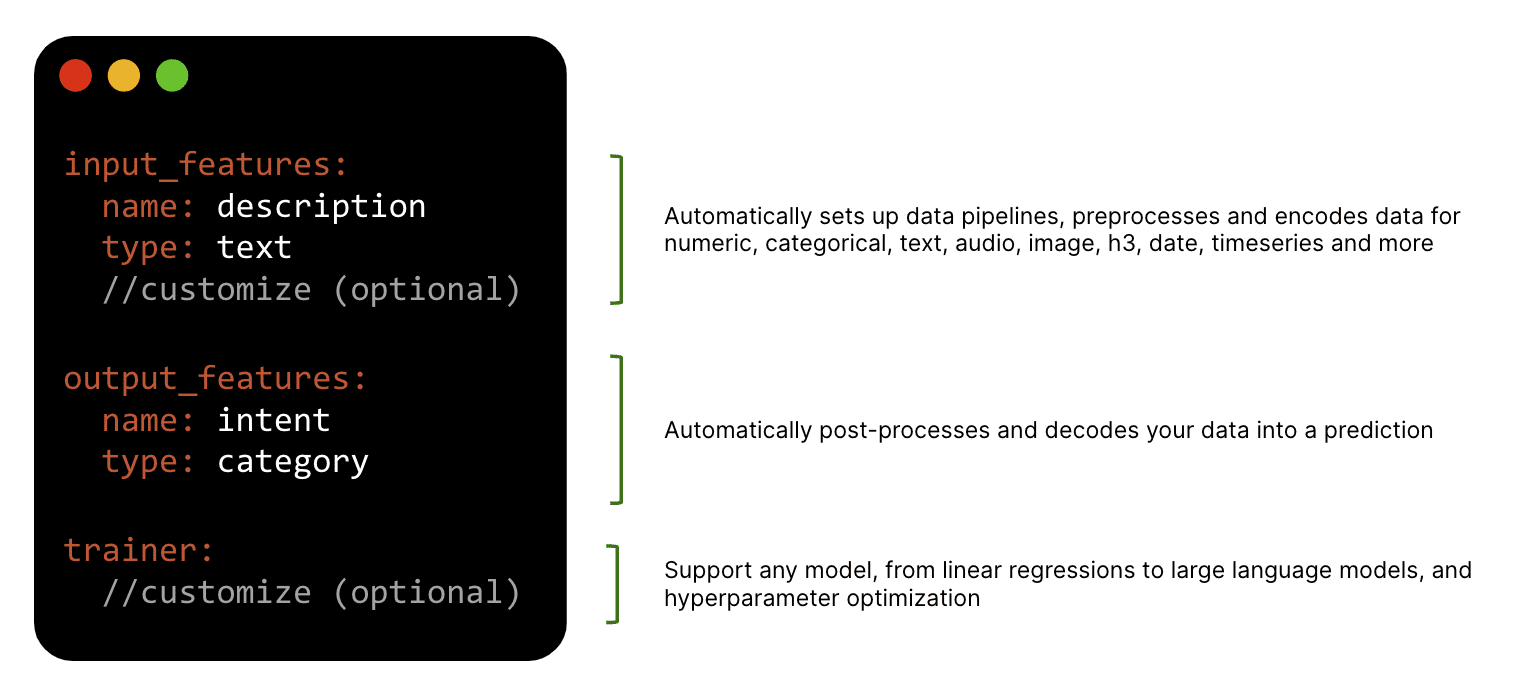

Instead of writing hundreds of lines of low-level ML code, you simply specify your model inputs (features) and outputs (the values you want to predict) in a YAML file, and the framework provides a recommended, easy-to-customize ML pipeline. With these capabilities, developers can build powerful production-grade AI systems for practical applications in minutes. Ludwig, originally developed at Uber, is the most popular open-source declarative ML framework, with over 9,000 stars on GitHub.
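To make the "inputs and outputs in a YAML file" idea concrete, here is a minimal sketch of what such a config could look like for a bot-detection task. The column names (`profile_description`, `follower_count`, `is_verified`, `is_bot`) and the small `render_yaml` helper are hypothetical and exist only for illustration; the feature type names follow recent Ludwig releases and may differ in older versions.

```python
# A declarative model definition: which columns go in, which column comes out.
# All column names below are hypothetical, chosen only for illustration.
config = {
    "input_features": [
        {"name": "profile_description", "type": "text"},
        {"name": "follower_count", "type": "number"},
        {"name": "is_verified", "type": "binary"},
    ],
    "output_features": [
        {"name": "is_bot", "type": "binary"},
    ],
}


def render_yaml(cfg):
    """Render this simple two-level config as YAML text.

    Hypothetical helper: it only handles the shape used above
    (section -> list of flat feature dicts), not YAML in general.
    """
    lines = []
    for section, features in cfg.items():
        lines.append(f"{section}:")
        for feature in features:
            for i, (key, value) in enumerate(feature.items()):
                # The first key of each feature starts a new list item.
                prefix = "  - " if i == 0 else "    "
                lines.append(f"{prefix}{key}: {value}")
    return "\n".join(lines)


yaml_text = render_yaml(config)
print(yaml_text)

# With Ludwig installed, the same dict could be passed to its Python API,
# for example (the dataset file name is hypothetical):
#   from ludwig.api import LudwigModel
#   model = LudwigModel(config)
#   model.train(dataset="bot_accounts.csv")
```

The rendered YAML comes to ten lines, which is the whole model definition; the framework is expected to fill in preprocessing, architecture, and training defaults that you can override later in the same file.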

Start building AI applications the easy way with Declarative ML

Join our upcoming webinar and live demo to learn how you can get started with declarative ML with open-source Ludwig and a free trial of Predibase. During this session you will learn:

- What declarative ML systems are, including open-source Ludwig from Uber

- How to build and customize ML models and LLMs for any use case in fewer than 15 lines of YAML

- How to rapidly train, iterate, and deploy a multimodal model for bot detection with Ludwig and Predibase, and get access to our free trial!