Machine Learning is Not Like Your Brain Part Seven: What Neurons are Good At

Thus far, this series has focused on things that Machine Learning does or needs which biological neurons simply can’t do. This article turns the tables and discusses a few things that neurons are particularly good at.

In my undergraduate days, telephone switching was transitioning from electromechanical relays to transistors, so there were a lot of cast-off telephone relays available. Some fellow Electrical Engineering students and I built a computer out of telephone relays. The relays we used had a switching delay of 12ms; that is, when you applied power to a relay, its contacts closed 12ms later. Interestingly, this is in the same timing range as neurons, which can fire at most about once every 4ms.

We also acquired a teletype machine that used a serial link running at 110 baud, or about 9ms per bit. This raised a question: how do you get 12ms relays to output a serial bit every 9ms? The answer sheds some light on how your brain works, how it differs from ML, and why 65% of your brain is devoted to muscular coordination while less than 20% is devoted to thinking.

The answer is that you can make almost any device (electronic or neural) run slower than its maximum speed. By selectively slowing relays, we could produce signals at any rate we chose, limited only by the noise level in the system. For example, you can have one relay (neuron) start a data bit while a slightly slower one ends it. The bit’s duration is then set by the difference between the two delays, not by how fast either relay can switch. But it takes a lot of relays or neurons.
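Here is a minimal Python sketch of the arithmetic. It assumes a single trigger at time zero and one relay per bit boundary, with each relay slowed to a chosen delay no shorter than the 12ms minimum. The names `edge_delays`, `BIT_PERIOD_MS`, and `MIN_DELAY_MS` are hypothetical and do not come from the original relay computer.

```python
BIT_PERIOD_MS = 1000 / 110   # 110 baud -> about 9.09 ms per bit
MIN_DELAY_MS = 12.0          # fastest a relay can close after being powered


def edge_delays(num_bits, bit_period=BIT_PERIOD_MS, min_delay=MIN_DELAY_MS):
    """Delay (ms after a common trigger) that each boundary relay is slowed to.

    Relay k marks the start of bit k; the final relay marks the end of the last bit.
    No relay is ever faster than min_delay, yet the edges are bit_period apart.
    """
    return [min_delay + k * bit_period for k in range(num_bits + 1)]


delays = edge_delays(8)
widths = [later - earlier for earlier, later in zip(delays, delays[1:])]
print([round(d, 2) for d in delays])
print([round(w, 2) for w in widths])
```

Every delay is 12ms or longer, but consecutive edges are only about 9.09ms apart, which is the 110-baud bit period. The sketch also shows the cost: framing eight bits already takes nine separately tuned relays.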

One can easily see the evolutionary advantage of a control system that can make your body move as quickly as possible, and our brains contain a massive cerebellum to do just that. So the reason so many neurons are dedicated to muscular coordination is that individually they aren’t fast enough for the job, and your brain needs a lot of them to compensate.

Here are some other things that neurons are good at:

- Acting as a frequency divider;

- Detecting which of two spikes arrives first, with great precision;

- Detecting which of two spiking neurons is spiking faster;

- Acting as a frequency sensor; and

- Acting as a short-term memory device.

Frequency Divider

If you connect a source neuron to a target through a synapse weight of 0.5, the target neuron will fire at half the rate of the source, because it needs two incoming spikes to reach its firing threshold of 1. In fact, it will divide by two for any weight from 0.5 up to (but not including) 1, so the precise synapse weight is not critical. With a weight of 0.2, it’s a divide-by-five circuit. Although neurons make great frequency dividers, they are not so good at frequency multiplication. A neuron that fires a burst of two spikes every time it fires is sort of a times-two multiplier, but there is no easy way to convert a signal spiking every 20ms into one spiking every 10ms.
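Here is a minimal Python sketch of the divider. It assumes a simple integrate-and-fire neuron with no leak, a threshold of 1, and a charge that resets to 0 after each firing. The function name `divider_outputs` is hypothetical.

```python
THRESHOLD = 1.0
EPS = 1e-9  # tolerance so that, e.g., five spikes of 0.2 count as reaching 1.0 despite float rounding


def divider_outputs(weight, num_input_spikes):
    """Return the indices of the input spikes on which the target neuron fires.

    Assumes integrate-and-fire with no leak, firing at charge >= 1, reset to 0 after firing.
    """
    charge = 0.0
    fired = []
    for i in range(num_input_spikes):
        charge += weight                 # each source spike adds the synapse weight
        if charge >= THRESHOLD - EPS:
            fired.append(i)
            charge = 0.0                 # reset after firing
    return fired


print(divider_outputs(0.5, 10))   # fires on every second input spike
print(divider_outputs(0.9, 10))   # same pattern: the exact weight is not critical
print(divider_outputs(0.2, 10))   # fires on every fifth input spike
```

Under these assumptions, a weight w divides by the smallest whole number n for which n times w reaches 1. That is why any weight from 0.5 up to 1 gives divide-by-two.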

First-arrival Detector

This is important for directional hearing. If a sound source is directly in front of you (or behind you), the sound arrives at your two ears simultaneously. If it is directly beside you, it arrives at the nearer ear about 0.5ms sooner than at the other ear. Studies show you can identify sound direction from arrival differences as small as 10 microseconds. How? Say a target neuron is connected to two sources, one with a weight of 1 and the other with a weight of -1. If the +1 spike arrives first, the target fires immediately. If the -1 spike arrives first, even by a small amount, the +1 spike only brings the charge back to 0, and the target does not fire. Now, small differences in axon length between the ear and each of many such detectors create minute, slightly different delays. The particular detectors that fire then reveal the arrival difference, and therefore the sound direction, quite precisely.
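Here is a minimal Python sketch of a first-arrival detector and a bank of them. The bank is one hypothetical arrangement for illustration, not a claim about the actual auditory circuitry. Detector k adds an extra axonal delay of k × 10 microseconds to the left-ear (+1) input, and the right-ear signal is the -1 input. Simultaneous arrivals are treated as inhibition-first. The function names and parameters are assumptions.

```python
def first_arrival(t_excite_us, t_inhibit_us):
    """Return True if the target fires, i.e. the +1 spike arrives strictly before the -1 spike."""
    # Sort by arrival time; on a tie the inhibitory (-1) spike is processed first.
    events = sorted([(t_excite_us, 1, +1.0), (t_inhibit_us, 0, -1.0)])
    charge = 0.0
    for _, _, weight in events:
        charge += weight
        if charge >= 1.0:  # firing threshold of 1
            return True
    return False


def itd_bank(t_left_us, t_right_us, step_us=10, max_delay_us=500):
    """Return the extra delays (in microseconds) of the detectors that fire.

    Each detector delays the left-ear spike by its own amount, so it fires only
    when the right-ear spike lags the left-ear spike by more than that delay.
    """
    delays = range(0, max_delay_us + 1, step_us)
    return [d for d in delays if first_arrival(t_left_us + d, t_right_us)]


# Sound slightly to the left: it reaches the right ear 35 microseconds later.
print(itd_bank(0, 35))  # [0, 10, 20, 30]
```

Four detectors fire here, which places the arrival difference between 30 and 40 microseconds. A mirrored bank with the roles of the two ears swapped would cover sounds from the right.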

Which is Faster

In general, optic neurons fire faster in brighter light. To detect a boundary between a lighter and a darker area, your neurons need to detect small differences in spiking frequency. With a circuit similar to the first-arrival detector, a pair of neurons can be arranged so that one spikes if one side of a boundary is brighter and the other spikes if the opposite side is brighter. It turns out that you can detect boundaries with much greater precision than you can sense absolute brightness or color intensity. This is the source of numerous optical illusions and of difficulties in computer vision.
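One way to sketch this in Python rests on a few assumptions: no leak, a threshold of 1, a reset to 0 on firing, and regularly spaced spikes. Each target neuron receives +0.5 from one source and -0.5 from the other, so its charge climbs only when its excitatory source out-spikes its inhibitory one. The weights, spike periods, and the name `faster_detector` are illustrative assumptions.

```python
def faster_detector(excite_period_ms, inhibit_period_ms, duration_ms=1000, weight=0.5):
    """Count how often a target fires when it gets +weight from one source and -weight from another.

    Assumes regularly spaced spikes, no leak, a threshold of 1, and reset to 0 after firing.
    """
    events = [(t, +weight) for t in range(0, duration_ms, excite_period_ms)]
    events += [(t, -weight) for t in range(0, duration_ms, inhibit_period_ms)]
    # Process in time order; on a tie the negative (inhibitory) input goes first.
    events.sort(key=lambda e: (e[0], e[1]))
    charge, fires = 0.0, 0
    for _, w in events:
        charge += w
        if charge >= 1.0:
            fires += 1
            charge = 0.0
    return fires


# Brighter light -> faster spiking -> shorter period (values are made up).
left_period, right_period = 10, 11             # left side slightly brighter
left_brighter = faster_detector(left_period, right_period)
right_brighter = faster_detector(right_period, left_period)
print(left_brighter > 0, right_brighter == 0)  # True True
```

Swapping the arguments gives the other neuron of the pair. Neither neuron fires when the two rates are equal, and even a small rate difference produces occasional spikes from the neuron on the brighter side.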

Frequency Sensor

I haven’t discussed this in detail, but the neuron model is more biologically accurate if we add charge leakage. When a synapse imparts a charge to a target neuron, the charge doesn’t stay there forever but leaks away. This effect can be used in a number of ways. If you have a source connected to a target with a weight of 0.9, it takes two incoming spikes to make the target fire, but only if the second spike arrives before the charge from the first has leaked below 0.1. In this way, such a neuron acts as a frequency sensor: it fires whenever the source is spiking at or above a specific frequency, which is governed by the leak rate and the synapse weight. A cluster of neurons driven by the same signal, each with a different weight or leak rate, can detect the frequency of that signal with considerable precision. However, it takes a separate neuron for each frequency level to be detected.
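Here is a minimal Python sketch of the two-spike case. It assumes the leak is exponential with a time constant `tau_ms` and that the threshold is 1. The article does not specify the leak law; exponential decay is simply a common modeling choice. The function names and the 10ms time constant are hypothetical.

```python
import math

THRESHOLD = 1.0


def pair_fires(interval_ms, weight=0.9, tau_ms=10.0):
    """True if two spikes interval_ms apart push the target to threshold."""
    residual = weight * math.exp(-interval_ms / tau_ms)  # what is left of the first spike
    return residual + weight >= THRESHOLD


def cutoff_hz(weight=0.9, tau_ms=10.0):
    """Lowest spike rate at which a pair of spikes still fires the target (requires weight < 1)."""
    # Solve weight * exp(-T / tau) = THRESHOLD - weight for the longest interval T.
    t_max_ms = tau_ms * math.log(weight / (THRESHOLD - weight))
    return 1000.0 / t_max_ms


# A bank of sensors with different weights, each tuned to a different frequency.
for w in (0.6, 0.7, 0.8, 0.9):
    print(f"weight {w}: fires at or above about {cutoff_hz(w):.0f} Hz")
```

This only considers two consecutive spikes. In a sustained spike train, charge left over from earlier spikes also accumulates, so the effective cutoff frequency for a long train is somewhat lower than this pair-based figure.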

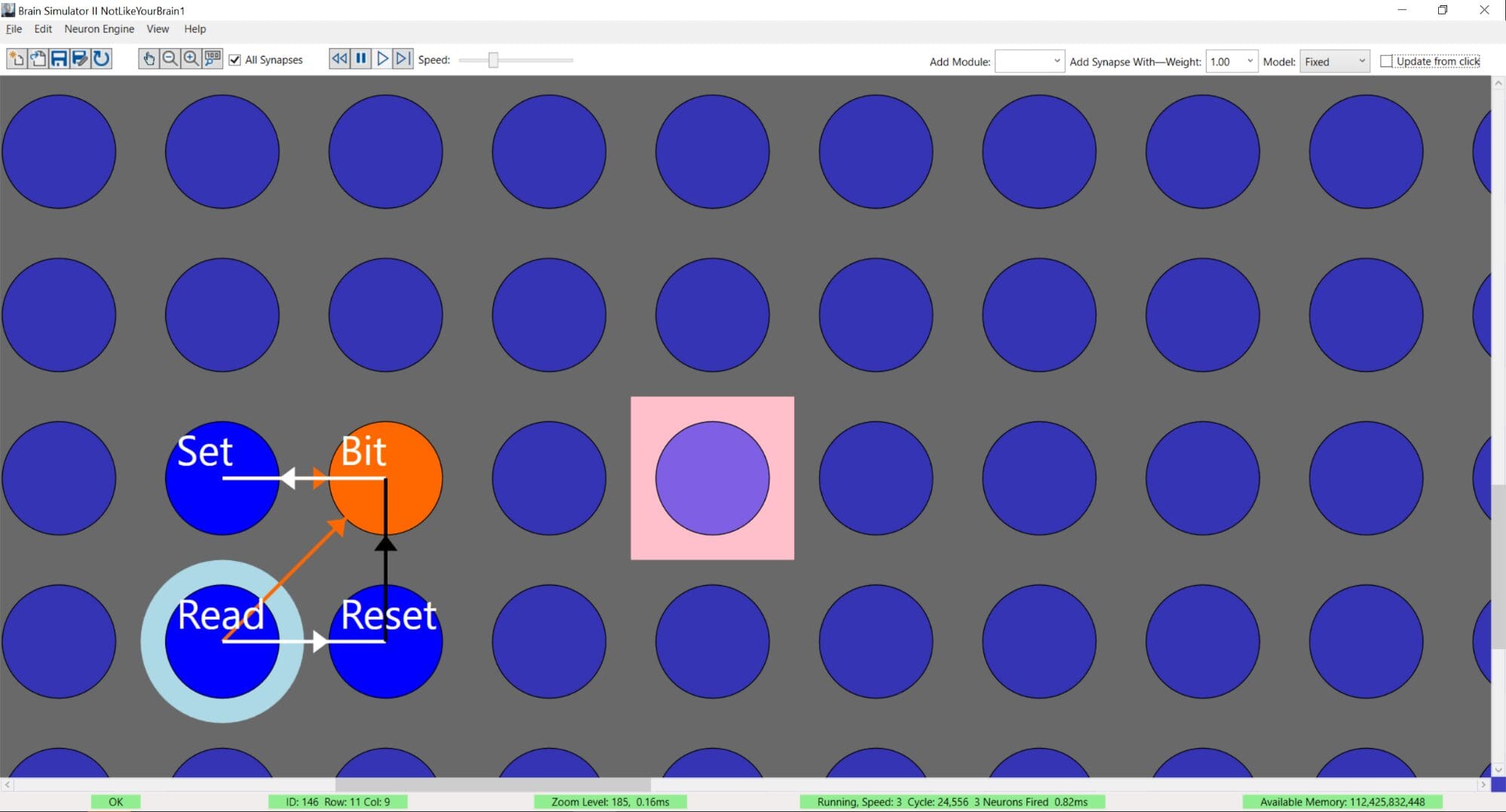

Short-term Memory

The effect described above can be harnessed as short-term memory to store a single bit of information in a neuron, but the bit must be read back before it leaks away. We’ll define the memory content of the neuron as 1 whenever its charge is 0.1 or greater. We write the memory bit with a weight of 0.9 and can read it back any time before it leaks below 0.1. A read therefore has to add 0.9, which is enough to fire the neuron only if at least 0.1 of the stored charge remains. The circuit shown in the figure refreshes the memory content whenever the bit is read, so the bit could potentially be stored indefinitely. This mechanism is very similar to dynamic RAM, which also must be refreshed periodically before its memory content leaks away.
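The read-and-refresh cycle can be sketched in Python as follows. This is a simplified, hypothetical model: the leak is assumed to be exponential, and the Set, Read, and Reset neurons are collapsed into methods of one `LeakyBit` class. It also assumes that Reset clears any leftover Read charge when Bit does not fire. The class and method names are illustrative, not taken from the circuit in the figure or from any particular simulator.

```python
import math

WEIGHT = 0.9      # weight used both to write (Set) and to read (Read)
THRESHOLD = 1.0


class LeakyBit:
    """One bit of short-term memory held as charge in a single leaky neuron."""

    def __init__(self, tau_ms=10.0):
        self.tau_ms = tau_ms
        self.charge = 0.0

    def leak(self, dt_ms):
        """Let dt_ms milliseconds pass; the charge decays exponentially."""
        self.charge *= math.exp(-dt_ms / self.tau_ms)

    def set(self):
        """Write a 1 (the Set neuron fires into Bit)."""
        self.charge += WEIGHT

    def read(self):
        """Return the stored bit and refresh it if it was a 1."""
        self.charge += WEIGHT                  # Read fires into Bit
        fired = self.charge >= THRESHOLD       # fires only if the stored charge was >= 0.1
        self.charge = 0.0                      # firing resets Bit; otherwise Reset clears it
        if fired:
            self.set()                         # Bit fires Set, which rewrites the 1
        return 1 if fired else 0


bit = LeakyBit()
bit.set()
bit.leak(15)
print(bit.read())   # read in time: 1, and the bit is refreshed
bit.leak(15)
print(bit.read())   # still 1 thanks to the refresh
bit.leak(30)
print(bit.read())   # waited too long: the charge leaked below 0.1, so 0
```

With a 10ms time constant, a written 1 survives for about 22ms (10 × ln 9) without a read. Periodic reads therefore play the same role as DRAM refresh cycles.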

This article has covered some of the things neurons can do that are largely outside the scope of machine learning. The next article will explore the neuron’s greatest advantage over machine learning: its tremendous efficiency.

A video demonstrating the examples in this article is here: https://youtu.be/gq9H6APDgRM

This neural circuit stores a single bit of information in a short-term memory that can leak away. It uses a neuron labeled “Bit” to store the data bit in its charge level. Firing the “Set” neuron places a charge in the Bit neuron, which can be cleared by the “Reset” neuron. When “Read” fires, Bit will fire or not depending on its charge level. If it fires, it also fires Set, which stores the value in Bit once again. Each bit needs its own Set and Bit neurons, while the Read and Reset neurons can be shared by any number of bits.

Charles Simon is a nationally recognized entrepreneur and software developer, and the CEO of FutureAI. Simon is the author of Will the Computers Revolt?: Preparing for the Future of Artificial Intelligence, and the developer of Brain Simulator II, an AGI research software platform.