Machine Learning Is Not Like Your Brain Part One: Neurons Are Slow, Slow, Slow

Artificial intelligence is not all that intelligent. While today’s AI can do some extraordinary things, the functionality underlying its accomplishments has very little to do with the way in which a human brain works to achieve the same tasks.

A biological brain understands that physical objects exist in a 3D environment which is subject to various physical attributes. It interprets everything in the context of everything else it has previously learned. In stark contrast, AI – and specifically machine learning (ML) – analyzes massive data sets looking for patterns and correlations without understanding any of the data it is processing. An ML system requiring thousands of tagged samples is fundamentally different from the mind of a child, which can learn from just a few experiences of untagged data. Even the recent “neuromorphic” chips rely on capabilities which are absent in biology.

This is just the tip of the iceberg. The differences between ML and the biological brain run much deeper, so for today’s AI to overcome its inherent limitations and evolve into its next phase – artificial general intelligence (AGI) – we should examine the differences between the brain, which already implements general intelligence, and its artificial counterparts.

With that in mind, this nine-part series will explain in progressively greater detail the capabilities and limitations of biological neurons and how these relate to ML. Doing so will shed light on what ultimately will be needed to replicate the contextual understanding of the human brain, enabling AI to attain true intelligence and understanding.

To understand why machine learning is not much like your brain, you need to know a little about how brains work and about their primary component, the neuron. Neurons are complex electro-chemical devices, and this brief description just scratches the surface. I will be as brief as possible, so bear with me, and then we’ll get back to ML.

The neuron has a cell body and a long axon (avg. length ~10mm) which connects via synapses to other neurons. Each neuron accumulates an internal charge (the “membrane potential”) which can change. If it reaches a threshold, the neuron will “fire.”

The neuron’s internal charge can be modified by neurotransmitters, which can increase or reduce that charge depending on the type of neurotransmitter. When an upstream neuron fires, each of its synapses onto the target neuron releases neurotransmitters in an amount we’ll call the “weight” of that synapse. As the weight changes, the synapse contributes more or less to the target neuron’s internal charge. When the neuron fires, it sends a neural spike down its axon and releases neurotransmitters through each synapse to each connected neuron. After firing, the voltage returns to its resting state and the neurotransmitters return to their initial locations to be reused.
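The accumulate-and-fire cycle described above can be sketched as a toy "integrate-and-fire" model. This is a minimal illustration, not a biological simulation: it assumes discrete input events, an arbitrary threshold of 1.0, no leakage of charge between inputs, and an instant reset. The class name, parameters, and weights are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNeuron:
    """Hypothetical integrate-and-fire neuron; all values are arbitrary units."""

    threshold: float = 1.0  # charge at which the neuron fires
    resting: float = 0.0    # charge restored after firing
    charge: float = 0.0     # current internal charge ("membrane potential")
    # synapse weight for each upstream neuron; negative weights reduce the charge
    weights: dict[str, float] = field(default_factory=dict)

    def receive(self, source: str) -> bool:
        """Upstream neuron `source` fired: add that synapse's weight.

        Returns True if this input pushes the charge to the threshold,
        in which case the neuron fires and resets to its resting charge.
        """
        self.charge += self.weights.get(source, 0.0)
        if self.charge >= self.threshold:
            self.charge = self.resting
            return True
        return False


neuron = ToyNeuron(weights={"a": 0.6, "b": 0.5, "c": -0.4})
fired = [neuron.receive(src) for src in ["a", "c", "b", "a"]]
print(fired)  # [False, False, False, True]
```

The synapse from "c" has a negative weight, so it pulls the charge back down and the neuron does not fire until the fourth input arrives.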

This is significantly different from the process used by the theoretical perceptron, which underlies most ML systems. (I’ll explore these differences in a subsequent article.) Perceptrons are great for some calculations, while neurons excel at others.

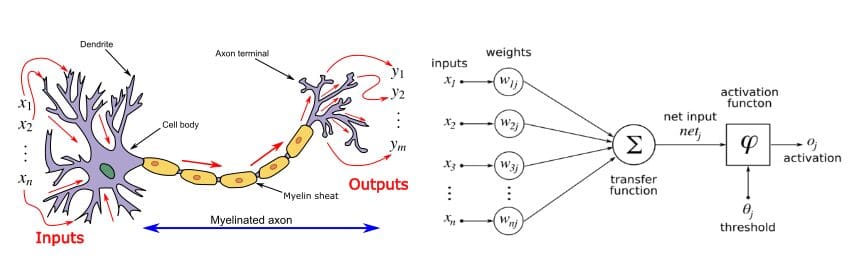

(Left) Diagram of a neuron showing “Inputs” and “Outputs,” which are synapses and may number in the many thousands. The myelin sheath is present only on long axons, which may be 100 mm long in the neocortex, so this drawing is not to scale by several orders of magnitude. Shorter axons in the brain are not myelinated, which makes them slower but allows them to be packed more densely; they are still often hundreds of times longer than the diameter of the cell body. Diagram by Egm4313.s12 at English Wikipedia / CC BY-S

(Right) Representation of a perceptron showing many weighted inputs and a single output, which connects to many other similar units. Because it is a mathematical construct, the perceptron’s performance is limited only by the hardware implementing it. Diagram by Wikimedia Commons, CC BY-SA 3.0.
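For contrast, here is a minimal sketch of a classic perceptron like the one in the right-hand diagram: a weighted sum of the inputs plus a bias, passed through a step function. The function name and the example weights are illustrative assumptions. Because the output is just a sum, it makes no difference in what order (or at what time) the inputs arrive.

```python
def perceptron(inputs: list[float], weights: list[float], bias: float = 0.0) -> int:
    """Classic perceptron: step function applied to the weighted sum plus bias."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


# Each (input, weight) pair stands for one incoming connection.
pairs = [(1.0, 0.5), (0.0, -1.0), (1.0, 0.25)]
forward = perceptron([x for x, _ in pairs], [w for _, w in pairs], bias=-0.5)
backward = perceptron(
    [x for x, _ in reversed(pairs)], [w for _, w in reversed(pairs)], bias=-0.5
)
print(forward, backward)  # 1 1: arrival order is irrelevant
```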

Neurons are slow, slow, slow, with a maximum firing rate of about 250 Hz (not kHz, MHz, or GHz; Hz!), or at most one spike every 4 ms. In comparison, transistors switch many millions of times faster. The neural spike itself lasts about 1 ms, and during the roughly 3 ms reset (refractory) period that follows, the neuron cannot fire again and incoming neural spikes are ignored. This will prove significant when we consider one principal difference between the neuron and the perceptron: the time of arrival of signals at the perceptron does not matter, while the timing of signals in the biological neuron is vitally important.
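The numbers in this paragraph fit together with a little arithmetic: a 1 ms spike plus a 3 ms reset gives a 4 ms minimum interval, hence the 250 Hz ceiling. The sketch below also shows why timing matters. Purely for illustration, it assumes that every incoming spike is strong enough to fire the neuron on its own, and that inputs arriving within 4 ms of the neuron's last spike are simply dropped; `spike_times` is a hypothetical helper, not a standard model.

```python
SPIKE_MS = 1.0  # approximate spike duration (from the text)
RESET_MS = 3.0  # approximate reset (refractory) period (from the text)

MIN_INTERVAL_MS = SPIKE_MS + RESET_MS   # 4 ms between spikes at best
MAX_RATE_HZ = 1000.0 / MIN_INTERVAL_MS  # 250 spikes per second


def spike_times(arrivals_ms: list[float], dead_time_ms: float = MIN_INTERVAL_MS) -> list[float]:
    """Return when a toy neuron fires, given input arrival times in ms.

    Simplifying assumption: any single input fires the neuron, unless it
    arrives while the neuron is still spiking or resetting, in which case
    it is ignored.
    """
    fired: list[float] = []
    for t in sorted(arrivals_ms):
        if not fired or t - fired[-1] >= dead_time_ms:
            fired.append(t)
    return fired


print(MAX_RATE_HZ)              # 250.0
print(spike_times([0, 5, 10]))  # [0, 5, 10]: three inputs, three spikes
print(spike_times([0, 1, 2]))   # [0]: the same three inputs, only one spike
```

A perceptron summing the same three inputs would give the same result for both lists, because it ignores when they arrive.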

The reason neurons are so slow is that they are not electronic but electro-chemical. They rely on transporting or reorienting ionic molecules, which is much slower than electronic signaling. Neural spikes travel along axons from one neuron to the next at a leisurely 1 to 2 m/s, slightly faster than walking speed, while electronic signals travel at nearly the speed of light.
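To put the speed difference in numbers, the sketch below computes how long a signal takes to cover the roughly 10 mm average axon length mentioned earlier. It uses the vacuum speed of light as a generous upper bound for electronic signals (real wires are somewhat slower), and the helper name is hypothetical.

```python
AXON_LENGTH_M = 0.010           # ~10 mm average axon length (from the text)
NEURAL_SPEEDS_M_S = (1.0, 2.0)  # spike conduction speed range (from the text)
LIGHT_SPEED_M_S = 3.0e8         # approximate upper bound for electronic signals


def travel_time_s(distance_m: float, speed_m_s: float) -> float:
    """Time in seconds for a signal to cover distance_m at speed_m_s."""
    return distance_m / speed_m_s


for speed in NEURAL_SPEEDS_M_S:
    ms = travel_time_s(AXON_LENGTH_M, speed) * 1e3
    print(f"neural spike at {speed} m/s: {ms:.1f} ms")

ps = travel_time_s(AXON_LENGTH_M, LIGHT_SPEED_M_S) * 1e12
print(f"electronic signal: about {ps:.0f} ps")
```

That is 5 to 10 ms for the neuron versus roughly 33 picoseconds electronically, a gap of about eight orders of magnitude.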

Getting back to ML, if we assume it takes 10 neural pulses to establish a firing frequency (more on this in the next article), then it takes 40 ms to represent a value. A network with 10 layers would need 400 ms, nearly half a second, for any signal to propagate from the first layer to the last. Since we can recognize objects in well under half a second, and some of the available stages must be spent on visual basics like boundary detection, the brain must be limited to fewer than 10 stages of processing.
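The latency estimate is simple multiplication; the sketch below just makes its assumptions explicit: 10 pulses per value (the article's working assumption) at the 4 ms minimum spike interval, with the signal passing through every layer in turn. The constant and function names are illustrative.

```python
MIN_INTERVAL_MS = 4    # fastest a neuron can spike: one spike every 4 ms
PULSES_PER_VALUE = 10  # assumed pulses needed to establish a firing frequency

MS_PER_VALUE = PULSES_PER_VALUE * MIN_INTERVAL_MS  # 40 ms per layer


def propagation_ms(layers: int) -> int:
    """Time for a value to pass through `layers` sequential layers."""
    return layers * MS_PER_VALUE


for layers in (1, 5, 10):
    print(f"{layers:2d} layers: {propagation_ms(layers)} ms")
```

At 10 layers this reaches 400 ms, which is where the "nearly half a second" figure comes from.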

The neuron’s slowness also makes it implausible that brains learn through many presentations of thousands of training samples. If a biological brain can process one image per second, the 60,000 training images of the MNIST dataset of handwritten digits would take 60,000 seconds, or 1,000 minutes: nearly 17 hours of dedicated concentration. How long would it take you to learn these symbols? Ten minutes? Obviously, something really different is going on here.
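The MNIST figure works out as follows, assuming one image per second with no breaks. A single pass over the training set is only a lower bound, since ML training typically makes many passes (epochs); the epoch counts in the loop are hypothetical.

```python
TRAINING_IMAGES = 60_000  # size of the MNIST training set
SECONDS_PER_IMAGE = 1.0   # assumed viewing rate (from the text)

total_s = TRAINING_IMAGES * SECONDS_PER_IMAGE
total_min = total_s / 60
total_h = total_min / 60

print(f"{total_min:,.0f} minutes = {total_h:.1f} hours for one pass")

for epochs in (1, 10):  # hypothetical numbers of passes over the data
    print(f"{epochs:2d} pass(es): {epochs * total_h:,.1f} hours")
```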

Charles Simon is a nationally recognized entrepreneur and software developer, and the CEO of FutureAI. Simon is the author of Will the Computers Revolt?: Preparing for the Future of Artificial Intelligence, and the developer of Brain Simulator II, an AGI research software platform.