Learn About Large Language Models

An introduction to Large Language Models: what they are, how they work, and where they are used.

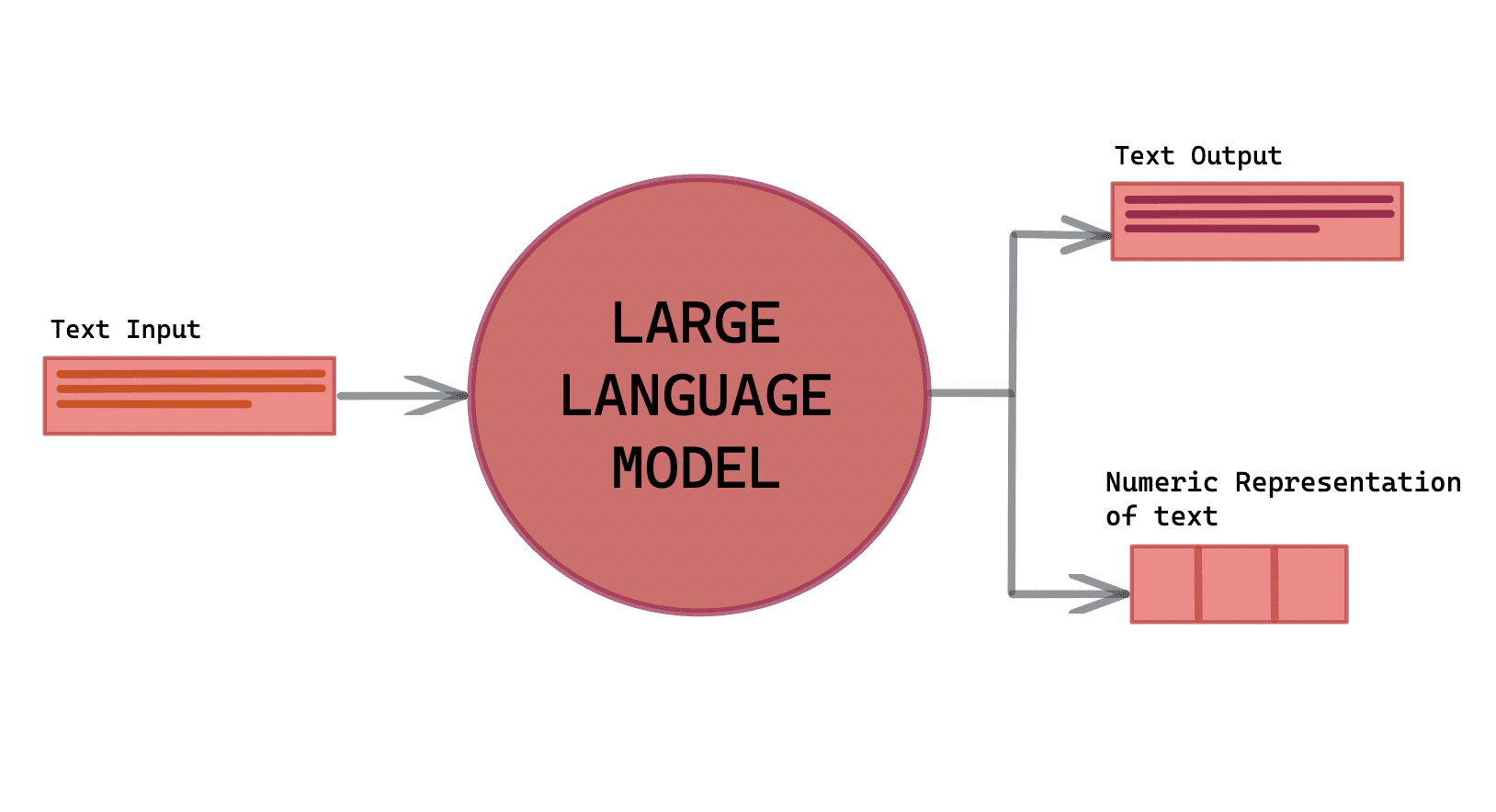

Image by Author

With the announcement of ChatGPT and Google Bard, more and more people are speaking about Large Language Models. It’s the new wave, and everybody wants to know more about it.

Language is an essential element of our everyday lives. It’s how we have grown to learn about our world, succeed in our careers, and shape our future. It is found in the news, the web, laws, messaging services, and more. It is how we connect and communicate with people.

With the rapid evolution of technology, more and more companies are finding new ways to develop a computer's ability to learn and deal with language. This follows recent breakthroughs in language-processing technologies within artificial intelligence (AI).

These language-processing techniques are allowing companies to build intelligent systems that have a better understanding of language. They are built on large pre-trained transformer language models, also known as large language models, which have a broader grasp of language and of what they are being asked to do with a piece of text.

What are Large Language Models?

AI applications such as ChatGPT and Google Bard can summarise articles, help you write stories or comics, and engage in long, human-like conversations. They can do all of this because of the large language models embedded in them.

A Large Language Model (LLM) is a deep-learning model that can read, recognise, summarise, translate, predict, and generate text. By learning to predict the next words and construct sentences, LLMs pick up how humans talk and write, which allows them to hold conversations, just like humans!

Large language models are a type of transformer model, and they are currently among the most successful applications of transformers.

Transformer Models

Transformer models are neural networks that learn context and track the relationships in sequential data, for example, words in a sentence.

Transformer models use mathematical techniques known as attention and self-attention to detect how the elements of a sequence relate to one another. Attention is when a transformer model attends to different parts of another sequence, whereas self-attention is when a transformer model attends to different parts of the same input sequence.
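To make the difference between the two concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration only: the 2-dimensional "embeddings" are made up, the function names are hypothetical, and real transformer models additionally apply learned projection matrices to produce the queries, keys, and values, which are left out here for brevity.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, weights), where weights[i][j] is how strongly
    query i attends to key j.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        # Each output is a weighted average of the value vectors.
        out = [sum(w_j * v[i] for w_j, v in zip(w, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Hypothetical toy embeddings, invented purely for illustration.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three tokens
other = [[0.5, 0.5], [1.0, -1.0]]                # two tokens of another sequence

# Self-attention: queries, keys, and values all come from the same sequence.
self_out, self_w = attention(sentence, sentence, sentence)

# Attention over another sequence: queries come from `sentence`,
# keys and values come from `other`.
cross_out, cross_w = attention(sentence, other, other)
```

In the self-attention call every token gets one weight per token in its own sequence, while in the second call every token gets one weight per token in the other sequence. In both cases the weights for a token sum to 1.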

Transformer models can translate text and speech in real-time, which helps make meetings accessible to diverse groups, including hearing-impaired attendees.

How do Large Language Models work?

To create a well-performing LLM, you need large amounts of data, as the ‘Large’ in its name suggests.

Take ChatGPT, for example: the model needs enough data to understand what you are asking. It is trained on vast amounts of text, much of it gathered from the internet over a long period, which allows it to produce accurate and effective outputs for the user.

The algorithm uses unsupervised learning (more precisely, self-supervised learning), where the model learns from unlabeled data and infers the hidden structures in it to produce accurate and reliable outputs. Using this type of machine learning technique, the LLM learns about words, the construction of sentences, and the relationships between words. This helps the LLM to learn more about language, context, grammar, and tone.

The LLM can then use this knowledge to predict and generate content.
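The idea of learning from unlabeled text and then predicting the next word can be sketched with a toy bigram model. This is not how an LLM is implemented: a real LLM uses a transformer network with a very large number of learned parameters, whereas this sketch only counts which word follows which. The corpus and the function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in raw, unlabeled text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, max_words=5):
    """Greedily extend `start` one predicted word at a time."""
    out = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# No labels anywhere: the "supervision" is simply the next word in the text.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(predict_next(model, "sat"))           # on
print(generate(model, "dog", max_words=3))  # dog sat on the
```

Note that nobody labelled the corpus. The training signal comes from the text itself, which is what makes it practical to train on very large amounts of data.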

Other techniques can be applied to adapt LLMs to specific tasks. For example, a model can be fine-tuned for educational purposes around the history of the UK: the already-trained model is fed a smaller set of data focused on UK history and trained further for that specific application.
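As a loose analogy for fine-tuning, the sketch below first trains a toy word-counting model on general text and then continues training the same model on a small, domain-specific text. Real fine-tuning updates the weights of a neural network with gradient descent, so treat this purely as an illustration of the "train broadly, then specialise" idea. The `BigramModel` class and both text snippets are hypothetical.

```python
from collections import Counter, defaultdict

class BigramModel:
    """Toy next-word model that counts which word follows which."""

    def __init__(self):
        self.counts = defaultdict(Counter)

    def train(self, text):
        # Calling train() again adds to the existing counts,
        # so earlier knowledge is kept and new data shifts it.
        words = text.lower().split()
        for current, following in zip(words, words[1:]):
            self.counts[current][following] += 1

    def predict_next(self, word):
        followers = self.counts.get(word.lower())
        return followers.most_common(1)[0][0] if followers else None

# Step 1: "pre-train" on general text (made up for illustration).
general_text = "the king of spain . the king of france . the king of spain ."
model = BigramModel()
model.train(general_text)
before = model.predict_next("of")

# Step 2: "fine-tune" on a small UK-history text (also made up).
uk_history_text = (
    "the king of england sealed magna carta . "
    "the king of england ruled . "
    "the king of england died ."
)
model.train(uk_history_text)
after = model.predict_next("of")

print(before, "->", after)  # spain -> england
```

After the second training step the model's predictions lean towards the domain data, while what it learned from the general text is still there.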

Where are Large Language Models used?

We have recently seen the success of LLMs with AI applications, such as GPT. More and more people will want to find ways to unlock new opportunities and be innovative with LLMs in areas such as code generation, healthcare, robotics, and more.

Some current applications of LLMs include:

- Customer service: For example, South Korea’s most popular AI voice assistant, GiGA Genie

- Finance: For example, Applica with their use of virtual assistants

- Search engines: For example, Google Bard

- Life Science Research: For example, NVIDIA BioNeMo

Wrapping it up

I know a lot of you have been hearing about, seeing, and probably using ChatGPT or Google Bard. It’s good to know how these AI applications can perform these tasks. I hope this was a good introduction to Large Language Models (LLMs).

If you would like to know more about ChatGPT, have a read of these:

- ChatGPT: Everything You Need to Know

- ChatGPT for Beginners

- ChatGPT as a Python Programming Assistant

- The ChatGPT Cheat Sheet

- Top Free Resources To Learn ChatGPT

Nisha Arya is a Data Scientist, Freelance Technical Writer and Community Manager at KDnuggets. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is benefiting, or can benefit, the longevity of human life. She is a keen learner, seeking to broaden her tech knowledge and writing skills, whilst helping guide others.