Hyperparameter Optimization: 10 Top Python Libraries

Become familiar with some of the most popular Python libraries available for hyperparameter optimization.

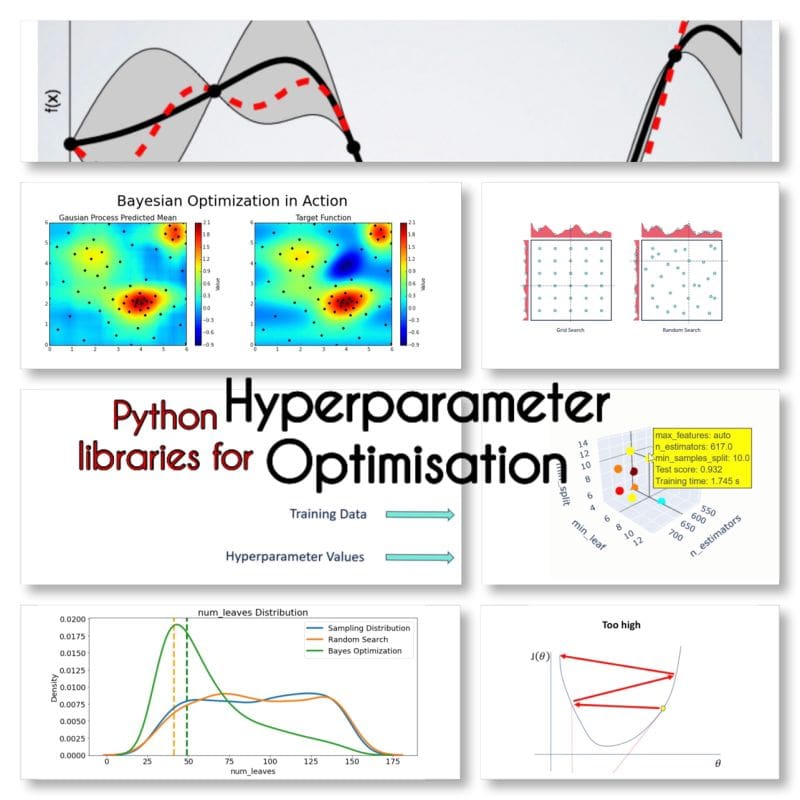

Image by Author

Hyperparameter optimization plays a crucial role in determining the performance of a machine learning model. Hyperparameters are one of the 3 components of training.

3 Components of Models

Training data

Training data is what the algorithm (think: instructions to build a model) leverages to identify patterns.

Parameters

The algorithm 'learns' by adjusting parameters, such as weights, based on the training data to make accurate predictions. These parameters are saved as part of the final model.

Hyperparameters

Hyperparameters are variables that regulate the training process. They are set before training starts and stay constant during it.

Different Types of Search

Grid Search

Training models with every possible combination of the provided hyperparameter values, a time-consuming process.
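To make the idea concrete, here is a minimal sketch of a grid search in plain Python. The `validation_score` function and the two hyperparameter names are made up for illustration; in practice that function would train a model and return its validation score.

```python
from itertools import product


def validation_score(learning_rate, max_depth):
    """Hypothetical stand-in for 'train a model and return its validation score'."""
    return -(learning_rate - 0.1) ** 2 - 0.01 * (max_depth - 5) ** 2


# Every value listed here is combined with every value of the other hyperparameter.
param_grid = {
    "learning_rate": [0.01, 0.1, 1.0],
    "max_depth": [3, 5, 7],
}


def grid_search(grid, score_fn):
    """Evaluate every combination in the grid and keep the best one."""
    names = list(grid)
    best_params, best_score, n_trials = None, float("-inf"), 0
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        n_trials += 1
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, n_trials


best_params, best_score, n_trials = grid_search(param_grid, validation_score)
print(best_params, n_trials)
```

With 3 values for each of 2 hyperparameters, this already means 9 training runs, and the count multiplies with every hyperparameter you add.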

Random Search

Training models with hyperparameter values randomly sampled from the defined distributions, which is often a more efficient search than trying every combination.
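A random search can be sketched in a few lines as well. The distributions, the hyperparameter names, and the `validation_score` function below are assumptions chosen for illustration, not part of any particular library.

```python
import math
import random


def validation_score(learning_rate, dropout):
    """Hypothetical stand-in for 'train a model and return its validation score'."""
    return -(math.log10(learning_rate) + 2) ** 2 - (dropout - 0.2) ** 2


def random_search(n_trials, seed=0):
    """Sample hyperparameters from fixed distributions and keep the best trial."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {
            # Log-uniform between 1e-4 and 1, a common choice for learning rates.
            "learning_rate": 10 ** rng.uniform(-4, 0),
            # Plain uniform between 0 and 0.5.
            "dropout": rng.uniform(0.0, 0.5),
        }
        score = validation_score(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(n_trials=50)
print(best_params, best_score)
```

The number of trials is fixed up front, so the budget no longer grows with the number of hyperparameters.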

Halving Grid Search

Training models with all values, and then repeatedly "halving" the search space by only considering the parameter values that performed the best in the previous round.
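The halving loop can be sketched as follows. This is a simplified illustration, not the scikit-learn implementation: the candidate learning rates and the `validation_score` function are made up, and the budget (think: training samples or epochs) simply doubles each round.

```python
import math


def validation_score(learning_rate, budget):
    """Hypothetical score: closer to 0 is better, and larger budgets score better."""
    return -((math.log10(learning_rate) + 1) ** 2) * (1 + 1 / budget)


def successive_halving(candidates, score_fn, min_budget=1):
    """Keep the better half of the candidates each round while doubling the budget."""
    survivors = list(candidates)
    budget = min_budget
    history = []  # (number of candidates, budget) per round
    while len(survivors) > 1:
        history.append((len(survivors), budget))
        ranked = sorted(survivors, key=lambda c: score_fn(c, budget), reverse=True)
        survivors = ranked[: max(1, len(survivors) // 2)]
        budget *= 2
    return survivors[0], history


candidates = [0.0001, 0.001, 0.01, 0.03, 0.1, 0.3, 1.0, 3.0]
winner, history = successive_halving(candidates, validation_score)
print(winner, history)
```

Weak candidates are dropped while training is still cheap, so the expensive rounds are only spent on the most promising values.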

Bayesian Search

Starting with an initial guess of values, then using the performance of the model to update the values. It's like how a detective might start with a list of suspects, then use new information to narrow down the list.
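One common way to do this is to fit a Gaussian process to the scores seen so far and pick the next value where the predicted score plus its uncertainty is highest. The sketch below assumes NumPy, a single hyperparameter in the range 0 to 1, and a made-up `validation_score` function. The kernel, its length scale, and the `kappa` exploration weight are illustrative choices, and real libraries are considerably more refined.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.3):
    """Squared-exponential kernel between two 1-D arrays of points."""
    diff = a[:, None] - b[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def gp_posterior(x_seen, y_seen, x_cand, noise=1e-4):
    """Posterior mean and standard deviation of a zero-mean GP at x_cand."""
    k_ss = rbf_kernel(x_seen, x_seen) + noise * np.eye(len(x_seen))
    k_sc = rbf_kernel(x_seen, x_cand)
    solved = np.linalg.solve(k_ss, k_sc)
    mean = solved.T @ y_seen
    var = 1.0 - np.sum(k_sc * solved, axis=0)
    return mean, np.sqrt(np.clip(var, 0.0, None))


def validation_score(x):
    """Hypothetical stand-in for 'train and score a model'; the best value is x = 0.7."""
    return -((x - 0.7) ** 2)


def bayesian_search(n_iter=15, kappa=2.0):
    """Repeatedly evaluate the candidate with the highest upper confidence bound."""
    candidates = np.linspace(0.0, 1.0, 201)
    x_seen = np.array([0.0, 1.0])  # initial guesses
    y_seen = validation_score(x_seen)
    for _ in range(n_iter):
        mean, std = gp_posterior(x_seen, y_seen, candidates)
        x_next = candidates[np.argmax(mean + kappa * std)]
        x_seen = np.append(x_seen, x_next)
        y_seen = np.append(y_seen, validation_score(x_next))
    best = int(np.argmax(y_seen))
    return float(x_seen[best]), float(y_seen[best])


best_x, best_y = bayesian_search()
print(best_x, best_y)
```

Each new score updates the model's belief about where good values lie, which is the "new information" in the detective analogy.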

Python Libraries for Hyperparameter Optimization

I found these 10 Python libraries for hyperparameter optimization.

Optuna

You can tune estimators from almost any ML or DL package or framework, including Sklearn, PyTorch, TensorFlow, Keras, XGBoost, LightGBM, and CatBoost. It also comes with a real-time web dashboard called optuna-dashboard.

Hyperopt

Bayesian optimization with support for conditional dimensions.

Scikit-learn

Different searches, such as GridSearchCV or HalvingGridSearchCV.
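Both searches share the same interface, as this short sketch shows. The iris dataset, the SVC estimator, and the grid values are arbitrary choices for illustration. Note that HalvingGridSearchCV is still marked experimental in scikit-learn and needs the explicit enabling import.

```python
from sklearn.datasets import load_iris
from sklearn.experimental import enable_halving_search_cv  # noqa: F401
from sklearn.model_selection import GridSearchCV, HalvingGridSearchCV
from sklearn.svm import SVC

X, y = load_iris(return_X_y=True)
param_grid = {"C": [0.1, 1, 10], "kernel": ["linear", "rbf"]}

# Exhaustive search: every combination is cross-validated on the full data.
grid = GridSearchCV(SVC(), param_grid, cv=3)
grid.fit(X, y)

# Halving search: all combinations start on a small sample, the best move on.
halving = HalvingGridSearchCV(SVC(), param_grid, cv=3, factor=2, random_state=0)
halving.fit(X, y)

print(grid.best_params_, grid.best_score_)
print(halving.best_params_, halving.best_score_)
```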

Auto-Sklearn

An AutoML toolkit and a drop-in replacement for a scikit-learn estimator.

Hyperactive

Very easy to learn but extremely versatile, providing intelligent optimization.

Optunity

Provides distinct approaches, such as a plethora of score functions.

HyperparameterHunter

Automatically saves and learns from Experiments for persistent optimization.

MLJAR

AutoML that creates Markdown reports from the ML pipeline.

KerasTuner

Comes with Bayesian Optimization, Hyperband, and Random Search algorithms built in.

Talos

Hyperparameter Optimization for TensorFlow, Keras and PyTorch.

Have I forgotten any libraries?

Maryam Miradi is an AI and Data Science Lead with a PhD in Machine Learning and Deep learning, specialised in NLP and Computer Vision. She has 15+ years of experience creating successful AI solutions with a track record of delivering over 40 successful projects. She has worked for 12 different organisations in a variety of industries, including Detecting Financial Crime, Energy, Banking, Retail, E-commerce, and Government.