Hydra Configs for Deep Learning Experiments

This brief guide illustrates how to use the Hydra library for ML experiments, especially in the case of deep learning-related tasks, and why you need this tool to make your workflow easier.

Image by Editor

Introduction

Hydra library provides a flexible and efficient configuration management system that enables creating hierarchical configurations dynamically by composition and overriding through config files and the command line.

This powerful tool offers a simple and efficient way to manage and organize various configurations in one place, constructing complex multilevel configs structures without any limits which can be essential in machine learning projects.

All of that enables you to switch easily between any parameters and try different configurations without manually updating the code. By defining the parameters in a flexible and modular way, it becomes much easier to iterate over new ML models and compare different approaches faster, which can save time and resources, and, besides, make the development process more efficient.

Hydra can serve as the central component in deep learning pipelines (you can find an example of my training pipeline template here), which would orchestrate all internal modules.

How to Run a Deep Learning Pipeline with Hydra

A decorator hydra.main is supposed to be used to load Hydra config during launching of the pipeline. Here a config is being parsed by Hydra grammar parser, merged, composed and passed to the pipeline main function.

Also, It could be accomplished by Hydra Compose API, using initialize, initialize_config_module or initialize_config_dir, instead of hydra.main decorator:

Instantiating Objects with Hydra

An object can be instantiated from a config if it includes a _target_ key with class or function name, for example torchmetrics.Accuracy. Also, the config might contain other parameters which should be passed to object instantiation. Hydra provides hydra.utils.instantiate() function (and its alias hydra.utils.call()) for instantiating objects and calling class or function. It is preferable to use instantiate for creating objects and call for invoking functions.

Based on this config you could simply instantiate:

- loss via

loss = hydra.utils.instantiate(config.loss) - metric via

metric = hydra.utils.instantiate(config.metric)

Besides, it supports multiple strategies for converting config parameters: none, partial, object and all. The _convert_ attribute is intended to manage this option. You can find more details here.

Moreover, it offers a partial instantiation, which can be very useful, for example for function instantiation or recursively object instantiation.

Command Line Operations

Hydra provides the command line operations for config parameters overriding:

- Existing config value can be replaced by passing a different value.

- A new config value that does not exist in the config can be added by using the

+operator. - If the config already has a value, it can be overridden by using the

++operator. If the value does not exist in the config, it will be added.

Additional Out-of-the-box Features

It also brings support for various exciting features such as:

- Structured configs with extended list of available primitive types, nested structure, containers with primitives, default values, bottom-up values overriding and so much more. This offers a wide range of possibilities for organizing configs in many different forms.

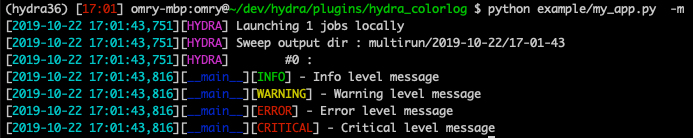

- Coloured logs for

hydra/job_loggingandhydra/hydra_logging.

- Hyperparameters sweepers without any additional code: Optuna, Nevergrad, Ax.

- Custom plugins

Custom Config Resolvers

Hydra provides an opportunity to expand its functionality even more by adding custom resolvers via OmegaConf library. It enables adding custom executable expressions to the config. OmegaConf.register_new_resolver() function is used to register such resolvers.

By default, OmegaConf supports the following resolvers:

oc.env: returns the value of an environment variableoc.create: may be used for dynamic generation of config nodesoc.deprecated: it can be utilised to mark a config node as deprecatedoc.decode: decodes a string using a given codecoc.select: provides a default value to use in case the primary interpolation key is not found or selects keys that are otherwise illegal interpolation keys or handles missing values as welloc.dict.{keys,value}: analogous to the dict.keys and dict.values methods in plain Python dictionaries

See more details here.

Therefore, it is a powerful tool that enables adding any custom resolvers. For instance, it can be tedious and time-consuming to repeatedly write loss or metric names in the configs in multiple places, like early_stopping config, model_checkpoint config, config containing scheduler params, or somewhere else. It could be solved by adding a custom resolver to replace __loss__ and __metric__ names by the actual loss or metric name, which is passed to the config and instantiated by Hydra.

Note: You need to register custom resolvers before hydra.main or Compose API calls. Otherwise, Hydra config parser just doesn’t apply it.

In my template for rapid deep learning experiments, it is implemented as a decorator utils.register_custom_resolvers, which allows to register all custom resolvers in one place. It supports main Hydra’s command line flags, which are required to override config path, name or dir. By default, it allows you to replace __loss__ to loss.__class__.__name__ and __metric__ to metric.__class__.__name__ via such syntax: ${replace:"__metric__/valid"}. Use quotes for defining internal value in ${replace:"..."} to avoid grammar problems with Hydra config parser.

See more details about utils.register_custom_resolvers here. You can easily expand it for any other purposes.

Simplify Complex Modules Configuring

This powerful tool significantly simplifies the development and configuration of complex pipelines, for instance:

- Instantiate modules with any custom logic under the hood, eg:

- Instantiate the whole module with all inside submodules recursively by Hydra.

- Main module and some parts of the inside submodules can be initialized by Hydra and the rest of them manually.

- Manually initialize the main module and all submodules.

- Package dynamic structures, like data augmentations to config, where you can easily set up any

transformsclasses, parameters or appropriate order for applying. See example of possible implementationTransformsWrapperbased on albumentations library for such purpose, which can be easily reworked for any over augmentations package. Config example:

- Create complex multilevel configs structure. Here is an outline of how configs can be organized:

- And many other things... It has very few limitations, so you can use it for any custom logic you want to implement in your project.

Final Thoughts

Hydra paves the way to a scalable configuration management system which gives you a power to expand the efficiency of your workflow and maintain the flexibility to make changes to your configurations as needed. The ability to easily switch between different configurations, simply recompose it and try out different approaches without manually updating the code is a key advantage of using such a system for solving machine learning problems, especially in deep learning related tasks, when additional flexibility is so critical.

Alexander Gorodnitskiy is a machine learning engineer with a deep knowledge of ML, Computer Vision and Analytics. I have 3+ years of experience in creating and improving products using machine learning.