Explainable AI: 10 Python Libraries for Demystifying Your Model’s Decisions

Become familiar with some of the most popular Python libraries available for AI explainability.

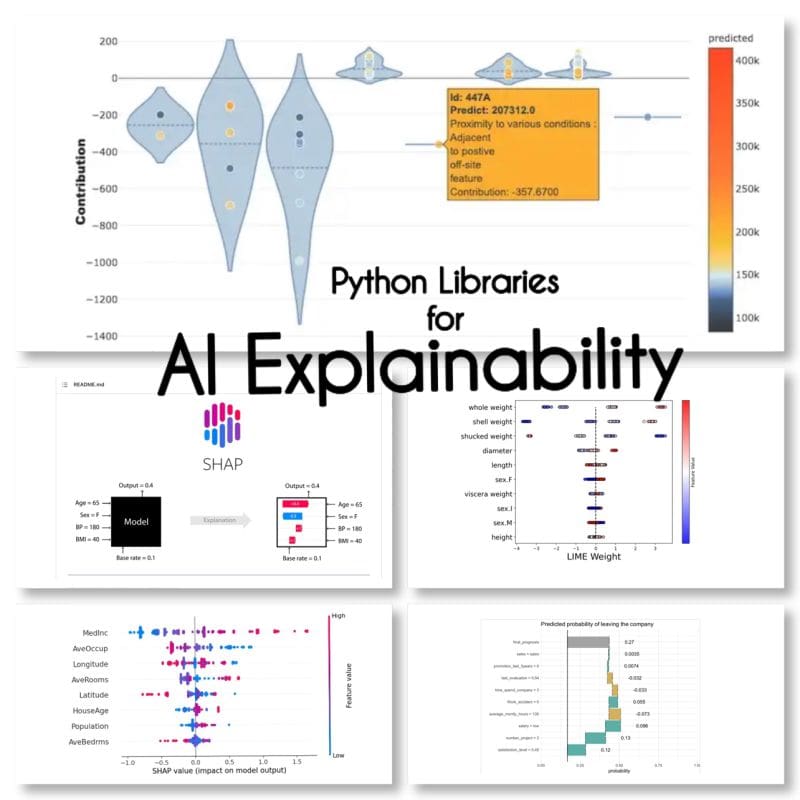

Image by Author

Explainable AI (XAI) is artificial intelligence that allows humans to understand the results and decision-making processes of a model or system.

The 3 Stages of Explanation

Pre-modeling Explainability

Explainable AI starts with explainable data and clear, interpretable feature engineering.

Modeling Explainability

When choosing a model for a particular problem, it is generally best to use the most interpretable model that still achieves good predictive results.

Post-modeling Explainability

These explanations are produced after the model has been trained. They include perturbation techniques, which analyze how changing a single input variable affects the model's output, and attribution methods such as SHAP values.
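To make the perturbation idea concrete, here is a minimal sketch in plain Python. The `toy_model` function is a hypothetical stand-in for a trained model, and `perturbation_effects` (also hypothetical, not from any library) shifts one feature at a time by a fixed `delta` while holding the others constant, then records how much the output moves.

```python
from typing import Callable, Dict, List


def toy_model(x: List[float]) -> float:
    """Hypothetical stand-in for a trained model (includes an interaction term)."""
    return 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[0] * x[2]


def perturbation_effects(
    model: Callable[[List[float]], float], x: List[float], delta: float = 1.0
) -> Dict[int, float]:
    """Shift one feature at a time by `delta` and record the change in output."""
    base = model(x)
    effects: Dict[int, float] = {}
    for i in range(len(x)):
        perturbed = list(x)  # copy so all other features stay fixed
        perturbed[i] += delta
        effects[i] = model(perturbed) - base
    return effects


if __name__ == "__main__":
    for feature, effect in perturbation_effects(toy_model, [1.0, 2.0, 3.0]).items():
        print(f"feature {feature}: output changes by {effect:+.2f}")
```

Because `toy_model` contains an interaction between features 0 and 2, the effect of shifting one feature depends on the values of the others. This is one reason attribution methods such as SHAP average over many combinations of features instead of looking at a single perturbation.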

Python Libraries for AI Explainability

Here are the Python libraries for AI explainability I found:

SHAP (SHapley Additive exPlanations)

SHAP is model-agnostic and works by breaking down a prediction into the contribution of each feature, attributing a score (its Shapley value) to each feature.
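SHAP builds on Shapley values from cooperative game theory. The sketch below computes exact Shapley values by brute force for a tiny hypothetical model; it is not the `shap` package's API. It assumes that a feature "absent" from a coalition is replaced by its value in a single `baseline` point, which is a simplification (SHAP's explainers can instead average over a background dataset).

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def toy_model(x: Sequence[float]) -> float:
    """Hypothetical model with an interaction between features 0 and 2."""
    return 2.0 * x[0] - 1.0 * x[1] + 0.5 * x[0] * x[2]


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley values by enumerating every coalition of features."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition keep their real values; the rest use the baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1, never containing feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


if __name__ == "__main__":
    print(shapley_values(toy_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

The resulting values satisfy the efficiency property: they add up to `model(x) - model(baseline)`, and the interaction term is split equally between features 0 and 2. Enumerating every coalition is exponential in the number of features, which is why SHAP relies on approximations and model-specific algorithms in practice.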

LIME (Local Interpretable Model-agnostic Explanations)

LIME is another model-agnostic method. It works by approximating the behavior of the model locally around a specific prediction with a simple, interpretable surrogate model.
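Here is a from-scratch sketch of the LIME idea in plain Python, not the `lime` package's API: sample points around the instance, weight them by proximity, and fit a weighted linear model whose coefficients serve as the local explanation. The helper names, the Gaussian sampling `scale`, and the exponential `kernel_width` are assumptions chosen for illustration; the real library also works in an interpretable feature representation and fits a ridge regression by default.

```python
import math
import random
from typing import Callable, List, Sequence


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a small linear system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def lime_style_weights(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    num_samples: int = 500,
    scale: float = 0.5,
    kernel_width: float = 1.0,
    seed: int = 0,
) -> List[float]:
    """Fit a proximity-weighted linear surrogate around x; return one weight per feature."""
    rng = random.Random(seed)
    d = len(x)
    # Accumulate the weighted normal equations (X^T W X) w = X^T W y, with an intercept column.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturbed sample near x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        weight = math.exp(-dist2 / kernel_width**2)  # closer samples count more
        row = [1.0] + z
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[1:]  # drop the intercept


if __name__ == "__main__":
    # Non-linear model: the local weights should be roughly its gradient at x, i.e. (2, 1).
    print(lime_style_weights(lambda z: z[0] ** 2 + z[1], [1.0, 2.0]))
```

On a model that is already linear, the surrogate recovers the true coefficients; on a non-linear model, the weights only describe the neighbourhood of `x`, so explanations for different instances can differ.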

ELI5

ELI5 is a library for debugging and explaining classifiers. It provides feature importance scores, as well as "reason codes", and supports scikit-learn, Keras, XGBoost, LightGBM, and CatBoost.
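ELI5 also includes a permutation-importance utility for scikit-learn estimators. The idea is simple enough to sketch in plain Python: shuffle one feature column, re-score the model, and treat the increase in error as that feature's importance. The function names, the toy model, and the choice of mean squared error as the metric are assumptions for this illustration, not ELI5's API.

```python
import random
from typing import Callable, List, Sequence


def mean_squared_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    model: Callable[[List[float]], float],
    X: List[List[float]],
    y: List[float],
    n_repeats: int = 5,
    seed: int = 0,
) -> List[float]:
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_squared_error(y, [model(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [row[:j] + [v] + row[j + 1 :] for row, v in zip(X, column)]
            score = mean_squared_error(y, [model(row) for row in X_perm])
            increases.append(score - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def toy_model(row: List[float]) -> float:
    """Hypothetical model that only uses feature 0."""
    return 2.0 * row[0]


if __name__ == "__main__":
    # Hypothetical data: the target depends only on feature 0.
    X = [[float(i), float(i % 3)] for i in range(20)]
    y = [2.0 * row[0] for row in X]
    print(permutation_importance(toy_model, X, y))
```

Feature 1 gets an importance of exactly zero here because the model never reads it, while shuffling feature 0 sharply increases the error.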

Shapash

Shapash is a Python library that aims to make machine learning interpretable and understandable to everyone. It provides several types of visualizations with explicit labels.

Anchors

Anchors is a method for generating human-interpretable rules that can be used to explain the predictions of a machine learning model.
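The sketch below illustrates the two quantities behind an anchor: precision (how often the prediction stays the same when the anchored features are held fixed and everything else is resampled) and coverage (how much of the data the rule applies to). The toy classifier, the background data, and the greedy search are hypothetical simplifications; the original Anchors algorithm uses a bandit-based search with statistical guarantees on precision.

```python
import random
from itertools import product
from typing import Callable, List, Sequence, Set

Row = Sequence[str]


def toy_classifier(row: Row) -> int:
    """Hypothetical model: approve (1) if income is high,
    or if the applicant is employed and has no prior default."""
    income, employed, prior_default = row
    if income == "high":
        return 1
    return 1 if employed == "yes" and prior_default == "no" else 0


def anchor_precision(model: Callable[[Row], int], x: Row, anchor: Set[int],
                     background: List[List[str]], n_samples: int = 1000, seed: int = 0) -> float:
    """Share of perturbed samples (anchored features fixed to x) predicted like x."""
    rng = random.Random(seed)
    target = model(x)
    hits = 0
    for _ in range(n_samples):
        z = list(rng.choice(background))  # resample every feature from background data
        for j in anchor:
            z[j] = x[j]  # then hold the anchored features at x's values
        hits += model(z) == target
    return hits / n_samples


def anchor_coverage(x: Row, anchor: Set[int], background: List[List[str]]) -> float:
    """Share of background rows on which the anchor rule holds."""
    return sum(all(row[j] == x[j] for j in anchor) for row in background) / len(background)


def greedy_anchor(model: Callable[[Row], int], x: Row, background: List[List[str]],
                  threshold: float = 0.95) -> Set[int]:
    """Add the feature that most increases precision until the threshold is met."""
    anchor: Set[int] = set()
    while len(anchor) < len(x) and anchor_precision(model, x, anchor, background) < threshold:
        candidates = [j for j in range(len(x)) if j not in anchor]
        best = max(candidates, key=lambda j: anchor_precision(model, x, anchor | {j}, background))
        anchor.add(best)
    return anchor


if __name__ == "__main__":
    background = [list(r) for r in product(["low", "high"], ["yes", "no"], ["yes", "no"])]
    x = ["high", "no", "yes"]
    anchor = greedy_anchor(toy_classifier, x, background)
    print(anchor, anchor_precision(toy_classifier, x, anchor, background),
          anchor_coverage(x, anchor, background))
```

For this instance the search settles on the single rule "income is high": whatever values the other features take, the prediction stays the same, and the rule covers half of the background data.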

XAI (eXplainable AI)

XAI is a library for explaining and visualizing the predictions of machine learning models including feature importance scores.

BreakDown

BreakDown is a tool that can be used to explain the predictions of linear models. It works by decomposing the model's output into the contribution of each input feature.
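Here is a minimal sketch of the Break Down decomposition in plain Python (the function, model, and data are hypothetical, not the BreakDown package's API). It starts from the average prediction over a dataset, then fixes the instance's features one at a time in a chosen `order`, attributing each change in the average prediction to the feature just fixed.

```python
from typing import Callable, List, Sequence, Set, Tuple


def linear_model(x: Sequence[float]) -> float:
    """Hypothetical linear model: 1 + 2*x0 - 3*x1."""
    return 1.0 + 2.0 * x[0] - 3.0 * x[1]


def break_down(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    data: List[List[float]],
    order: Sequence[int],
) -> Tuple[float, List[Tuple[int, float]]]:
    """Return the average prediction and each feature's contribution, fixed in `order`."""

    def mean_prediction(fixed: Set[int]) -> float:
        # Average model output over the data with the `fixed` features set to x's values.
        preds = [model([x[j] if j in fixed else row[j] for j in range(len(x))]) for row in data]
        return sum(preds) / len(preds)

    fixed_features: Set[int] = set()
    intercept = previous = mean_prediction(fixed_features)
    contributions = []
    for j in order:
        fixed_features.add(j)
        current = mean_prediction(fixed_features)
        contributions.append((j, current - previous))
        previous = current
    return intercept, contributions


if __name__ == "__main__":
    data = [[0.0, 0.0], [2.0, 1.0], [4.0, 2.0]]
    x = [3.0, 3.0]
    intercept, contributions = break_down(linear_model, x, data, order=[0, 1])
    print("average prediction:", intercept)
    for j, c in contributions:
        print(f"feature {j}: {c:+.2f}")
    print("prediction:", linear_model(x))
```

The average prediction plus the contributions always adds up to the model's output for `x`. For a linear model each contribution equals `w_i * (x_i - mean_i)` regardless of the order; for models with interactions, the contributions can depend on the order in which features are fixed.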

interpret-text

interpret-text is a library for explaining the predictions of natural language processing models.
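A common model-agnostic way to explain a text prediction is occlusion: remove one token at a time and measure how much the score changes. The sketch below applies it to a hypothetical bag-of-words scorer with made-up word weights; it illustrates the general technique and is not interpret-text's API.

```python
from typing import Dict, List, Tuple

# Hypothetical word weights standing in for a trained text classifier.
WEIGHTS: Dict[str, float] = {"great": 2.0, "boring": -1.5, "not": -0.5, "plot": 0.1}


def sentiment_score(tokens: List[str]) -> float:
    """Additive bag-of-words score; unknown words contribute 0."""
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def occlusion_importance(tokens: List[str]) -> List[Tuple[str, float]]:
    """Score drop when each token is removed, sorted by absolute effect."""
    full = sentiment_score(tokens)
    effects = []
    for i, token in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1 :]  # drop exactly one token
        effects.append((token, full - sentiment_score(reduced)))
    return sorted(effects, key=lambda pair: abs(pair[1]), reverse=True)


if __name__ == "__main__":
    for token, effect in occlusion_importance("the plot was great but boring".split()):
        print(f"{token:>8}: {effect:+.2f}")
```

For this additive scorer, occlusion simply recovers each word's weight; with real NLP models, the effect of removing a word also depends on its context.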

iml (Interpretable Machine Learning)

iml currently contains the interface and IO code from the SHAP project, and it may also do the same for the LIME project in the future.

aix360 (AI Explainability 360)

aix360 includes a comprehensive set of algorithms that cover different dimensions of explanations, along with proxy explainability metrics.

OmniXAI

OmniXAI (short for Omni eXplainable AI) addresses several problems that arise when interpreting judgments produced by machine learning models in practice.

Have I forgotten any libraries?


Maryam Miradi is an AI and Data Science Lead with a PhD in Machine Learning and Deep Learning, specialised in NLP and Computer Vision. She has 15+ years of experience creating AI solutions and a track record of delivering over 40 successful projects. She has worked for 12 different organisations in a variety of industries, including financial crime detection, energy, banking, retail, e-commerce, and government.