Data Science for Internet of Things (IoT): Ten Differences From Traditional Data Science


Connected devices (the Internet of Things) generate more than 2.5 quintillion bytes of data daily. All this data will significantly impact business processes, and Data Science for IoT will take an increasingly central role. Here we outline 10 main differences between Data Science for IoT and traditional Data Science.

Introduction

In the last two decades, more than six billion devices have come online. All those connected "things" (collectively called the Internet of Things) generate more than 2.5 quintillion bytes of data daily. That's enough to fill 57.5 billion 32 GB iPads per day (source: Gartner). All this data is bound to significantly impact many business processes over the next few years. Thus, IoT Analytics (Data Science for IoT) is expected to drive the business models for IoT. According to Forbes, strong analytics skills are likely to lead to 3x more success with the Internet of Things. We cover many of these ideas in the Data Science for IoT course.

Data Science for IoT has similarities with traditional Data Science but also some significant differences. Here are 10 differences between the two.

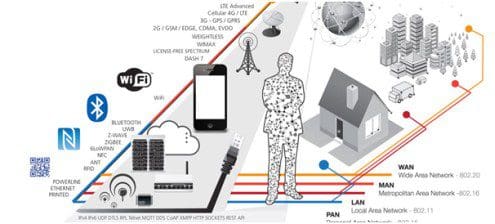

Image source: Postscapes.

- Working with the Hardware and the radio layers

- Edge processing

- Specific analytics models used in IoT verticals

- Deep learning for IoT

- Pre-processing for IoT

- The role of Sensor fusion in IoT

- Real Time processing and IoT

- Privacy, Insurance, and Blockchain for IoT

- AI: Machines teaching each other (cloud robotics)

- IoT and AI layer for the Enterprise

1) Working with the Hardware and the radio layers

This may sound obvious, but it's easy to underestimate. IoT involves working with a range of devices and also a variety of radio technologies. It is a rapidly shifting ecosystem with new technologies like LoRa, LTE-M and Sigfox. The deployment of 5G will make a big difference because we will have both local area and wide area connectivity. Each of the verticals (we track Smart homes, Retail, Healthcare, Smart cities, Energy, Transportation, Manufacturing and Wearables) also has a specific set of IoT devices and radio technologies. For example, for wearables you see Bluetooth 4.0 in use, but for Industrial IoT you are likely to see cellular technologies that guarantee Quality of Service, as in the GE Predix alliance with Verizon.

2) Edge processing

In traditional Data Science, Big Data usually resides in the Cloud. Not so for IoT! Much of the data is processed at or near the devices that generate it, which vendors like Cisco and Intel call Edge Computing. I have covered the impact of Edge analytics and IoT in detail in a previous post: The evolution of IoT Edge analytics.

3) Specific analytics models used in IoT verticals

IoT needs an emphasis on different models, and these models also depend on the IoT vertical. In traditional Data Science, we use a variety of algorithms (see Top Algorithms Used by Data Scientists). For IoT, time series models are often used: ARIMA, Holt-Winters and moving averages. The difference lies in the volume of data and in more sophisticated real-time implementations of the same models, for example (pdf) ARIIMA: Real IoT implementation of a machine learning architecture for reducing energy consumption. The use of models varies across IoT verticals. For example, in Manufacturing, predictive maintenance, anomaly detection, forecasting and missing event interpolation are common. In Telecoms, traditional models like churn modelling, cross-sell, upsell and customer lifetime value could include IoT as an input.
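To make the time series idea concrete, here is a minimal sketch of the simplest model mentioned above, a moving average, used both for a one-step forecast and for naive anomaly detection. The function names, the window size, the threshold and the temperature readings are all assumptions made for illustration; a production system would more likely use a library implementation of ARIMA or Holt-Winters.

```python
from collections import deque
from statistics import mean, pstdev


def moving_average_forecast(history, window):
    """Forecast the next value as the mean of the last `window` readings."""
    if len(history) < window:
        raise ValueError("need at least `window` readings")
    return mean(history[-window:])


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the trailing window mean
    by more than `threshold` standard deviations of that window."""
    recent = deque(maxlen=window)  # trailing window of earlier readings
    anomalies = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = pstdev(recent)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                anomalies.append(i)
        recent.append(value)
    return anomalies


# Hypothetical temperature readings (deg C) with one spike at index 7.
temps = [20.1, 20.3, 20.2, 20.4, 20.3, 20.2, 20.4, 35.0, 20.3, 20.2]
print(flag_anomalies(temps))                          # indices of suspicious readings
print(moving_average_forecast(temps[:7], window=5))   # forecast before the spike
```

Note that a flagged reading still enters the trailing window, so it inflates the spread for the next few readings; a more careful version might exclude flagged values from the window.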

4) Deep learning for IoT

If you consider cameras as sensors, there are many applications of Deep Learning algorithms such as CNNs for security applications, e.g. from hertasecurity. Reinforcement learning also has applications for IoT, as discussed in a post by Brandon Rohrer: Reinforcement Learning and Internet of Things.

5) Pre-processing for IoT

IoT datasets need a different form of pre-processing. Sibanjan Das and I referred to it in Deep learning - IoT and H2O:

Deep learning algorithms play an important role in IoT analytics. Data from machines is sparse and/or has a temporal element in it. Even when we trust data from a specific device, devices may behave differently at different conditions. Hence, capturing all scenarios for data pre-processing/training stage of an algorithm is difficult. Monitoring sensor data continuously is also cumbersome and expensive. Deep learning algorithms can help to mitigate these risks. Deep Learning algorithms learn on their own allowing the developer to concentrate on better things without worrying about training them.

6) The role of Sensor fusion in IoT

Sensor fusion involves combining data from disparate sensors and sources such that the resulting information has less uncertainty than would be possible if these sources were used individually (adapted from Wikipedia). The term 'uncertainty reduction' in this case can mean more accurate, more complete, or more dependable, or refer to the result of an emerging view based on the combined information. Sensor fusion has always played a key role in applications like Aerospace:

In aerospace applications, accelerometers and gyroscopes are often coupled into an Inertial Measurement Unit (IMU), which measures orientation based on a number of sensor inputs, known as Degrees of Freedom (DOF). Inertial Navigation Systems (INS) for spacecraft and aircraft can cost thousands of dollars due to strict accuracy and drift tolerances as well as high reliability.

Increasingly, however, we are seeing sensor fusion in self-driving cars and drones, where inputs from multiple sensors can be combined to infer more about an event.
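The claim that fused information carries less uncertainty than any single source can be illustrated with inverse-variance weighting, one of the simplest fusion rules. This is only a sketch: it assumes the sensors are unbiased, their errors are independent, and their variances are known. The `fuse` function and the barometer and GPS readings are hypothetical.

```python
def fuse(estimates):
    """Fuse independent (value, variance) estimates by inverse-variance weighting.

    Returns (fused_value, fused_variance).
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    # A more precise sensor (smaller variance) gets a larger weight.
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused_value = sum(w * val for w, (val, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total
    return fused_value, fused_variance


# Hypothetical altitude readings in metres: (value, variance).
barometer = (102.0, 4.0)
gps = (98.0, 1.0)
value, variance = fuse([barometer, gps])
print(value, variance)
```

Under these assumptions the fused variance is always smaller than the smallest individual variance, which is the 'uncertainty reduction' described above. Real systems such as an IMU typically use a Kalman filter, which applies the same idea over time.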

7) Real Time processing and IoT

IoT involves both fast and big data. Hence, real-time applications provide a natural synergy with IoT. Many IoT applications, such as fleet management, smart grid and Twitter stream processing, have unique analytics requirements based on both fast and large data streaming. These include:

- Real time tagging: As unstructured data flows from various sources, the only way to extract signal from noise is to classify the data as it arrives. This could involve working with schema-on-the-fly concepts.

- Real time aggregation: Any time you aggregate and compute data along a sliding time window, you are doing real time aggregation. For example, find a user behaviour logging pattern in the last 5 seconds and compare it to the last 5 years to detect deviation.

- Real time temporal correlation: For example, identifying emerging events based on location and time, or real-time event association from large-scale streaming social media data (above adapted from logtrust).
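The sliding-window aggregation described in the list above can be sketched as follows. The class and function names, the 5-second window and the 50% tolerance are assumptions chosen for illustration; a real deployment would normally rely on a stream-processing framework rather than hand-rolled code.

```python
from collections import deque


class SlidingWindowMean:
    """Mean of the values seen in the last `window_seconds` seconds."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self._events = deque()  # (timestamp, value), oldest first
        self._total = 0.0

    def add(self, timestamp, value):
        """Record an event; timestamps must be non-decreasing."""
        self._events.append((timestamp, value))
        self._total += value
        self._evict(timestamp)

    def _evict(self, now):
        # Keep only events inside the half-open window (now - window, now].
        while self._events and self._events[0][0] <= now - self.window_seconds:
            _, old = self._events.popleft()
            self._total -= old

    def mean(self):
        return self._total / len(self._events) if self._events else None


def deviates(recent_mean, baseline_mean, tolerance=0.5):
    """True if the recent mean differs from the baseline by more than
    `tolerance`, expressed as a fraction of the baseline."""
    return abs(recent_mean - baseline_mean) > tolerance * abs(baseline_mean)


# Hypothetical event stream: (seconds, requests per second).
window = SlidingWindowMean(window_seconds=5)
for ts, value in [(0, 10), (1, 12), (2, 11), (6, 30), (7, 32)]:
    window.add(ts, value)

long_term_baseline = 11.0  # assumed to come from historical data
print(window.mean(), deviates(window.mean(), long_term_baseline))
```

Keeping a running total means each event is added once and removed once, so the cost per event stays constant regardless of the window length.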

8) Privacy, Insurance and Blockchain for IoT

I once sat through a meeting in the EU where the idea of 'silence of the chips' was proposed. The evocative title is modelled on the movie 'The Silence of the Lambs'. The idea is: when you enter a new environment, you have the right to know about every sensor that is monitoring you and to selectively switch it on or off. This may sound extreme, but it does show the Boolean (on or off) thinking that dominates much of the privacy discussion today. However, future IoT discussions about privacy are likely to be much more nuanced, especially when Privacy, Insurance and Blockchain are considered together.

IoT is already seen as a significant opportunity by insurers, according to AT Kearney. Organizations like Lloyds of London are also looking to handle large-scale systemic risk arising from the introduction of new sensor-driven technology in urban areas, for example drones. Introducing Blockchain to IoT creates other possibilities. As IBM says:

Applying the blockchain concept to the world of [Internet of Things] offers fascinating possibilities. Right from the time a product completes final assembly, it can be registered by the manufacturer into a universal blockchain representing its beginning of life. Once sold, a dealer or end customer can register it to a regional blockchain (a community, city or state).
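The registration idea in the quote above can be illustrated with a toy hash-linked ledger. This sketch shows only the tamper-evidence property of a hash chain; it has no network, consensus or signatures, so it is not a blockchain in the full sense and is not IBM's design. The `Ledger` class, its fields and the product identifier are hypothetical.

```python
import hashlib
import json


def _digest(record):
    """SHA-256 of a record's canonical JSON form."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class Ledger:
    """Append-only list of entries, each linked to the previous one by hash."""

    def __init__(self):
        self.entries = []

    def register(self, product_id, event, owner):
        """Append a lifecycle event for a product."""
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"product_id": product_id, "event": event,
                "owner": owner, "prev_hash": prev_hash}
        self.entries.append({**body, "hash": _digest(body)})

    def is_valid(self):
        """Recompute every hash and check each link to its predecessor."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


ledger = Ledger()
ledger.register("pump-001", "assembled", "manufacturer")  # beginning of life
ledger.register("pump-001", "sold", "dealer")
print(ledger.is_valid())                     # True: chain is intact
ledger.entries[0]["owner"] = "someone-else"  # tamper with history
print(ledger.is_valid())                     # False: stored hash no longer matches
```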

9) AI: Machines teaching each other (cloud robotics)

We alluded to Deep Learning for IoT previously, where we said that Deep Learning algorithms play an important role in IoT analytics because machine data is sparse and/or has a temporal element to it. Devices may behave differently under different conditions. Hence, capturing all scenarios for the data pre-processing/training stage of an algorithm is difficult. Deep Learning algorithms can help to mitigate these risks by enabling algorithms to learn on their own. This concept of machines learning on their own can be extended to machines teaching other machines. This idea is not so far-fetched.

Consider Fanuc, the world's largest maker of industrial robots. A Fanuc robot teaches itself to perform a task overnight by observation and through reinforcement learning. After eight hours or so it gets to 90 percent accuracy or above, which is almost the same as if an expert were to program it. The process can be accelerated if several robots work in parallel and then share what they have learned. This form of distributed learning is called cloud robotics.
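One simple way to picture robots sharing what they have learned is to merge their per-action reward estimates, weighting each robot's estimate by how many trials it is based on. This is a sketch under assumptions and not a description of Fanuc's system: the `merge_estimates` function, the action names and the numbers are all hypothetical.

```python
def merge_estimates(robots):
    """Combine per-robot action-value estimates into one shared table.

    Each robot is a dict: action -> (mean_reward, trial_count).
    The merged mean is the trial-count-weighted average across robots.
    """
    totals = {}
    for table in robots:
        for action, (mean_reward, count) in table.items():
            reward_sum, n = totals.get(action, (0.0, 0))
            totals[action] = (reward_sum + mean_reward * count, n + count)
    return {a: (s / n, n) for a, (s, n) in totals.items() if n > 0}


# Hypothetical grasp strategies tried by two robots overnight.
robot_a = {"grip_top": (0.9, 40), "grip_side": (0.5, 10)}
robot_b = {"grip_top": (0.7, 10), "grip_front": (0.2, 5)}

shared = merge_estimates([robot_a, robot_b])
best = max(shared, key=lambda a: shared[a][0])
print(best, shared[best])
```

After merging, every robot can start from the shared table, so an action explored by only one robot (here `grip_front`) becomes known to all of them without each having to try it.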

10) IoT and AI layer for the Enterprise

We can extend the idea of 'machines teaching other machines' more generically within the Enterprise. Enterprises are getting an 'AI layer'. Any entity in an enterprise can train other 'peer' entities in the Enterprise. That could be buildings learning from other buildings, or planes, or oil rigs, or even printers! Training could be dynamic and ongoing (for example, one building learns about energy consumption and 'teaches' the next building). IoT is a key source of data for the Enterprise IoT system. Currently, the best example of this approach is Salesforce.com and Einstein. Reinforcement learning is the key technology that drives the IoT and AI layer for the Enterprise.

"By end of 2016 more than 80 of the world's 100 largest enterprise software companies by revenues will have integrated cognitive technologies into their products." (source Deloitte).

Far from the AI Winter, we seem to be suddenly in the midst of an AI spring. And IoT suddenly has a clear business model in this AI Spring.

Conclusion

From the above discussion, we see many similarities but also significant differences when it comes to Data Science for IoT. There are obvious differences (for example, in the use of hardware and radio networks). But for me, the most exciting development is that IoT powers new greenfield domains such as drones, self-driving cars, Enterprise AI, cloud robotics and many more. We cover many of these ideas in the Data Science for IoT course.

