8 Ways to Improve Your Search Application this Week


By Daniel Tunkelang and Grant Ingersoll

A search team’s work is never done. Even after you get your search application out the door, the fanfare quickly subsides and you have to figure out the features for your next release. There are many places to start improving and optimizing and it’s easy to get bogged down. The good news is that there are several easy ways to improve your search application’s quality and performance.

Here’s a quick rundown of the top eight ways to improve your search application immediately:

Optimize your top 20 queries.

Take a look at the query reports from most search applications, and you’ll see a head-tail distribution: a disproportionate fraction of query volume from a small set of head queries, followed by a long tail of less frequent queries. Your business owners or stakeholders may be tempted to attack tail queries first, since they typically have the lowest performance. But often a better approach is to tackle the result quality of head queries, since improving them delivers more return on your investment.

Run a report of your top 20 most frequent search queries and look at the results for each one.

- Are these the right documents and data to show for each of these queries? Get your subject matter experts to weigh in.

- Look at user behavior: is the top result the one most commonly clicked on? Do users re-run the query with additional words and then click on a different result? Use boost, block, bury, and other adjustments to manually tune the ranking for your application’s top queries. This goes a long way towards giving your most common queries the best possible search experience.

- You can also apply statistical methods and train machine learning models for these queries, since you’ll have sufficient data. That’s something you can learn more about in our Search with Machine Learning course!
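As a starting point for the report described above, here is a minimal Python sketch. It assumes you can export your query log as a plain list of query strings; the function name `top_queries` and the sample log are hypothetical, and the light normalization (trimming and lowercasing) is an assumption you may want to adjust for your application.

```python
from collections import Counter

def top_queries(query_log, n=20):
    """Return (query, count, share_of_volume) for the n most frequent queries."""
    # Normalize lightly so "Shoes" and " shoes " count as the same query.
    counts = Counter(q.strip().lower() for q in query_log if q.strip())
    total = sum(counts.values())
    return [(q, c, c / total) for q, c in counts.most_common(n)]

# Hypothetical log: one entry per search request.
log = ["shoes", "Shoes", "red dress", "shoes ", "laptop", "red dress", "usb-c hub"]

for query, count, share in top_queries(log, n=3):
    print(f"{query!r}: {count} searches ({share:.0%} of volume)")
```

The share column makes the head-tail distribution visible: if a handful of queries account for a large fraction of volume, those are the ones to review first.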

Be sure to baseline your metrics before putting these practices in place. That way you can measure the impact of your changes on searcher happiness, business outcomes, and the overall search experience.

Fix frequent zero-results queries.

After you’ve optimized your most common head queries, where should you look next? Focus on zero-results queries – that is, queries that don’t return even a single search result and lead users to a dead end.

Specifically focus on frequent zero-results queries. While you may not be able to avoid all zero-results queries, you should at least be able to address the zero-results queries that account for a meaningful share of traffic.
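One way to find these queries is sketched below in Python. It assumes each logged search event records the query and the number of results returned; the event format, the function name, and the `min_count` cutoff are all hypothetical choices for illustration.

```python
from collections import Counter

def frequent_zero_result_queries(search_events, min_count=2):
    """Return (query, count, share_of_all_searches) for zero-results queries
    seen at least min_count times, most frequent first."""
    total = len(search_events)
    zero = Counter(query for query, num_results in search_events if num_results == 0)
    return [(q, c, c / total) for q, c in zero.most_common() if c >= min_count]

# Hypothetical events: (query, number of results returned).
events = [
    ("iphone 15 case", 42),
    ("ipohne case", 0),
    ("ipohne case", 0),
    ("gift card", 0),
    ("laptop", 120),
    ("ipohne case", 0),
]

for query, count, share in frequent_zero_result_queries(events):
    print(f"{query!r}: {count} zero-results searches ({share:.0%} of all searches)")
```

Sorting by frequency keeps attention on the zero-results queries that matter for traffic, rather than on one-off typos in the tail.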

If a query has zero results, ask yourself: are there really no documents in the search index that would be relevant to the query? If there is relevant content that you’re not returning, explore ways to adjust your retrieval strategy. Or make manual changes, such as editorially rewriting zero-results queries.

It’s also possible that the problem is not with query processing, but rather how the content is indexed. Make sure that the folks who maintain content are aware of zero-results queries, since they’ll want to know and may be in the best position to help.

Conversely, look at queries that produce too many search results. Returning too many results is often a symptom of overly aggressive retrieval that promotes recall at the expense of precision. If you’re not familiar with precision and recall, you can learn more in our Search Fundamentals course!

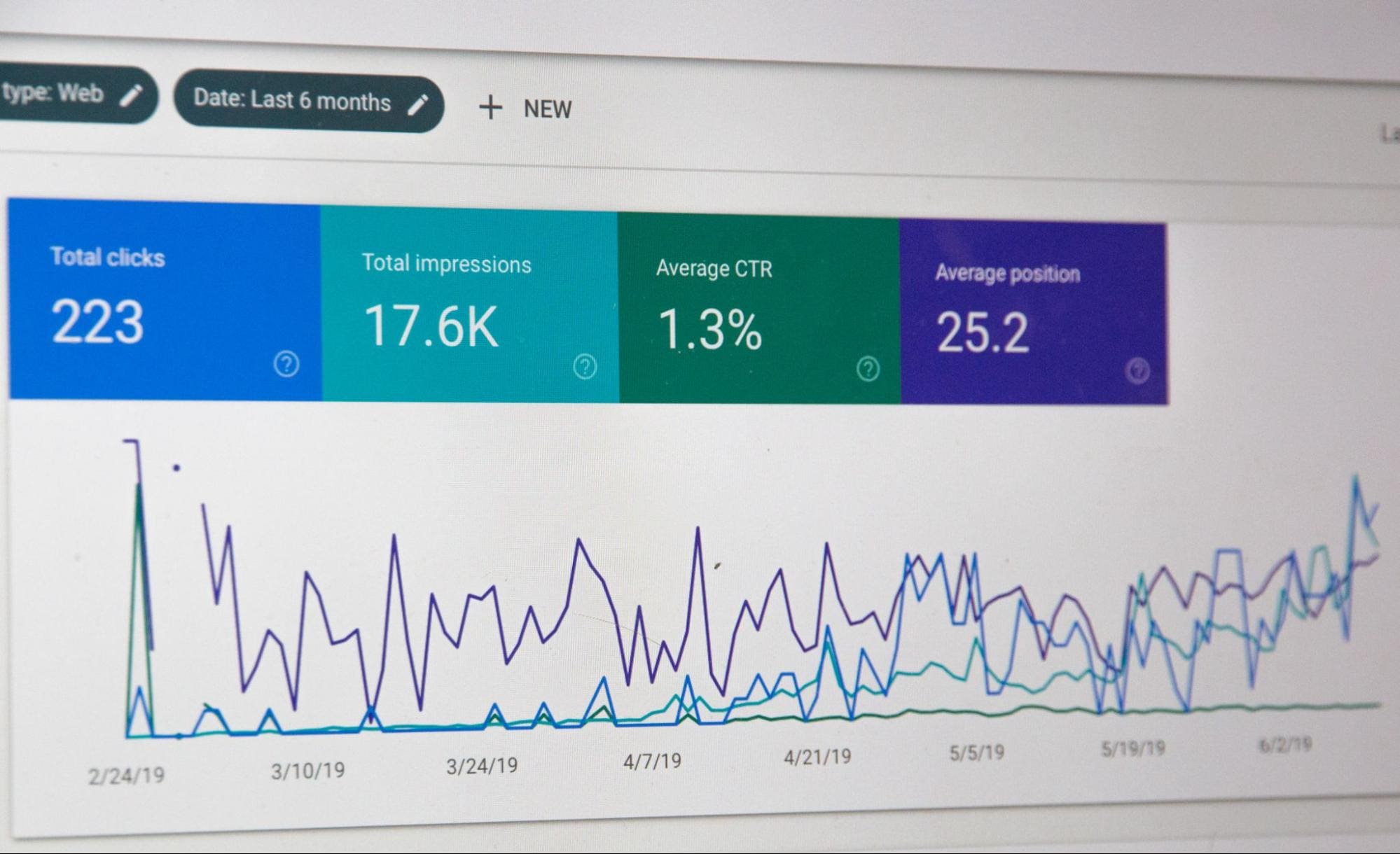

Measure search behavior.

A saying often attributed to Lord Kelvin goes: if you don’t measure it, you can’t improve it. And if you don’t keep measuring it, you won’t be able to tell if your improvements are even working.

There are many ways to measure the success of a search application. Here are some fundamental search behavior metrics you should track:

- Search volume tells you how much traffic your search application has. You should measure the total volume of search queries as well as the number of unique users on a daily, weekly, and monthly basis. If your search volume is low, you might want to make your search box larger or more prominent.

- Search latency tells you how efficiently your search application is processing queries. If the latency is too high, your users are feeling the pain! You may need to tune the application or invest in more computational resources. Also look at how many queries per second the search application is handling. A high or bursty search volume can strain your computational capacity and drive up latency, requiring you to scale your capacity to accommodate traffic spikes.

- Clickthrough rate measures how often a user clicks on any result in a set of search results. This is a leading indicator of relevance, since searchers tend to click on results that align with their search intent. You’ll also want to look at downstream metrics, such as the add-to-cart rate on an ecommerce site.

- Click position goes beyond clickthrough rate to measure the position of the result that got clicked on. Most users don’t like having to scroll or page through a lot of results to find what they’re looking for. If you don’t see most clicks concentrated in the top few results, you may need to adjust your ranking algorithm.
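The click-based metrics above can be computed from a simple search log. The Python sketch below assumes each logged search carries a list of the 1-based positions that were clicked (empty if nothing was clicked); the record layout and function names are hypothetical.

```python
def clickthrough_rate(searches):
    """Fraction of searches that received at least one click."""
    if not searches:
        return 0.0
    return sum(1 for s in searches if s["clicked_positions"]) / len(searches)

def mean_first_click_position(searches):
    """Average position of the first (highest-ranked) clicked result, or None if no clicks."""
    firsts = [min(s["clicked_positions"]) for s in searches if s["clicked_positions"]]
    return sum(firsts) / len(firsts) if firsts else None

def share_of_clicks_in_top_k(searches, k=3):
    """Fraction of all clicks that landed in the top k positions, or None if no clicks."""
    clicks = [p for s in searches for p in s["clicked_positions"]]
    return sum(1 for p in clicks if p <= k) / len(clicks) if clicks else None

# Hypothetical log: positions are 1-based ranks in the result list.
searches = [
    {"query": "shoes", "clicked_positions": [1]},
    {"query": "red dress", "clicked_positions": [2, 5]},
    {"query": "laptop", "clicked_positions": []},
    {"query": "usb-c hub", "clicked_positions": [7]},
]

print("CTR:", clickthrough_rate(searches))
print("Mean first-click position:", mean_first_click_position(searches))
print("Share of clicks in top 3:", share_of_clicks_in_top_k(searches, k=3))
```

Tracking these per day or per week, and per query segment, makes it easier to spot when a ranking change pushes clicks further down the page.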

Metrics are key to showing return on investment for a search application. The better you can measure search behavior, the better you can justify investing in search improvements.

Monitor data quality issues.

In most organizations, there’s a very clear and rapid escalation path for when an application has performance, availability, or scalability issues. Everyone knows how to report the incident, who needs to get notified, and who needs to get to work to ensure a quick resolution.

But what about data issues? The quality of a search experience is directly connected to the application having a durable connection to fresh, timely data. This is especially important when user signals and behavior like queries and clicks are being captured and aggregated for ranking and personalization. Data quality issues are critical search application issues.

How do you detect data quality issues? Here are a couple of things you can do:

- Set up alerts for anomalous metrics. If a table usually contains 1,000,000 results but has 2,000,000 results or no results this week, that should trigger alarm bells and emails. In general, changes above some threshold (e.g., +/- 10%) are good warning signs. But don’t go overboard: if your monitoring system cries wolf too often, people will stop paying attention and will miss critical alerts.

- Implement integrity checks to ensure basic data quality and consistency. For example, fields that represent years and prices should be numbers in appropriate ranges. Fields used as join keys (e.g., category ids) should exist in corresponding tables. If you can detect data quality issues immediately, you’re less likely to have to dig your way out of them later.
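Both checks can start out very small. The Python sketch below is one possible shape, not a full monitoring system: the 10% threshold comes from the example above, while the function names, field names (`year`, `price`, `category_id`), and the allowed year range are assumptions made for illustration.

```python
def row_count_alert(previous_count, current_count, threshold=0.10):
    """Return True when the row count changed by more than the relative threshold."""
    if previous_count == 0:
        # Any rows appearing in a previously empty table is worth a look.
        return current_count != 0
    change = abs(current_count - previous_count) / previous_count
    return change > threshold

def integrity_errors(record, valid_category_ids, year_range=(1900, 2100)):
    """Return a list of human-readable problems found in one record."""
    errors = []
    year = record.get("year")
    if not isinstance(year, int) or not year_range[0] <= year <= year_range[1]:
        errors.append("year is missing, not an integer, or out of range")
    price = record.get("price")
    if not isinstance(price, (int, float)) or price < 0:
        errors.append("price is missing, not a number, or negative")
    if record.get("category_id") not in valid_category_ids:
        errors.append("category_id does not exist in the category table")
    return errors

# Hypothetical data for illustration.
print(row_count_alert(1_000_000, 2_000_000))  # doubled: should alert
print(row_count_alert(1_000_000, 1_050_000))  # +5%: within threshold
print(integrity_errors({"year": "2023", "price": -5, "category_id": 99}, {7, 8}))
```

Keeping the threshold as a parameter makes it easier to loosen a check that cries wolf too often instead of disabling it.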

Establishing proper ownership for each data source and ensuring there is an escalation path when data quality issues arise is a good first step towards keeping the data quality of your application as robust as the other metrics like scalability and performance.

Wrangle autocomplete.

Autocomplete - also called typeahead - isn’t just a nice-to-have feature anymore. Users expect it as part of a useful, responsive search experience. Typing a few letters and having the system react immediately with relevant suggestions gives a user a fantastic first impression of the power and value of the search application they’re using.

Autocomplete not only reduces the searcher’s effort, but also guides searchers towards better-performing queries, helping to ensure a successful search experience before they’ve even done their first search.

Ideally, autocomplete should handle all head queries – that is, the most common queries that users make. In general, autocomplete should suggest queries that complete what the user has typed in so far, promoting popular queries that lead to good results and a high clickthrough rate.
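A minimal Python sketch of that idea follows. It assumes you already have per-query popularity and clickthrough statistics; the `query_stats` structure, the `min_ctr` cutoff, and the function name are hypothetical. It uses a simple linear scan for clarity, where a production system would more likely use a prefix index.

```python
def suggest(prefix, query_stats, limit=5, min_ctr=0.2):
    """Suggest popular past queries that start with prefix and tend to get clicks."""
    prefix = prefix.strip().lower()
    if not prefix:
        return []
    candidates = [
        (query, stats)
        for query, stats in query_stats.items()
        if query.startswith(prefix) and stats["ctr"] >= min_ctr
    ]
    # Most popular queries first.
    candidates.sort(key=lambda item: item[1]["count"], reverse=True)
    return [query for query, _ in candidates[:limit]]

# Hypothetical statistics aggregated from the query log.
query_stats = {
    "running shoes": {"count": 900, "ctr": 0.45},
    "running shorts": {"count": 400, "ctr": 0.38},
    "running sheos": {"count": 50, "ctr": 0.02},  # misspelling with poor results
    "rain jacket": {"count": 700, "ctr": 0.41},
}

print(suggest("run", query_stats))
```

Filtering on clickthrough rate keeps misspellings and dead-end queries out of the suggestions even when they are typed fairly often.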

Optimize the search design.

When you’re focused on the back-end internals of your search application, it’s easy to forget that what users experience most directly is the front end. Be sure to dedicate time and resources to measuring and improving the visual and tactile experience of your search application.

Some things to look into:

- Mobile experience should reflect the challenge of reading, tapping, and typing on a small screen. Developers who spend most of their day on a desktop sometimes forget that many of their users interact with search only through mobile devices.

- Size matters – specifically the size of the search box! A larger search box is not only more noticeable, but also nudges users to type longer queries. Size, color, and placement can dramatically affect search usage.

- Platforms matter! Make sure to test your application in a variety of device / operating system / browser combinations. You may discover bugs or violations of standard design patterns. Those are usually easy to fix, but you have to be aware of them first.

Back-end search developers often dismiss front-end concerns as superficial. But front-end decisions have a critical impact on the search experience, often making an even bigger difference than decisions about retrieval and ranking. So invest your time and resources accordingly.

Bring in real human beings.

When you’re deep in development and coding, it’s easy to get lost in abstractions and hypotheticals. You can make guesses about what the mythic user persona wants to do and how they’ll behave. But those are just guesses – and you may not be in the best position to empathize with your current or future users.

The easiest way to break the spell of uninformed user expectations is to bring in real users and observe them in action. It’s amazing – and humbling – how much you can learn from observing even a handful of users struggling to use your search application and missing what you thought was obvious.

Having access to a reliable pool of users that represent your primary target audience is a quick and cheap way to see if your search application’s development is going in the right direction, especially as you prepare to release new features.

Analyze, hypothesize, experiment, iterate.

As you ponder your backlog of what to work on for your next sprint, analyze what isn’t working. Look for areas where metrics are low or have started to drop. Find groups of queries or users that are consistently underperforming. Spend an afternoon with your team looking at query or session failures, and identify likely root causes related to retrieval or ranking.

When you find problems, try to generalize them and estimate the size of the associated opportunities for improvement. With luck, you’ll discover ways to make small changes with high impact.

Take a scientific approach to solving problems. Make a hypothesis: If we change this, then we expect that to happen and we’ll know it works if this metric changes. Then experiment: perform an A/B test on a portion of your traffic to confirm or reject your hypothesis.
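To decide whether an A/B test confirms or rejects a hypothesis about clickthrough rate, one common option is a two-proportion z-test. The Python sketch below is an illustration under stated assumptions, not a complete experimentation framework: it assumes each search is an independent trial, the function name is hypothetical, and the traffic numbers are made up.

```python
import math

def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Compare clickthrough rates of control (A) and treatment (B).

    Returns (z, two_sided_p_value) using the pooled-proportion z-test.
    """
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical experiment: searches with a click / total searches per arm.
z, p = two_proportion_z_test(clicks_a=3000, n_a=10_000, clicks_b=3200, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Decide on the metric and the significance threshold before running the experiment, so the result confirms or rejects the hypothesis you actually stated.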

Finally, iterate. The more hypotheses you generate and test, the more improvements you’ll see. Better search is rarely the result of one big change. Instead, it’s the accumulation of lots of little changes, each addressing some portion of the search experience.

Ready for more?

A search team’s work is never done. With a spirit of investigation and enthusiasm, you’ll keep improving and iterating on your search applications, and they’ll become more and more successful at connecting your users to the information they’re looking for.

Ready to learn more? Join our next run of Search Fundamentals and Search with Machine Learning, kicking off in October!