Chatbot Arena: The LLM Benchmark Platform

Chatbot Arena is a benchmark platform for large language models, where the community can contribute new models and evaluate them.

Image by Author

We all know that large language models (LLMs) have been taking the world by storm, and it’s been a lot to take in in such a short amount of time.

What is Chatbot Arena?

To shake things up a little more, there is now Chatbot Arena, an LLM benchmark platform created by the Large Model Systems Organization (LMSYS Org), an open research organization founded by students and faculty from UC Berkeley.

Their overall aim is to make large models accessible to everyone through the co-development of open datasets, models, systems, and evaluation tools. The LMSYS team trains large language models and makes them widely available, and it also develops distributed systems to accelerate LLM training and inference.

The Need for an LLM Benchmark

With the continuous hype around ChatGPT, there has been rapid growth in open-source LLMs that have been fine-tuned to follow instructions. Examples include Alpaca and Vicuna, which are based on LLaMA and can respond helpfully to user prompts.

However, when a field grows this quickly, it is difficult for the community to keep up with new developments and to benchmark these models effectively. Benchmarking LLM assistants is especially challenging because the questions they are asked are open-ended, so there is often no single correct answer to score against.

For this reason, human evaluation is needed, and Chatbot Arena uses pairwise comparison: models are compared two at a time, and a person judges which of the two performed better.
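To make the idea of pairwise comparison concrete, here is a minimal sketch that tallies human votes into head-to-head win rates. It assumes each vote is recorded as a hypothetical `(model_a, model_b, winner)` tuple, with `winner` being `"a"`, `"b"` or `"tie"`; the model names and the tie option are illustrative assumptions, not Chatbot Arena's actual data format.

```python
from collections import defaultdict

# A vote is (model_a, model_b, winner); winner is "a", "b" or "tie".
# This record format is a hypothetical assumption for illustration.
Vote = tuple[str, str, str]


def pairwise_win_rates(votes: list[Vote]) -> dict[tuple[str, str], float]:
    """For each ordered pair (x, y), return the share of x-vs-y battles won by x.

    A tie counts as half a win for each side.
    """
    wins: dict[tuple[str, str], float] = defaultdict(float)
    games: dict[tuple[str, str], int] = defaultdict(int)
    for a, b, winner in votes:
        score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        # Record the result from both models' points of view.
        wins[(a, b)] += score_a
        wins[(b, a)] += 1.0 - score_a
        games[(a, b)] += 1
        games[(b, a)] += 1
    return {pair: wins[pair] / games[pair] for pair in games}


votes = [
    ("model_x", "model_y", "a"),
    ("model_x", "model_y", "a"),
    ("model_y", "model_x", "tie"),
    ("model_x", "model_z", "b"),
]
rates = pairwise_win_rates(votes)
```

Here `model_x` wins 2.5 of its 3 battles against `model_y`, so `rates[("model_x", "model_y")]` is about 0.83. A win-rate table like this is easy to read, but it only covers pairs that have actually met, which is one reason a single rating per model (such as Elo) is more convenient for a leaderboard.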

How Does Chatbot Arena Work?

In the Chatbot Arena, a user can chat with two anonymous models side by side, form their own opinion, and vote for the model they think is better. Once the user has voted, the names of the models are revealed. Users can then continue chatting with the same two models or start afresh with two new, randomly chosen anonymous models.
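The battle flow described above (two randomly chosen models, hidden identities, a vote, then a reveal) can be sketched as a small state machine. This is a hypothetical illustration, not LMSYS code: the `Battle` class, `new_battle` function and the `"a"`/`"b"`/`"tie"` choices are all assumptions made for the example.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Battle:
    """One anonymous side-by-side battle (hypothetical sketch)."""
    model_a: str
    model_b: str
    winner: Optional[str] = None  # "a", "b" or "tie" once the user has voted

    def visible_names(self) -> tuple[str, str]:
        # Identities stay hidden until a vote has been cast.
        if self.winner is None:
            return ("Model A", "Model B")
        return (self.model_a, self.model_b)

    def vote(self, choice: str) -> None:
        if choice not in ("a", "b", "tie"):
            raise ValueError(f"unknown choice: {choice!r}")
        if self.winner is not None:
            raise RuntimeError("this battle already has a vote")
        self.winner = choice


def new_battle(models: list[str], rng: random.Random) -> Battle:
    # Pick two *different* models uniformly at random.
    a, b = rng.sample(models, 2)
    return Battle(a, b)


rng = random.Random(0)
battle = new_battle(["model_x", "model_y", "model_z"], rng)
print(battle.visible_names())  # anonymous until the vote
battle.vote("a")
print(battle.visible_names())  # real names revealed
```

"Starting afresh" corresponds to calling `new_battle` again. Hiding the names until after the vote is what keeps the judgement blind, so that a user's existing opinion of a well-known model does not sway the comparison.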

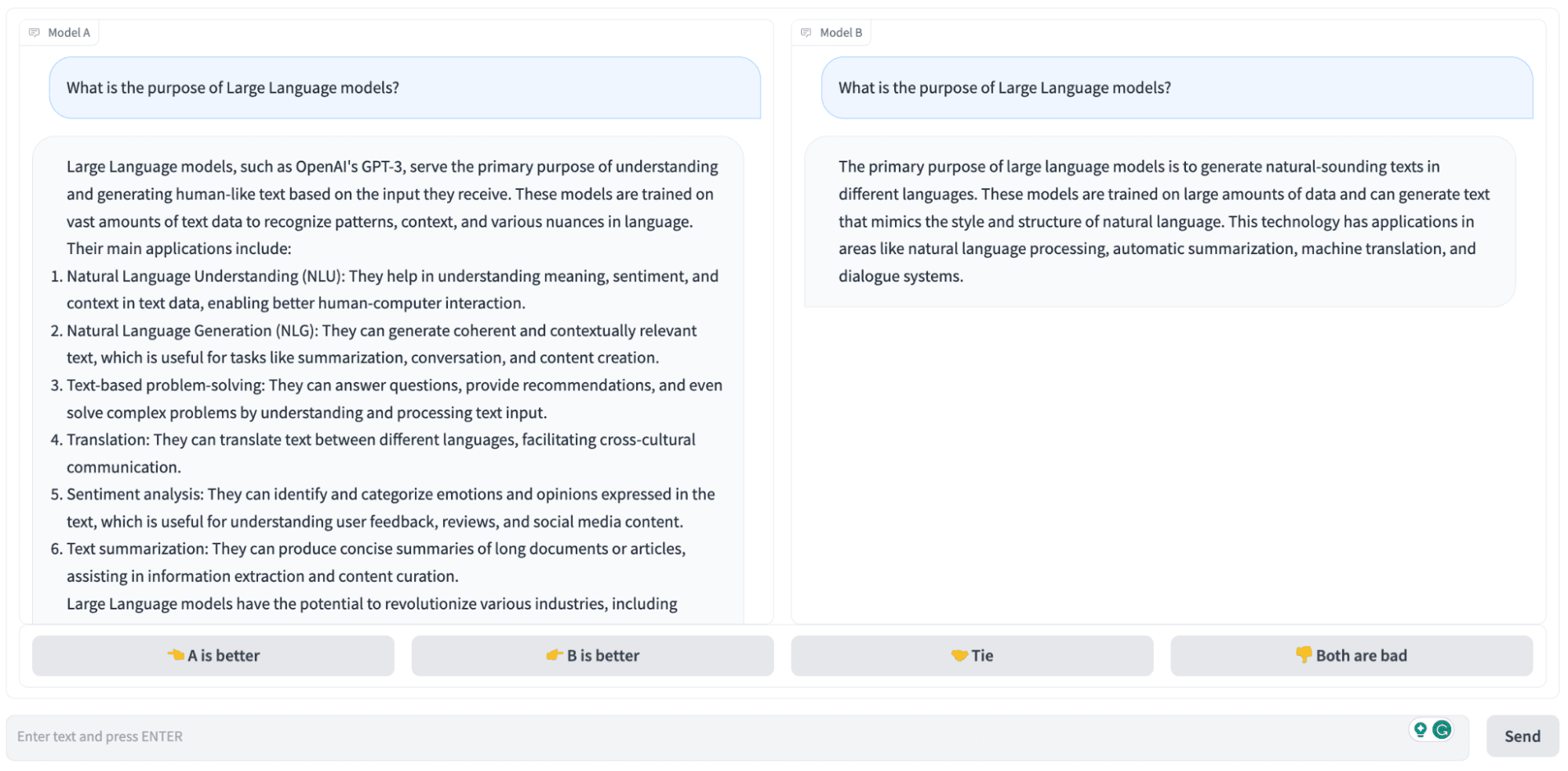

You can either chat with two anonymous models side by side or pick the models you want to chat with. Below is a screenshot of a chat with two anonymous models in an LLM battle!

Image Screenshot by Author

The collected votes are then converted into Elo ratings, which are used to rank the models on a leaderboard. The Elo rating system is a method used in games such as chess to calculate the relative skill levels of players. The difference in rating between two players acts as a predictor of the outcome of a match between them.
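As a rough sketch of how votes could become Elo ratings: under the standard Elo model, the expected score of A against B is `1 / (1 + 10 ** ((R_B - R_A) / 400))`, and after each match A's rating moves by `K * (actual - expected)`. The code below replays a list of votes in order. The vote format and the parameters (`K = 4`, a starting rating of 1000, scale 400, base 10) are illustrative assumptions, not a claim about the exact settings behind the leaderboard.

```python
from collections import defaultdict

# Illustrative parameters (assumptions, not necessarily the leaderboard's settings).
K = 4              # how far a single vote can move a rating
SCALE = 400        # a 400-point gap means the favourite is expected to score ~91%
BASE = 10
INIT_RATING = 1000


def expected_score(r_a: float, r_b: float) -> float:
    """Expected score of A against B under the Elo model (between 0 and 1)."""
    return 1.0 / (1.0 + BASE ** ((r_b - r_a) / SCALE))


def compute_elo(battles: list[tuple[str, str, str]]) -> dict[str, float]:
    """Replay (model_a, model_b, winner) votes in order; winner is "a", "b" or "tie"."""
    ratings: dict[str, float] = defaultdict(lambda: float(INIT_RATING))
    for a, b, winner in battles:
        e_a = expected_score(ratings[a], ratings[b])
        s_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
        # Zero-sum update: whatever A gains, B loses.
        ratings[a] += K * (s_a - e_a)
        ratings[b] += K * ((1.0 - s_a) - (1.0 - e_a))
    return dict(ratings)


battles = [("model_x", "model_y", "a")] * 3 + [("model_y", "model_z", "tie")]
ratings = compute_elo(battles)
leaderboard = sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)
for name, rating in leaderboard:
    print(f"{name}: {rating:.1f}")
```

Because the updates are applied one vote at a time, the final ratings depend on the order of the votes. A common way to reduce that sensitivity is to recompute the ratings over many shuffled orderings and average the results.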

As of today, the 5th of May 2023, this is what the leaderboard for the Chatbot Arena looks like:

Image by Chatbot Arena

If you would like to see how the ratings are computed, you can have a look at the notebook and play around with the voting data yourself.

What a great and fun idea, right?

How Do I Get Involved?

The Chatbot Arena team invites the entire community to join its LLM benchmarking quest, either by contributing your own models or by hopping into the Chatbot Arena to vote on anonymous models.

Visit the Arena to vote on which model you think is better, and if you want to test out a specific model, you can follow this guide to add it to the Chatbot Arena.

Wrapping it up

So is there more to come from Chatbot Arena? According to the team, they plan to work on:

- Adding more closed-source models

- Adding more open-source models

- Releasing periodically updated leaderboards (for example, monthly)

- Using better sampling algorithms, tournament mechanisms, and serving systems to support a larger number of models

- Providing a fine-grained ranking system for different task types

Have a play with Chatbot Arena and let us know in the comments what you think!

Nisha Arya is a Data Scientist, Freelance Technical Writer and Community Manager at KDnuggets. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is benefiting, or could benefit, the longevity of human life. A keen learner, she seeks to broaden her tech knowledge and writing skills while helping guide others.