8 Open-Source Alternatives to ChatGPT and Bard

Discover the widely used open-source frameworks and models for creating your own ChatGPT-like chatbots, integrating LLMs, or launching your AI product.

Image by Author

1. LLaMA

The LLaMA project encompasses a set of foundational language models that range in size from 7 billion to 65 billion parameters. The models were trained on trillions of tokens, using publicly available datasets exclusively. According to the paper, LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, such as Chinchilla-70B and PaLM-540B.

Image from LLaMA

Resources:

- Research Paper: LLaMA: Open and Efficient Foundation Language Models (arxiv.org)

- GitHub: facebookresearch/llama

- Demo: Baize Lora 7B

2. Alpaca

Stanford Alpaca claims that it can compete with ChatGPT and that anyone can reproduce it for less than $600. Alpaca 7B is fine-tuned from the LLaMA 7B model on 52K instruction-following demonstrations.

Training recipe | Image from Stanford CRFM
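
To make "instruction-following demonstrations" concrete, here is a minimal sketch of how one such record might be turned into a training prompt and target. The field names (`instruction`, `input`, `output`) mirror the Alpaca dataset, and the template is modeled on the one in the tatsu-lab/stanford_alpaca repository; the exact wording and the `format_example` helper are assumptions for illustration, not the project's canonical code.

```python
# Illustrative sketch: render one instruction-following record into a prompt.
# The helper name and the exact template wording are assumptions.

PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)

PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def format_example(record):
    """Return (prompt, target); fine-tuning teaches the model to emit target."""
    has_input = bool(record.get("input"))
    template = PROMPT_WITH_INPUT if has_input else PROMPT_NO_INPUT
    prompt = template.format(
        instruction=record["instruction"],
        input=record.get("input", ""),
    )
    return prompt, record["output"]


example = {
    "instruction": "Add the two numbers.",
    "input": "2 and 3",
    "output": "5",
}
prompt, target = format_example(example)
print(prompt + target)
```

Records without an `input` fall back to the shorter template, so the model does not see an empty "Input" section.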

Resources:

- Blog: Stanford CRFM

- GitHub: tatsu-lab/stanford_alpaca

- Demo: Alpaca-LoRA (The official demo was dropped, and this is a recreation of the Alpaca model)

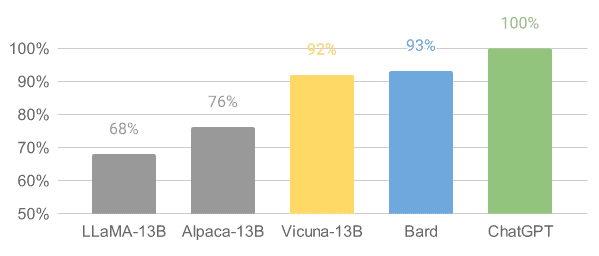

3. Vicuna

Vicuna is fine-tuned from the LLaMA model on user-shared conversations collected from ShareGPT. In a preliminary evaluation that used GPT-4 as the judge, Vicuna-13B achieved more than 90% of the quality of OpenAI ChatGPT and Google Bard, and it outperformed LLaMA and Stanford Alpaca in more than 90% of cases. Training Vicuna cost around $300.

Image from Vicuna

Resources:

- Blog post: Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality

- GitHub: lm-sys/FastChat

- Demo: FastChat (lmsys.org)

4. OpenChatKit

OpenChatKit: Open-Source ChatGPT Alternative is a complete toolkit for creating your own chatbot. It provides instructions for training and fine-tuning your own instruction-tuned large language model, an extensible retrieval system for keeping the bot's responses up to date, and bot moderation for filtering out questions it should not answer.
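
As a rough mental model of how moderation, retrieval, and the chat model fit together, here is a toy pipeline. It is a sketch only: the blocklist, the documents, the word-overlap scoring, and all helper names are hypothetical stand-ins, not OpenChatKit code. The `<human>:` and `<bot>:` tags follow the prompt format described for GPT-NeoXT-Chat-Base-20B, which I am assuming here.

```python
# Toy pipeline: moderate the question, retrieve context, then build the prompt.
# Blocklist, documents, scoring, and helper names are all hypothetical.

BLOCKED_TERMS = {"password", "credit card"}

DOCUMENTS = [
    "OpenChatKit was announced by Together.",
    "GPT-NeoXT-Chat-Base-20B is a 20B parameter chat model.",
    "Alpacas are domesticated South American camelids.",
]


def is_allowed(question):
    """Reject questions with a blocked term (stand-in for a moderation model)."""
    lowered = question.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def retrieve(question, docs, k=1):
    """Rank documents by word overlap with the question (stand-in for an index)."""
    q_words = set(question.lower().split())
    ranked = sorted(
        docs,
        key=lambda d: len(q_words & set(d.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question):
    """Return the prompt for the chat model, or None if moderation rejects it."""
    if not is_allowed(question):
        return None
    context = "\n".join(retrieve(question, DOCUMENTS))
    return f"{context}\n<human>: {question}\n<bot>:"


print(build_prompt("who announced openchatkit"))
```

In a real deployment, the blocklist would be replaced by a moderation model and the word-overlap ranking by a proper search index, but the control flow stays the same.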

Image from TOGETHER

As we can see, the GPT-NeoXT-Chat-Base-20B model has outperformed the base GPT-NeoX model on question answering, extraction, and classification tasks.

Resources:

- Blog Post: Announcing OpenChatKit — TOGETHER

- GitHub: togethercomputer/OpenChatKit

- Demo: OpenChatKit

- Model card: togethercomputer/GPT-NeoXT-Chat-Base-20B

5. GPT4ALL

GPT4ALL is a community-driven project trained on a massive curated corpus of assistant interactions, including code, stories, depictions, and multi-turn dialogue. The team has released the datasets, model weights, data curation process, and training code to promote open-source development. They have also released quantized 4-bit versions of the model that can run on your laptop, and you can even use a Python client to run inference.

Gif from GPT4ALL
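
To see why 4-bit quantization lets a model fit on a laptop, consider the toy example below. It maps a block of floating-point weights to small integers plus a single scale factor. This is a simplified symmetric scheme chosen for illustration; it is not the quantization format GPT4All actually ships, and the function names are assumptions.

```python
# Toy symmetric 4-bit quantization of one block of weights.
# Not GPT4All's actual scheme; names and block handling are assumptions.

def quantize_4bit(weights):
    """Map floats to integer codes in [-7, 7] plus one float scale per block."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    # Clamp after rounding so every code fits in a signed 4-bit value.
    codes = [max(-7, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_4bit(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.12, -0.50, 0.33, 0.07, -0.21, 0.44, -0.05, 0.00]
codes, scale = quantize_4bit(weights)
restored = dequantize_4bit(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print("largest rounding error:", max_error)
```

Each weight now needs 4 bits instead of 16 or 32, at the cost of a rounding error of at most half a scale step per weight.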

Resources:

- Technical Report: GPT4All

- GitHub: nomic-ai/gpt4all

- Demo: GPT4All (non-official)

- Model card: nomic-ai/gpt4all-lora · Hugging Face

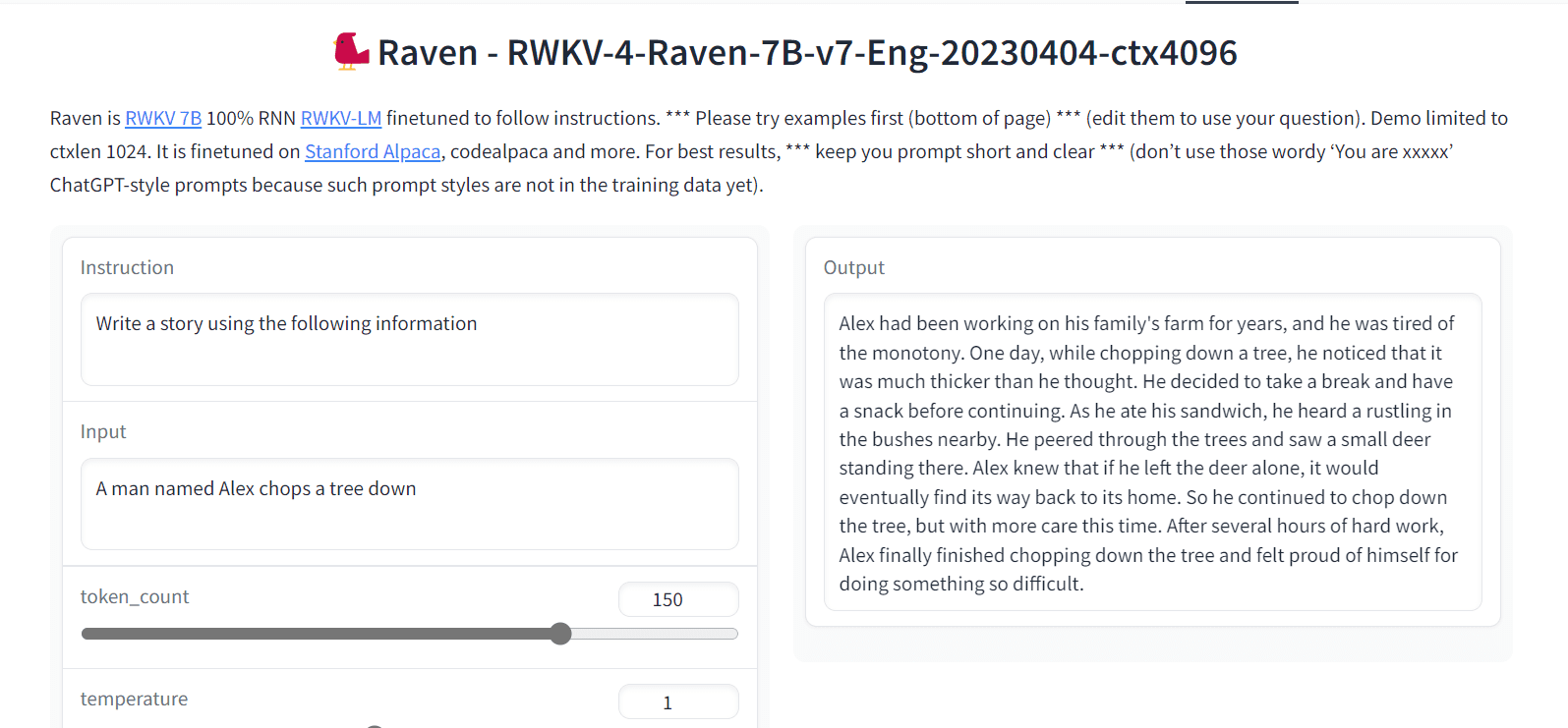

6. Raven RWKV

Raven RWKV 7B is an open-source chatbot powered by the RWKV language model, and it produces results similar to ChatGPT. The model uses an RNN architecture that can match transformers in quality and scaling while being faster and saving VRAM. Raven was fine-tuned on Stanford Alpaca, code-alpaca, and other datasets.

Image from Raven RWKV 7B
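
The VRAM saving comes from the recurrent formulation: instead of re-reading every previous token at each step, the model carries a small fixed-size state forward. The toy below shows the idea with a decayed weighted average computed two ways. It is loosely inspired by this style of model but deliberately simplified (scalar values, a single decay rate, no numerical stabilisation), so treat it as an illustration of the principle rather than RWKV's implementation; the function names are my own.

```python
import math

# Toy illustration: an attention-like weighted average rewritten as a recurrence.
# Simplified on purpose; this is not the RWKV implementation.


def weighted_average_full(keys, values, decay):
    """Attention-like form: at each step, re-read every earlier token."""
    outputs = []
    for t in range(len(keys)):
        num = sum(
            decay ** (t - i) * math.exp(keys[i]) * values[i]
            for i in range(t + 1)
        )
        den = sum(decay ** (t - i) * math.exp(keys[i]) for i in range(t + 1))
        outputs.append(num / den)
    return outputs


def weighted_average_recurrent(keys, values, decay):
    """RNN form: carry only two running numbers, whatever the sequence length."""
    num, den = 0.0, 0.0
    outputs = []
    for k, v in zip(keys, values):
        num = decay * num + math.exp(k) * v
        den = decay * den + math.exp(k)
        outputs.append(num / den)
    return outputs


keys = [0.1, -0.3, 0.5, 0.0]
values = [1.0, 2.0, -1.0, 4.0]
print(weighted_average_full(keys, values, 0.9))
print(weighted_average_recurrent(keys, values, 0.9))
```

Both functions produce the same outputs up to floating-point rounding, but the recurrent version keeps only `num` and `den` in memory, while the full version needs the whole history of keys and values.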

Resources:

- GitHub: BlinkDL/ChatRWKV

- Demo: Raven RWKV 7B

- Model card: BlinkDL/rwkv-4-raven

7. OPT

OPT: Open Pre-trained Transformer Language Models is not as capable as ChatGPT, but it has shown remarkable capabilities for zero- and few-shot learning and stereotypical bias analysis. You can also integrate it with Alpa, Colossal-AI, CTranslate2, and FasterTransformer to get even better results.

Note: It is on the list because of its popularity. At the time of writing, it had 624,710 monthly downloads in the text generation category.

Image from (arxiv.org)
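
Zero- and few-shot learning means steering a pretrained model purely through the prompt, without updating its weights. A minimal sketch of building such a prompt is shown below. The sentiment task, the "Review" and "Sentiment" labels, and the `build_few_shot_prompt` helper are hypothetical; the resulting string would be passed to whichever text-generation model you use.

```python
# Build a zero- or few-shot prompt for a text-completion model.
# The task, field labels, and helper name are hypothetical.

def build_few_shot_prompt(task, examples, query):
    """Prepend worked examples so the model can infer the task from context."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # Leave the final label blank for the model to complete.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


task = "Classify the sentiment of each review as positive or negative."
examples = [
    ("The battery lasts all day.", "positive"),
    ("It broke after one week.", "negative"),
]

few_shot = build_few_shot_prompt(task, examples, "Setup was quick and painless.")
zero_shot = build_few_shot_prompt(task, [], "Setup was quick and painless.")
print(few_shot)
```

Passing an empty list of examples gives the zero-shot variant, where the model has only the task description to go on.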

Resources:

- Research Paper: OPT: Open Pre-trained Transformer Language Models (arxiv.org)

- GitHub: facebookresearch/metaseq

- Demo: A Watermark for LLMs

- Model card: facebook/opt-1.3b

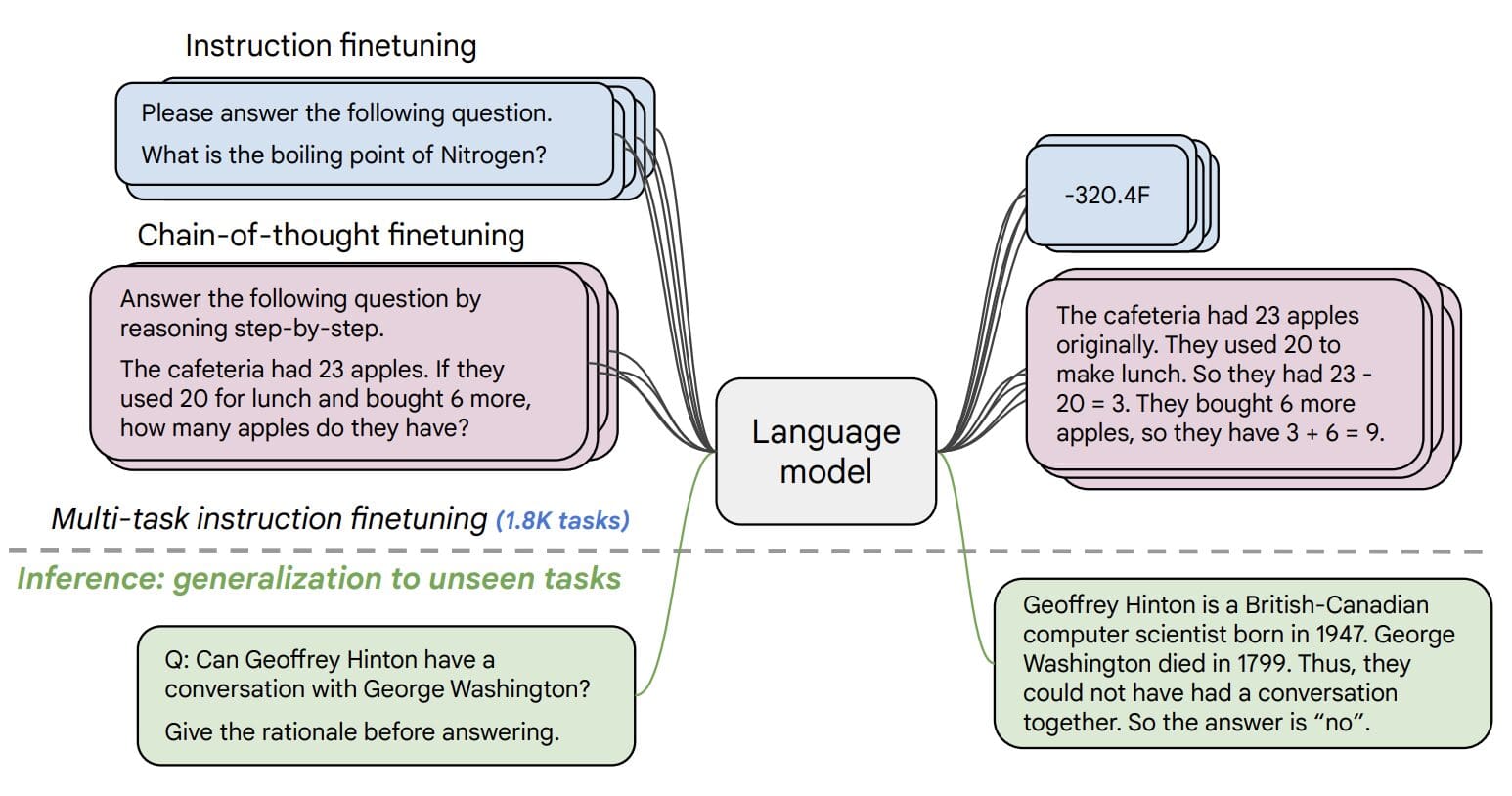

8. Flan-T5-XXL

Flan-T5-XXL is a T5 model fine-tuned on a collection of datasets phrased as instructions. Instruction fine-tuning dramatically improves performance across a variety of model classes, such as PaLM, T5, and U-PaLM. The Flan-T5-XXL model is fine-tuned on more than 1,000 additional tasks, which also cover more languages.

Image from Flan-T5-XXL
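
"Datasets phrased as instructions" means taking an ordinary labeled dataset and rewriting each row as a natural-language request with a text answer. The sketch below does this for a single entailment-style row using two templates. The templates, field names, and helper are hypothetical and are not taken from the Flan collection.

```python
# Rephrase one labeled dataset row as several instruction-style examples.
# Templates, field names, and the helper are hypothetical.

TEMPLATES = [
    "Does the premise entail the hypothesis?\n"
    "Premise: {premise}\nHypothesis: {hypothesis}",
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Answer yes or no: does the hypothesis follow from the premise?",
]


def to_instruction_examples(row):
    """Produce one (input, target) pair per template for a single labeled row."""
    target = "yes" if row["label"] == 1 else "no"
    return [
        {
            "input": template.format(
                premise=row["premise"], hypothesis=row["hypothesis"]
            ),
            "target": target,
        }
        for template in TEMPLATES
    ]


row = {
    "premise": "A dog is running in the park.",
    "hypothesis": "An animal is outside.",
    "label": 1,
}
for pair in to_instruction_examples(row):
    print(pair["input"], "->", pair["target"])
```

Using several phrasings per row is one way to keep a model from latching onto a single prompt wording.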

Resources:

- Research Paper: Scaling Instruction-Finetuned Language Models

- GitHub: google-research/t5x

- Demo: Chat Llm Streaming

- Model card: google/flan-t5-xxl

Conclusion

There are many open-source options available, and I have covered the popular ones. Open-source chatbots and models are getting better, and in the next few months, you may well see a new model that overtakes ChatGPT in terms of performance.

In this blog, I have provided a list of models and chatbot frameworks that can help you train and build chatbots similar to ChatGPT and GPT-4. Don’t forget to give them likes and stars.

Do let me know in the comment section if you have better suggestions. I would love to add them in the future.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in Technology Management and a bachelor's degree in Telecommunication Engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.