Building an image search service from scratch

By the end of this post, you should be able to build a quick semantic search model from scratch, no matter the size of your dataset.

Pages: 1 2

Text -> Text

Not so different after all

Embeddings for text

Taking a detour to the world of natural language processing (NLP), we can use a similar approach to index and search for words.

We loaded a set of pre-trained vectors from GloVe, which were obtained by crawling through all of Wikipedia and learning the semantic relationships between words in that dataset.

Just like before, we will create an index, this time containing all of the GloVe vectors. Then, we can search our embeddings for similar words.

Searching for said, for example, returns this list of [word, distance]:

['said', 0.0]['told', 0.688713550567627]['spokesman', 0.7859575152397156]['asked', 0.872875452041626]['noting', 0.9151610732078552]['warned', 0.915908694267273]['referring', 0.9276227951049805]['reporters', 0.9325974583625793]['stressed', 0.9445104002952576]['tuesday', 0.9446316957473755]

This seems very reasonable, most words are quite similar in meaning to our original word, or represent an appropriate concept. The last result (tuesday) also shows that this model is far from perfect, but it will get us started. Now, let’s try to incorporate both words and images in our model.

A sizable issue

Using the distance between embeddings as a method for search is a pretty general method, but our representations for words and images seem incompatible. The embeddings for images are of size 4096, while those for words are of size 300 — how could we use one to search for the other? In addition, even if both embeddings were the same size, they were trained in a completely different fashion, so it is incredibly unlikely that images and related words would happen to have the same embeddings randomly. We need to train a joint model.

Image <-> Text

Worlds collide

Let’s now create a hybrid model that can go from words to images and vice versa.

For the first time in this tutorial, we will actually be training our own model, drawing inspiration from a great paper called DeViSE. We will not be re-implementing it exactly, though we will heavily lean on its main ideas. (For another slightly different take on the paper, check out fast.ai’s implementation in their lesson 11.)

The idea is to combine both representations by re-training our image model and changing the type of its labels.

Usually, image classifiers are trained to pick a category out of many (1000 for Imagenet). What this translates to is that — using the example of Imagenet — the last layer is a vector of size 1000 representing the probability of each class. This means our model has no semantic understanding of which classes are similar to others: classifying an image of a cat as a dog results in as much of an error as classifying it as an airplane.

For our hybrid model, we replace this last layer of our model with the word vector of our category. This allows our model to learn to map the semantics of images to the semantics of words, and means that similar classes will be closer to each other (as the word vector for cat is closer to dog than airplane). Instead of a target of size 1000, with all zeros except for a one, we will predict a semantically rich word vector of size 300.

We do this by adding two dense layers:

- One intermediate layer of size 2000

- One output layer of size 300 (the size of GloVe’s word vectors).

Here is what the model looked like when it was trained on Imagenet:

Here is what it looks like now:

Training the model

We then re-train our model on a training split of our dataset, to learn to predict the word vector associated with the label of the image. For an image with the category cat for example, we are trying to predict the 300-length vector associated with cat.

This training takes a bit of time, but is still much faster than on Imagenet. For reference, it took about 6–7 hours on my laptop that has no GPU.

It is important to note how ambitious this approach is. The training data we are using here (80% of our dataset, so 800 images) is minuscule, compared to usual datasets (Imagenet has a million images, 3 orders of magnitude more). If we were using a traditional technique of training with categories, we would not expect our model to perform very well on the test set, and would certainly not expect it to perform at all on completely new examples.

Once our model is trained, we have our GloVe word index from above, and we build a new fast index of our image features by running all images in our dataset through it, saving it to disk.

Tagging

We can now easily extract tags from any image by simply feeding our image to our trained network, saving the vector of size 300 that comes out of it, and finding the closest words in our index of English words from GloVe. Let’s try with this image — it’s in the bottle class, though it contains a variety of items.

Here are the generated tags:

[6676, 'bottle', 0.3879561722278595][7494, 'bottles', 0.7513495683670044][12780, 'cans', 0.9817070364952087][16883, 'vodka', 0.9828150272369385][16720, 'jar', 1.0084964036941528][12714, 'soda', 1.0182772874832153][23279, 'jars', 1.0454961061477661][3754, 'plastic', 1.0530102252960205][19045, 'whiskey', 1.061428427696228][4769, 'bag', 1.0815287828445435]

That’s a pretty amazing result, as most of the tags are very relevant. This method still has room to grow, but it picks up on most of the items in the image fairly well. The model learns to extract many relevant tags, even from categories that it was not trained on!

Searching for images using text

Most importantly, we can use our joint embedding to search through our image database using any word. We simply need to get our pre-trained word embedding from GloVe, and find the images that have the most similar embeddings (which we get by running them through our model).

Generalized image search with minimal data.

Let’s start first with a word that was actually in our training set by searching for dog:

Results for search term “

dog"OK, pretty good results — but we could get this from any classifier that was trained just on the labels! Let’s try something harder by searching for the keyword ocean, which is not in our dataset.

Results for search term “

ocean"That’s awesome — our model understands that ocean is similar to water, and returns many items from the boat class.

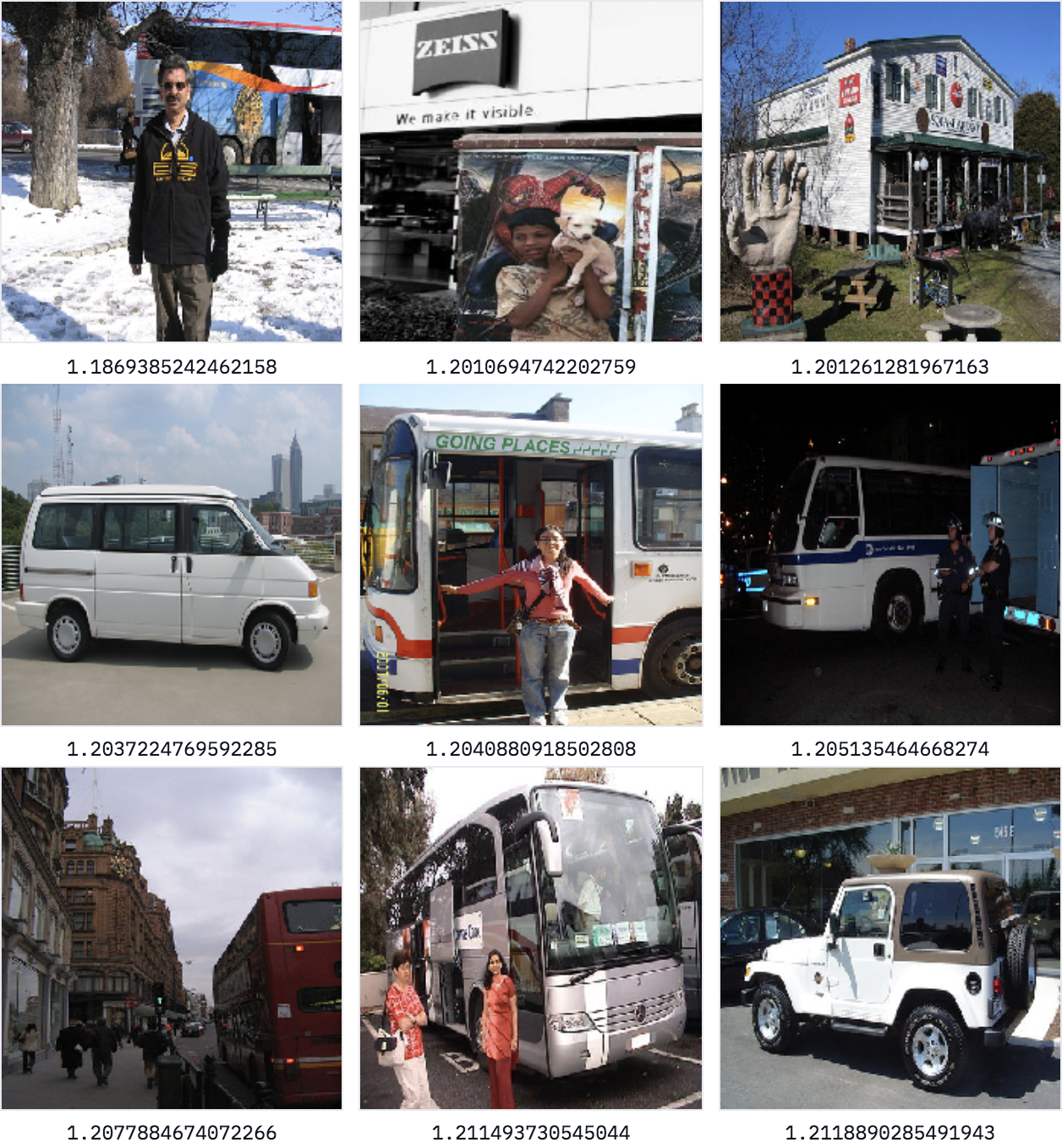

What about searching for street?

Results for search term “

street"Here, our images returned come from plenty of classes (car, dog, bicycles, bus, person), yet most of them contain or are near a street, despite us having never used that concept when training our model. Because we are leveraging outside knowledge through pre-trained word vectors to learn a mapping from images to vectors that is more semantically rich than a simple category, our model can generalize well to outside concepts.

Beyond words

The English language has come far, but not far enough to invent a word for everything. For example, at the time of publishing this article, there is no English word for “a cat lying on a sofa,” which is a perfectly valid query to type into a search engine. If we want to search for multiple words at the same time, we can use a very simple approach, leveraging the arithmetic properties of word vectors. It turns out that summing two word vectors generally works very well. So if we were to just search for our images by using the average word vector for cat and sofa, we could hope to get images that are very cat-like, very sofa-like, or have a cat on a sofa.

Getting a combined embedding for multiple words

Let’s try using this hybrid embedding and searching!

Results for search term “

cat"+"sofa"This is a fantastic result, as most those images contain some version of a furry animal and a sofa (I especially enjoy the leftmost image on the second row, which seems like a bag of furriness next to a couch)! Our model, which was only trained on single words, can handle combinations of two words. We have not built Google Image Search yet, but this is definitely impressive for a relatively simple architecture.

This method can actually extend quite naturally to a variety of domains (see this example for a joint code-English embedding), so we’d love to hear about what you end up applying it to.

Conclusion

I hope you found this post informative, and that it has demystified some of the world of content-based recommendation and semantic search. If you have any questions or comments, or want to share what you built using this tutorial, reach out to me on Twitter!

Want to learn applied Artificial Intelligence from top professionals in Silicon Valley or New York? Learn more about the Artificial Intelligenceprogram.

Are you a company working in AI and would like to get involved in the Insight AI Fellows Program? Feel free to get in touch.

Thanks to Stephanie Mari, Bastian Haase, Adrien Treuille, and Matthew Rubashkin.

Bio: Emmanuel Ameisen (@EmmanuelAmeisen) is Head of AI at Insight Data Science.

Original. Reposted with permission.

Related:

- How to solve 90% of NLP problems: a step-by-step guide

- Implementing ResNet with MXNET Gluon and Comet.ml for Image Classification

- Solve any Image Classification Problem Quickly and Easily

- Building a Visual Search Engine - Part 2: The Search Engine

- Hyperparameter Tuning Using Grid Search and Random Search in Python

- Elevate Your Search Engine Skills with Uplimit's Search with ML Course!

- Building a Visual Search Engine - Part 1: Data Exploration

- 311 Call Centre Performance: Rating Service Levels

- How to Train a BERT Model From Scratch

Pages: 1 2